AI and the User Perspective - accessibility use cases

This section explores current guidance and standards, and identifies which standards need to be considered when incorporating AI or machine learning (ML) technologies into development pipelines for accessible content creation.

Relevance of current standards and guidance

The W3C Web Accessibility Initiative (WAI) publishes three sets of guidelines that can assist in creating accessible content, and AI features could potentially support each of them. These guidelines cover web content, user agents and authoring tools.

The Web Content Accessibility Guidelines (WCAG) 2.2 contain several guidelines and success criteria (SC) that AI could potentially help address. Examples include SC 1.1.1 Non-text Content, through automatically generated alternative text, and SC 1.2.4 Captions (Live), through automated live captioning of time-based media.

In addition, the User Agent Accessibility Guidelines (UAAG) 2.0 could be read as supporting user agents, such as web browsers with generative AI features, that make real-time adjustments during browsing sessions. Such adjustments might include fixing colour contrast issues and improving poor heading structures by interpreting the page's code.

Furthermore, the Authoring Tool Accessibility Guidelines (ATAG) 2.0 could also offer support, particularly Part B, which focuses on supporting the production of accessible content. For example, authoring tools that automatically generate alternative text could support the creation of accessible content.

Alternative text for images

A number of machine learning-based tools, integrated into popular social media platforms as well as authoring tools, can create alternative text automatically. They use algorithms that scan visual materials, such as images, and determine their contents. Until recently, this automated process was considered so inaccurate that its usefulness was questioned [[RN3]]. Recent developments have improved the accuracy of automated alternative text. Criticism persists, however, because it provides limited detail and fails to recognise which information in an image is important.

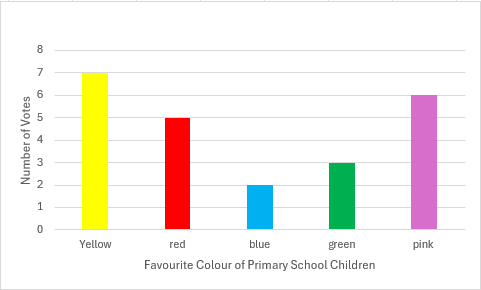

A good example is a popular image used to illustrate data (Twinkl, n.d.). The image is a classic bar graph of children's responses about their favourite colour, in which yellow received the most responses, with 9. An appropriate alternative text should capture the significant points of the graph in detail, such as its purpose, the labels on its X and Y axes, and the resulting data. The automated alternative text, however, simply describes the image as “a graph with different coloured bars”. While technically accurate, this description lacks the depth needed to convey the important details of the graph.

A second example uses an image from the James Webb Space Telescope (Figure 2). Publicly released images from the telescope are accompanied by manually written alternative text, so the manual description of Figure 2 can be compared with an automatically generated one. The manual alternative text reads, in part: “The image is divided horizontally by an undulating line between a cloudscape forming a nebula along the bottom portion and a comparatively clear upper portion. Speckled across both portions is a starfield, showing innumerable stars of many sizes. The smallest of these are small, distant, and faint points of light. The largest of these appear larger, closer, brighter, and more fully resolved with 8-point diffraction spikes. The upper portion of the image is bluish and has wispy translucent cloudlike streaks rising from the nebula below.”

By contrast, the automated alternative text presents a simplified version of the image with the brief description “a nebula in space with stars”. Once again, this comparison suggests a limitation of automated alternative text produced by machine learning. It represents the image and can deliver a basic understanding of it, but it cannot yet incorporate the level of detail required to capture the essence of the image.

Machine learning techniques embedded in authoring tools and other platforms may provide some alternative text. However, generative AI platforms that create images, videos and other visual media from text input tend not to provide alternative text for their outputs. This makes it difficult for people who are blind or have low vision to obtain a meaningful interpretation of AI-generated visual content.

Automatic Speech Recognition for captioning

Another common accessibility support is live captioning through Automatic Speech Recognition (ASR). In ASR, generative AI is used to sample speech and convert it into captions in the same language, or into subtitles in a different language.

Until recently, ASR was considered ineffective. Captions in the same language were often inaccurate. Translated subtitles were poorer still, because an additional machine learning step attempted to convert the already imperfect ASR output into another language. Caption quality has since become increasingly reliable, with an approximate accuracy of 85%, and the ability to translate in real time has improved substantially [[RN5]].
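Caption accuracy figures such as the 85% mentioned above are commonly derived from the word error rate (WER). WER is the number of word substitutions, deletions and insertions needed to turn the ASR output into a reference transcript, divided by the number of words in the reference. Accuracy is then often reported as 1 - WER. The source does not state how its figure was measured, so the sketch below is only an illustration of this common metric. The function name `word_error_rate` and the sample sentences are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


# Hypothetical example: a reference caption and an ASR output with two errors.
reference = "the quick brown fox jumps over the lazy dog"
hypothesis = "the quick brown fox jump over a lazy dog"
wer = word_error_rate(reference, hypothesis)
print(f"WER = {wer:.2f}, accuracy = {1 - wer:.0%}")  # → WER = 0.22, accuracy = 78%
```

WER counts every word equally. It therefore says nothing about whether a misrecognised word changes the meaning, which is one reason a headline accuracy figure can overstate how usable captions are for Deaf viewers.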

ASR captions can be valuable as a complementary tool alongside curated content. However, the Deaf community still generally perceives them as lacking in accuracy, which prevents ASR from being considered a truly beneficial feature. ASR has several other issues:

- processing delays, because most current solutions require an internet connection so that the generative AI processing can take place online;
- missing grammar and punctuation;
- no support for the block captioning used with pre-recorded materials;
- the need for good quality audio in a quiet environment for ASR to produce its best output.

However, some of these issues are beginning to be addressed. With the introduction of neural processing units (NPUs) into consumer devices, ASR captions can be generated on the device rather than online. Early testing suggests that this significantly improves the speed at which captions are presented. It also appears to improve auto-correction when ASR misunderstands a word or phrase.

These advancements do not resolve all the issues of ASR, and ASR is still not adequately accessible as a standalone feature. Nevertheless, they demonstrate that current generative AI processes can potentially contribute to accessibility improvements.

ASR technologies may also be applied to audio-only material, where pre-recorded content is provided to a generative AI process to produce a transcript. Removing the real-time constraint may increase reliability enough for some specialist writing tools to make use of this function. Even so, issues with accuracy, spelling, grammar and punctuation persist.

Plain language

Current demonstrations of generative AI often use examples of language being translated or simplified to showcase the potential of these tools. Popular AI platforms can rewrite language to a specific word count, rhyming pattern or subject matter, and these results are often viewed as successful. However, converting complex content to a lower secondary reading level often requires human intervention.
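A readability formula offers a rough, automated check on whether simplified output is approaching a target reading level. The sketch below uses the Flesch-Kincaid grade level:

0.39 × (words / sentences) + 11.8 × (syllables / words) − 15.59

It makes two assumptions. The syllable counter is a crude vowel-group heuristic, and mapping a US grade level onto "lower secondary reading ability" is left to the reader. The function names are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic: count groups of consecutive vowels, minimum one."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" (as in "make") usually adds no syllable.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate US school grade level needed to read the text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


original = ("Notwithstanding considerable organisational complexity, the committee "
            "unanimously ratified the comprehensive accessibility recommendations.")
simplified = "The group agreed to all the advice. It will make the site easier to use."
print(round(flesch_kincaid_grade(original), 1), round(flesch_kincaid_grade(simplified), 1))
```

Formulas like this measure only sentence and word length. They cannot detect the literal-language, tense or nested-clause problems discussed here, so they complement human review rather than replace it.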

Plain language techniques such as using common words, defining unfamiliar words and removing double negatives show where generative AI can achieve some positive effects. However, other plain language concepts highlight current limitations of AI. These include converting text into literal language, handling different grammatical tenses and simplifying text with nested clauses. For example, the nursery rhyme “Mary Had a Little Lamb” was translated into another language and then back into English. In the process, the line “its fleece was white as snow” became “it snowed sheep hair”. The other lines were translated largely accurately, but the example shows that generative AI still struggles with literal language, regional contexts and language structures.

Automated language detection

Within WCAG 2.2, Guideline 3.1 requires the language of a page to be programmatically determined (SC 3.1.1 Language of Page). It also requires the same for any change of language within parts of the content (SC 3.1.2 Language of Parts). This allows assistive technologies to recognise a change of language and adjust their language selection accordingly. Machine learning could improve the identification of the language being used, enabling assistive technologies such as screen readers to switch to that language where it is supported.

There are few examples on the web where the language of content is determined by machine learning processes rather than coded directly. Even so, the ability of assistive technologies to recognise the language being presented immediately could provide a considerable benefit.
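One long-established approach to language identification compares a text's words against lists of very common words (stop words) in each candidate language. The sketch below is a toy illustration of that idea, not the machine learning approach discussed above. The three tiny word lists and the function names are assumptions made for illustration; production language identifiers use far richer statistical models. The detected language tag is then written into an HTML `lang` attribute, which is how a change of language is exposed to assistive technologies.

```python
import html
import re
from typing import Optional

# Hypothetical, deliberately tiny stop-word lists keyed by BCP 47 language tag.
STOP_WORDS = {
    "en": {"the", "and", "is", "of", "to", "in", "it", "on", "with"},
    "fr": {"le", "la", "les", "et", "est", "des", "sur", "il", "avec"},
    "de": {"der", "die", "das", "und", "ist", "auf", "nicht", "mit"},
}


def detect_language(text: str) -> Optional[str]:
    """Return the language whose stop words occur most often, or None."""
    words = re.findall(r"\w+", text.lower())
    scores = {lang: sum(w in stops for w in words)
              for lang, stops in STOP_WORDS.items()}
    best = max(scores, key=scores.get)
    # Return None rather than guess when no stop words were found at all.
    return best if scores[best] > 0 else None


def mark_language(text: str, page_lang: str = "en") -> str:
    """Wrap text in a span with a lang attribute if it differs from the page."""
    lang = detect_language(text)
    escaped = html.escape(text)
    if lang is None or lang == page_lang:
        return escaped
    return f'<span lang="{lang}">{escaped}</span>'


print(mark_language("le chat est sur la table"))
# → <span lang="fr">le chat est sur la table</span>
```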

Colour contrast

Machine learning could also help remediate colour contrast issues as they are detected. Automated testing tools can already detect some colour contrast issues [[RN11]], so real-time remediation is plausible in two cases. The first is when detected text falls below a 4.5:1 contrast ratio against its background, the SC 1.4.3 Contrast (Minimum) threshold for normal-size text. The second is when user interface components fall below the 3:1 ratio required by SC 1.4.11 Non-text Contrast. Generative AI processes could then increase the contrast of these elements through colour adjustments, while largely preserving the colours intended by the original author.
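WCAG defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colours. The sketch below computes that ratio and then applies one deliberately simple remediation strategy. It blends the text colour toward black or white, whichever contrasts more with the background, until the 4.5:1 threshold is met. Scaling toward black or white roughly keeps the colour's hue. The blending strategy and the function names are assumptions chosen for illustration; a generative or perceptual approach might preserve the author's intent better.

```python
BLACK, WHITE = (0, 0, 0), (255, 255, 255)


def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.2 definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple, bg: tuple) -> float:
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)),
                             reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def fix_text_contrast(fg: tuple, bg: tuple, target: float = 4.5,
                      steps: int = 100) -> tuple:
    """Blend fg toward black or white in small steps until target is met."""
    if contrast_ratio(fg, bg) >= target:
        return fg
    # Move toward whichever extreme contrasts more with the background.
    extreme = BLACK if contrast_ratio(BLACK, bg) >= contrast_ratio(WHITE, bg) else WHITE
    for i in range(1, steps + 1):
        t = i / steps
        candidate = tuple(round(c + (e - c) * t) for c, e in zip(fg, extreme))
        if contrast_ratio(candidate, bg) >= target:
            return candidate
    return extreme


grey, white = (119, 119, 119), WHITE  # #777777 text on a white background
print(round(contrast_ratio(grey, white), 2))  # → 4.48
print(fix_text_contrast(grey, white))
```

Blending in small steps returns the first, and therefore least altered, colour along that path that meets the target. This reflects the aim of largely preserving the original colours.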

Heading structure

Using bold text to make content look like a heading, without providing a programmatically determined heading structure, remains a significant accessibility issue in web content. Using current technologies, a generative AI feature could visually identify headings and their nested content on web pages or app screens. No implementation of this is currently known. Even so, remediating heading structure represents a significant opportunity to improve readability and navigation, should generative AI prove capable of addressing this issue effectively.
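The detection step can be approximated with a simple heuristic, sketched below using Python's standard `html.parser`. It flags short paragraphs whose only visible content is wrapped in `<b>` or `<strong>`, treating them as probable visual-only headings. The rule, the eight-word limit and the class name `FakeHeadingFinder` are hypothetical assumptions. A generative AI approach would instead weigh visual cues such as font size and position, and would still need to infer the correct heading level.

```python
from html.parser import HTMLParser


class FakeHeadingFinder(HTMLParser):
    """Flag <p> elements whose only content is bold text (hypothetical heuristic)."""

    BOLD = {"b", "strong"}

    def __init__(self, max_words: int = 8):
        super().__init__()
        self.max_words = max_words
        self.candidates = []       # text of paragraphs that look like headings
        self._in_p = False
        self._bold_depth = 0
        self._bold_text = []
        self._plain_text = False   # True once non-bold text appears in the <p>

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_p, self._bold_depth = True, 0
            self._bold_text, self._plain_text = [], False
        elif tag in self.BOLD and self._in_p:
            self._bold_depth += 1

    def handle_endtag(self, tag):
        if tag in self.BOLD and self._bold_depth:
            self._bold_depth -= 1
        elif tag == "p" and self._in_p:
            text = " ".join("".join(self._bold_text).split())
            if text and not self._plain_text and len(text.split()) <= self.max_words:
                self.candidates.append(text)
            self._in_p = False

    def handle_data(self, data):
        if not self._in_p:
            return
        if self._bold_depth or not data.strip():
            self._bold_text.append(data)   # bold text, or whitespace between bold runs
        else:
            self._plain_text = True        # ordinary text: not a fake heading


finder = FakeHeadingFinder()
finder.feed("<p><strong>Opening hours</strong></p>"
            "<p>We are open <b>daily</b>.</p><h2>Contact</h2>")
finder.close()
print(finder.candidates)  # → ['Opening hours']
```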

Adjustment of visual spacing

Some web browsers already offer the ability to restructure web content to improve readability. As machine learning continues to advance, such features are likely to improve further. They may also address accessibility concerns relating to text, word and line spacing, to better support people with cognitive or print disabilities.
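WCAG 2.2 SC 1.4.12 Text Spacing lists the spacing values that content must tolerate without losing content or functionality:

- line height of at least 1.5 times the font size;
- spacing following paragraphs of at least 2 times the font size;
- letter spacing of at least 0.12 times the font size;
- word spacing of at least 0.16 times the font size.

A browser feature that adjusts spacing might start from those values. The sketch below turns them into a user style sheet, optionally scaled up. Emitting CSS text, the `scale` parameter and the function name are assumptions for illustration. The criterion itself does not require pages to use these values, only to survive users applying them.

```python
# (property, multiple of the font size, CSS unit) from WCAG 2.2 SC 1.4.12.
SC_1_4_12_SPACING = [
    ("line-height", 1.5, ""),        # unitless, so it follows each element's font size
    ("letter-spacing", 0.12, "em"),
    ("word-spacing", 0.16, "em"),
]
PARAGRAPH_SPACING_EM = 2.0  # spacing following paragraphs


def text_spacing_stylesheet(scale: float = 1.0) -> str:
    """Return a user style sheet applying the SC 1.4.12 spacing values.

    A scale above 1 increases every value proportionally above those values.
    """
    if scale < 1.0:
        raise ValueError("scale below 1 would drop under the SC 1.4.12 values")
    decls = "; ".join(f"{prop}: {value * scale:g}{unit} !important"
                      for prop, value, unit in SC_1_4_12_SPACING)
    paragraph = f"margin-bottom: {PARAGRAPH_SPACING_EM * scale:g}em !important"
    return f"* {{ {decls}; }}\np {{ {paragraph}; }}"


print(text_spacing_stylesheet())
```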

Link purpose

A widespread issue online is the use of non-descriptive link text such as “click here” or “read more”, which is addressed by WCAG 2.2 SC 2.4.4 Link Purpose (In Context). The current remedy is to write descriptive link text, but generative AI offers an opportunity to address the issue in real time. An AI process could follow a non-descriptive link to its destination and determine what the target content is. It could then present the link to users with text that better indicates its purpose.
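The detection half of this idea is straightforward to sketch. The example below flags links whose text is a generic phrase and proposes replacement text for them. As a stand-in for the AI step, it uses a title for the destination page. The phrase list, the class and function names, and the use of page titles are all hypothetical assumptions. Fetching pages is replaced by a dictionary so that the sketch runs offline. A real system would need to summarise the target content and check the result with users.

```python
import re
from html.parser import HTMLParser

# Hypothetical list of generic link phrases of the kind SC 2.4.4 discourages.
GENERIC_LINK_TEXT = {"click here", "here", "read more", "more", "learn more", "link"}


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a href> in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, " ".join("".join(self._text).split())))
            self._href = None


def suggest_link_text(html_doc: str, page_titles: dict) -> dict:
    """Map each non-descriptive link's href to suggested link text.

    page_titles stands in for fetching and analysing each target page.
    """
    collector = LinkCollector()
    collector.feed(html_doc)
    collector.close()
    suggestions = {}
    for href, text in collector.links:
        normalised = re.sub(r"[^\w\s]", "", text).strip().lower()
        if normalised in GENERIC_LINK_TEXT and href in page_titles:
            suggestions[href] = page_titles[href]
    return suggestions


doc = ('<p>Our report is out. <a href="/report">Click here</a>. '
       '<a href="/contact">Contact us</a></p>')
titles = {"/report": "Annual accessibility report", "/contact": "Contact us"}
print(suggest_link_text(doc, titles))  # → {'/report': 'Annual accessibility report'}
```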

Sign language

In WCAG, sign language is addressed by SC 1.2.6 Sign Language (Prerecorded), a Level AAA success criterion. Policy and legislative frameworks most commonly adopt conformance up to Level AA, so Level AAA criteria such as this are rarely required. As a result, support for sign language in time-based media such as online videos is limited.

However, generative AI now makes it feasible to translate from text or symbol-based languages into sign language, and some websites and movie apps already offer a limited version of this capability. This shows promise for the future use of sign language, but two factors remain obstacles. Generative AI is still limited in how effectively it translates sign language, and sign language has many regional variations across countries. Together, these mean a fully effective automated solution is not yet possible.