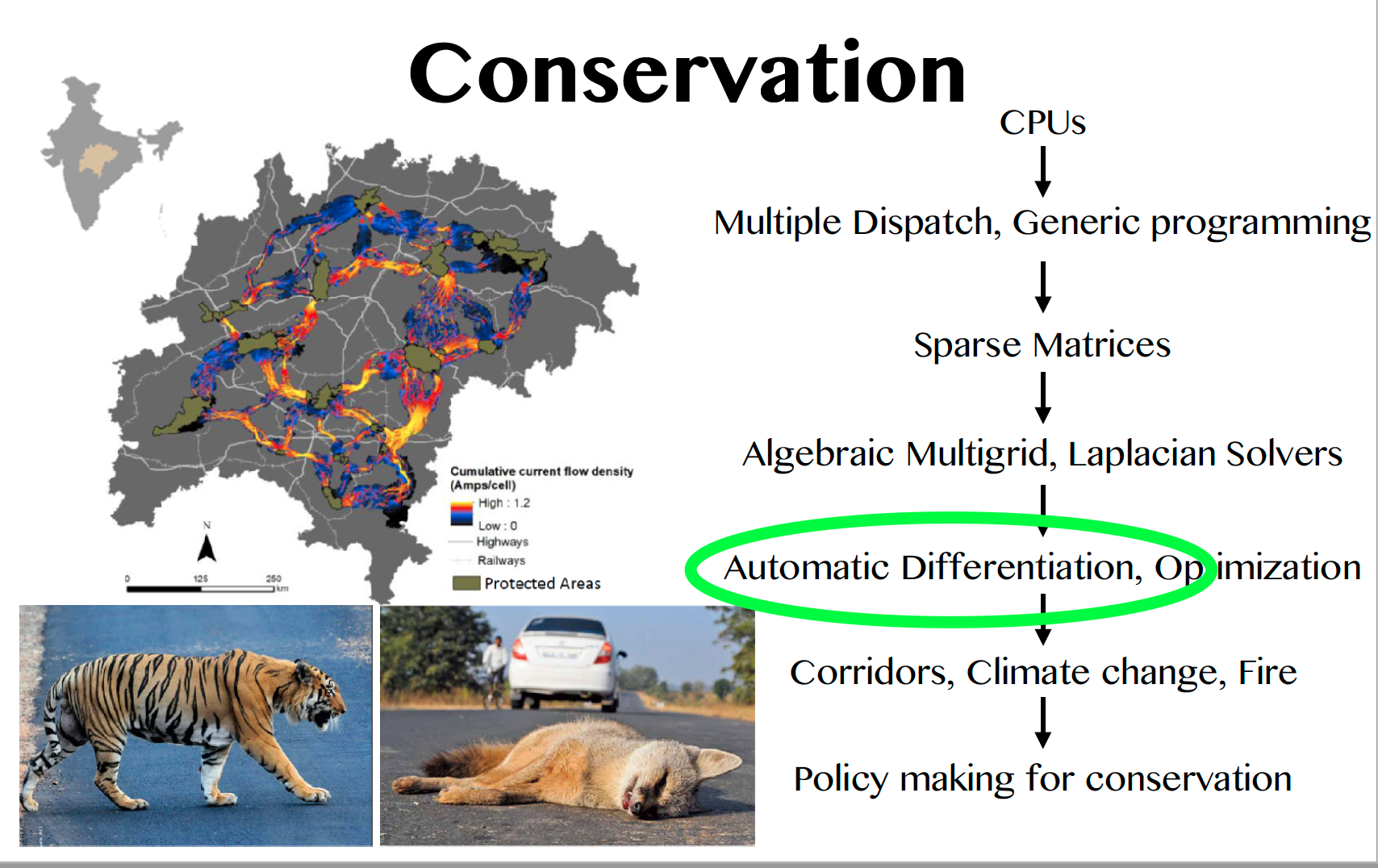

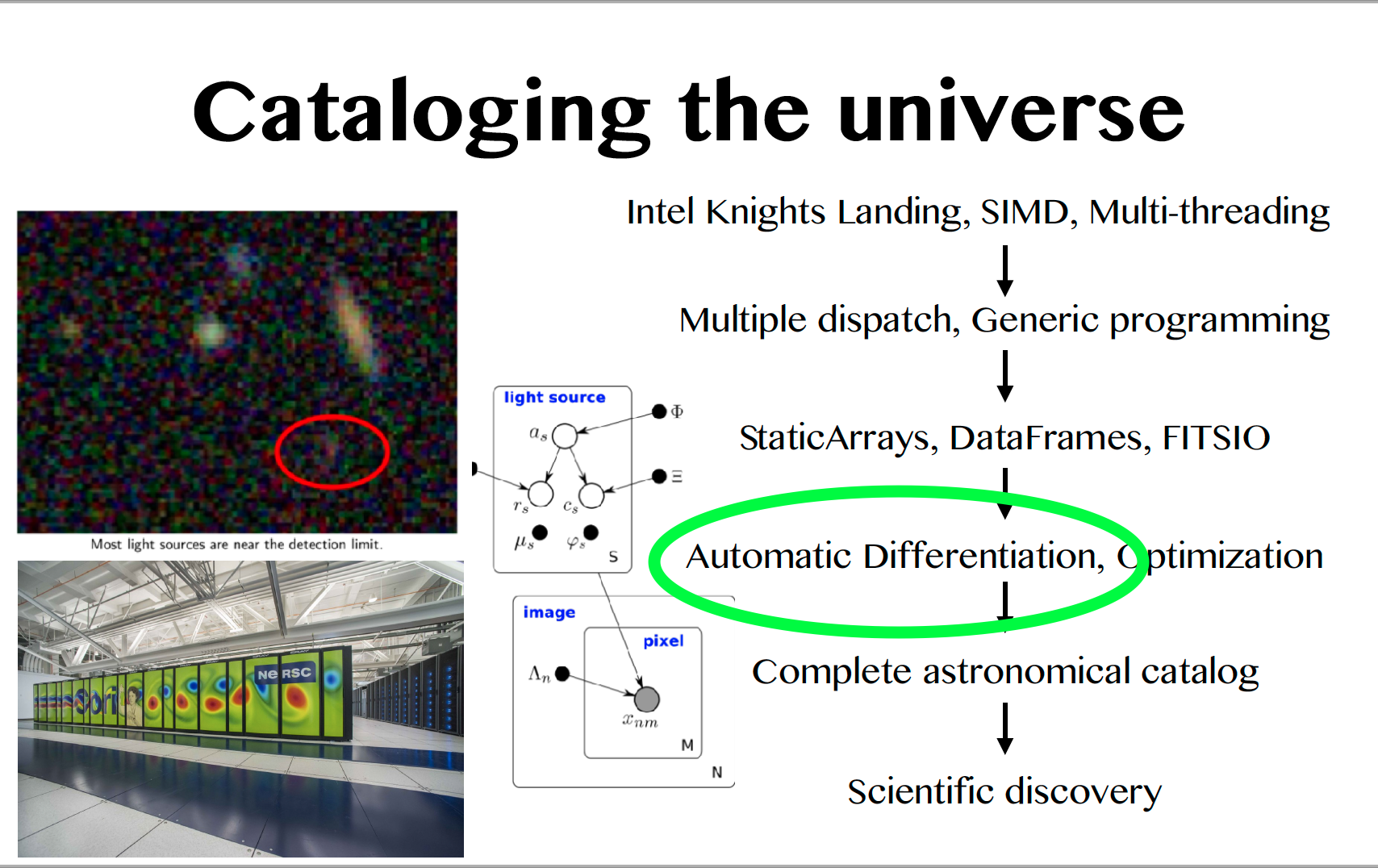

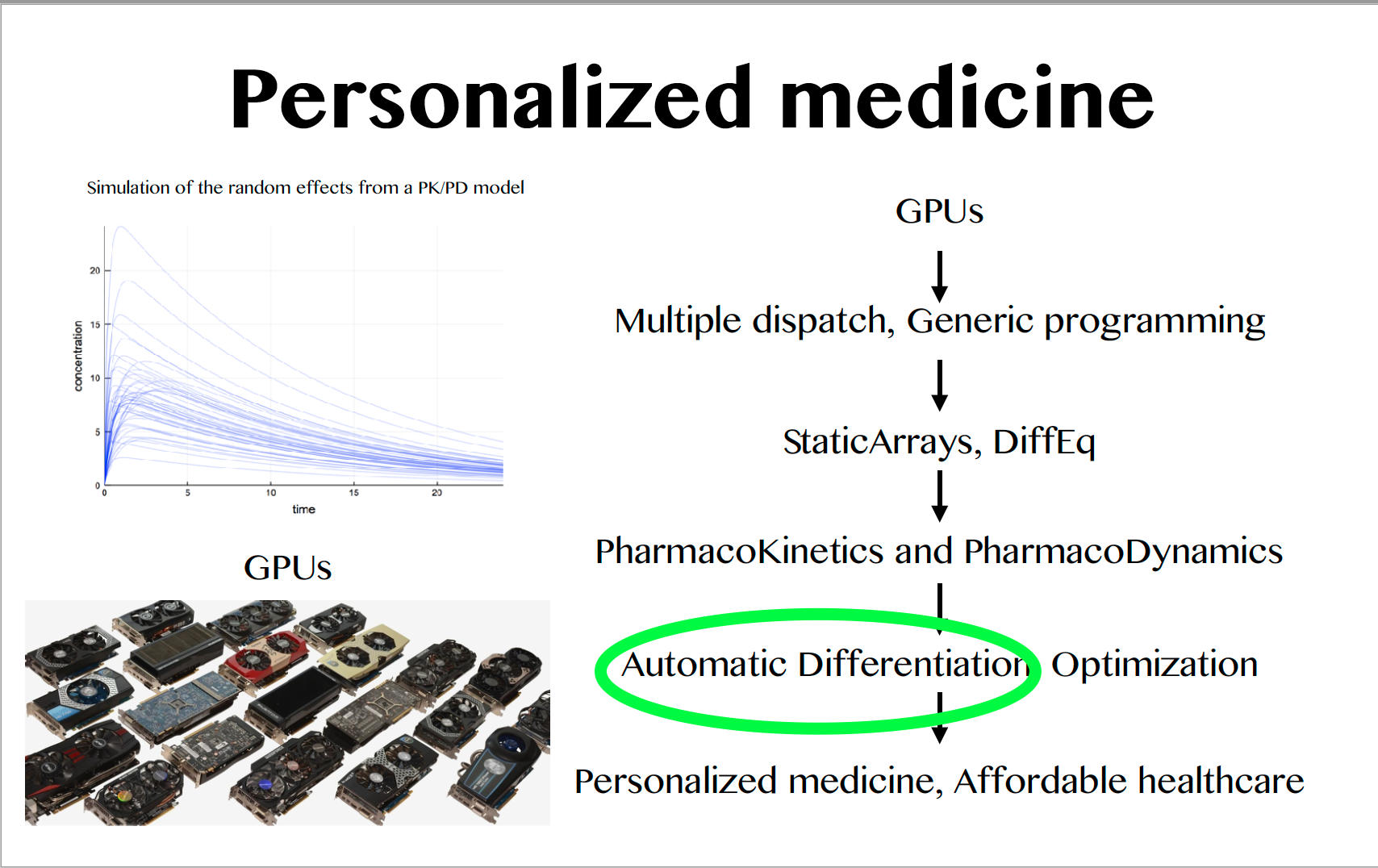

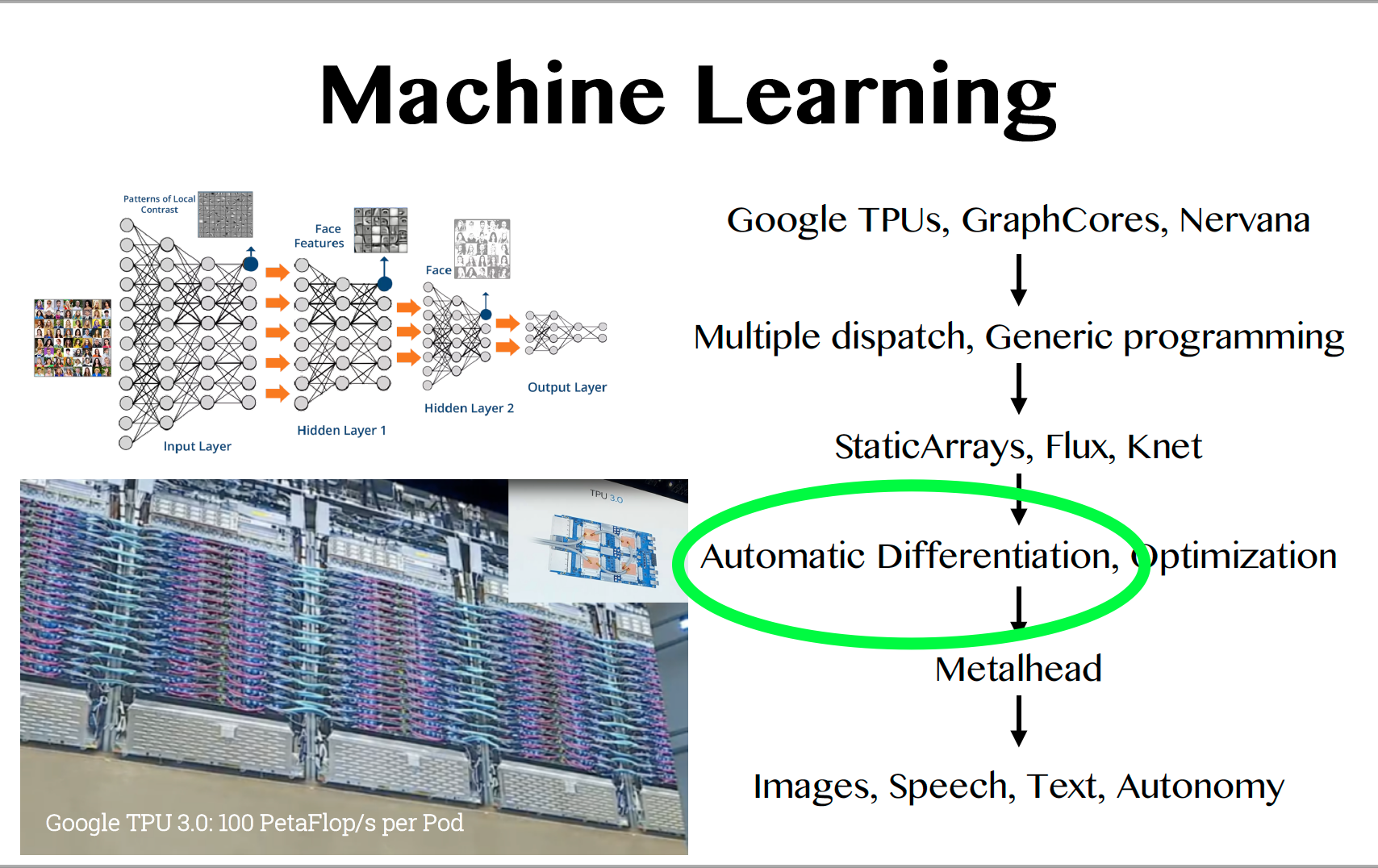

# ChainRules

<br>
.image-30[]
<br>
<br>

.row[
.col[**Lyndon White**]
.col[Research Software Engineer]
]
.row[
.image-30[]
.col[JuliaCon 2020]
.image-50[]
]

---

.image-float[]

# Invenia is hiring

Invenia has over 30 Developers, Research Software Engineers, Machine Learning Researchers, and Power Systems Researchers working full-time in Julia; **and we would like to have more.**

Come join us and contribute to our codebase of over <br> **400,000 lines of Julia code**.

.col[**Also come see our JuliaCon 2020 talks**]
.row[
.col[
ChainRules.jl<br>
Fast GPs for time series<br>
HydroPowerModels.jl<br>
NamedDims.jl<br>
]
.col[
NeuralProcesses.jl<br>
ScoreDrivenModels.jl<br>
Fancy Array Indexing BoF<br>
Julia In Production BoF<br>
]
]

---

# Ya Humans

.row[
.image-60[] .image-60[] .image-60[] .image-60[]
]
.row[
.col[.image-50[] Jarrett Revels]
.col[.image-50[] Alex Arslan]
.col[.image-50[] Seth Axen]
]
.row[
.col[.image-40[] Simeon Schaub]
.col[.image-40[] Yingbo Ma]
]

---

# Thanks also

.row[
.col[
* Wessel Bruinsma
* Takafumi Arakaki
* Simon Etter
* Shashi Gowda
* Rory Finnegan
* Roger Luo
* Mike Innes
* Michael Abbott
* Mason Protter
]
.col[
* Jeffrey Sarnoff
* James Bradbury
* Eric Davies
* Curtis Vogt
* Christopher Rackauckas
* Anton Isopoussu
* Antoine Levitt
* Andrew Fitzgibbon
]
]

---

# Why AutoDiff?

.row[
.col[
.image-90[ ]
.image-90[ ]
]
.col[
.image-90[ ]
.image-90[ ]
]
]

**JuliaCon 2018 Founder's Keynote – The Future.**<br>
Jeff Bezanson, Stefan Karpinski, Viral Shah, Alan Edelman

---

# How does Forward Mode AD work?

Forward-mode AD means replacing every function with a function that calculates the primal result and pushes forward the derivative.

--

How do we get such a function?
Either we have a `frule` giving us one, or we open up the function and replace every function inside it with such a propagating function.

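
To make "replace every function with a propagating function" concrete, here is a minimal sketch in plain Julia. It assumes two hand-written rules under the hypothetical name `sketch_frule`; this is an illustration only, not the ChainRules `frule` API, and the names `foo_forward`, `dx`, `du`, `dv` are likewise made up for this example.

```julia
# Hypothetical forward rules (NOT the ChainRules API):
# each takes the input and its tangent, and returns (primal, pushed-forward tangent).
sketch_frule(::typeof(sin), x, dx) = (sin(x), cos(x) * dx)
sketch_frule(::typeof(asin), x, dx) = (asin(x), dx / sqrt(1 - x^2))

# Forward-mode version of foo(x) = asin(sin(x)):
# every call inside foo is replaced by its propagating counterpart.
function foo_forward(x, dx)
    u, du = sketch_frule(sin, x, dx)
    v, dv = sketch_frule(asin, u, du)
    return v, dv
end

# Seed the input tangent with 1.0 to get the derivative of foo at pi/4.
v, dv = foo_forward(pi / 4, 1.0)
```

The primal value and the derivative travel together through the function, so nothing needs to be stored for later.
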
---

## Let's do AD by hand: forward-mode

```julia
function foo(x)
    u = sin(x)
    v = asin(u)
    return v
end
```
```
foo (generic function with 1 method)
```

.row[
.col[
```julia-repl
julia> x = π/4;

julia> ẋ = 1.0;

julia> u, u̇ = frule((NO_FIELDS, ẋ), sin, x)
(0.7071067811865475, 0.7071067811865476)

julia> v, v̇ = frule((NO_FIELDS, u̇), asin, u)
(0.7853981633974482, 1.0)

julia> v̇
1.0
```
]
.col[
$\dot{x}=\textcolor{blue}{\dfrac{\partial x}{\partial x}}$
<br><br>
$\dot{u}= \textcolor{green}{\dfrac{\partial u}{\partial x}} =\dfrac{\partial u}{\partial x} \textcolor{blue}{\dfrac{\partial x}{\partial x}}$<br><br>
$\dot{v}= \textcolor{purple}{\dfrac{\partial v}{\partial x}} =\dfrac{\partial v}{\partial u} \textcolor{green}{\dfrac{\partial u}{\partial x}}$
]
]

---

# How does Reverse Mode AD work?

Reverse-mode AD means replacing every function with a function that calculates the primal result and stores the pullback onto a tape. At the end, the tape is composed backwards to pull the gradient all the way back to the inputs.

--

How do we get such a function that tells us the pullback?
Either we have an `rrule` giving us one, or we open up the function and replace every function inside it with such a propagating function.

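
The tape idea can be sketched in a few lines of plain Julia. This assumes hand-written rules under the hypothetical name `sketch_rrule` and a tape that is just a vector of closures; it is an illustration only, not the ChainRules `rrule` API, and it is not how any particular AD system implements its tape.

```julia
# Hypothetical reverse rules (NOT the ChainRules API):
# each returns (primal, pullback), where the pullback maps an output
# gradient back to an input gradient.
sketch_rrule(::typeof(sin), x) = (sin(x), dy -> dy * cos(x))
sketch_rrule(::typeof(asin), x) = (asin(x), dy -> dy / sqrt(1 - x^2))

# Reverse-mode version of foo(x) = asin(sin(x)).
function foo_reverse(x)
    tape = Function[]

    # Forward pass: compute primals and record each pullback onto the tape.
    u, u_pullback = sketch_rrule(sin, x)
    push!(tape, u_pullback)
    v, v_pullback = sketch_rrule(asin, u)
    push!(tape, v_pullback)

    # Backward pass: seed with 1.0 and apply the pullbacks in reverse order.
    grad = 1.0
    for pullback in reverse(tape)
        grad = pullback(grad)
    end
    return v, grad
end

v, x_grad = foo_reverse(pi / 4)
```

Unlike the forward-mode case, the pullbacks must be kept until the forward pass has finished, which is why a tape (or something equivalent) is needed.
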
---

## Let's do AD by hand: reverse-mode

```julia
function foo(x)
    u = sin(x)
    v = asin(u)
    return v
end
```

First the forward pass, computing the pullbacks, which we would record onto the tape.

```julia-repl
julia> x = π/4
0.7853981633974483

julia> u, u_pullback = rrule(sin, x)
(0.7071067811865475, ChainRules.var"#477#sin_pullback#117"{Float64}(0.7853981633974483))

julia> v, v_pullback = rrule(asin, u)
(0.7853981633974482, ChainRules.var"#501#asin_pullback#123"{Float64}(0.7071067811865475))
```

---

## Let's do AD by hand: reverse-mode

```julia
function foo(x)
    u = sin(x)
    v = asin(u)
    return v
end
```

Then the backward pass, calculating gradients.

.row[
.col[
<br>
```julia-repl
julia> v̅ = 1;

julia> _, u̅ = v_pullback(v̅)
(ChainRulesCore.Zero(), 1.414213562373095)

julia> _, x̄ = u_pullback(u̅)
(ChainRulesCore.Zero(), 1.0)

julia> x̄
1.0
```
]
.col[
$\bar{v}=\textcolor{blue}{\dfrac{\partial v}{\partial v}}$
<br><br>
$\bar{u}=\textcolor{green}{\dfrac{\partial v}{\partial u}} =\textcolor{blue}{\dfrac{\partial v}{\partial v}}\dfrac{\partial v}{\partial u}$<br><br>
$\bar{x}= \textcolor{purple}{\dfrac{\partial v}{\partial x}} =\textcolor{green}{\dfrac{\partial v}{\partial u}} \dfrac{\partial u}{\partial x}$
<br><br>
]
]

---

<br>
<br>
.row[
.col[
# A series of needs
<br>
.image-30[]
]
]

---

# What does AD need?

* Ability to decompose functions down into primitive operations that it has **rules** for.
* Ability to recompose those rules and the results to get overall derivatives.
* A collection of those **rules**: ChainRules

---

# Why does AD need rules?

* Fundamentally, it needs rules for the instruction set: `+`, `*`, etc.
* Rules insert domain knowledge about the best way to find a derivative. Extreme example: *QuadGK + Fundamental Theorem of Calculus*: differentiating the integral just gives back the integrand.
* It needs rules to handle things the AD can't deal with (e.g. Zygote's current lack of mutation support).

.funfact[
Instruction set size varies a lot across ADs. E.g.
PyTorch, Jax, MxNet have an instruction set that is $\approx~\text{Numpy's API}$. Enzyme has an instruction set that is the LLVM instruction set. ] --- # What does ChainRules Need? -- ### An AD Agnostic System for Writing Rules ChainRulesCore.jl -- ### An inventory of actual rules for Base and StdLibs ChainRules.jl -- ### A way to test they are right ChainRulesTestUtils.jl --- # The ChainRules project fills those needs <img src="data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAC7gAAAIICAIAAABxJTSIAAAABmJLR0QA/wD/AP+gvaeTAAAgAElEQVR4nOzdZ1hUZ/7/8e8MRVAURFBEbCj2gmBvoGIQG2rsUYlJ0GSTGF2TVbN2s67GRI0a3WDsvQYrGrsSUSxRROxYURGkSG8z/wdn//xcY4wizIHh/Xqw1/c+c+a+P7B7sQ/8XOdo9Hq9AAAAAAAAAAAAAAAAAMZOq3YAAAAAAAAAAAAAAAAAwBAoygAAAAAAAAAAAAAAAKBYoCgDAAAAAAAAAAAAAACAYoGiDAAAAAAAAAAAAAAAAIoFijIAAAAAAAAAAAAAAAAoFijKAAAAAAAAAAAAAAAAoFigKAMAAAAAAAAAAAAAAIBigaIMAAAAAAAAAAAAAAAAigWKMgAAAAAAAAAAAAAAACgWKMoAAAAAAAAAAAAAAACgWKAoAwAAAAAAAAAAAAAAgGKBogwAAAAAAAAAAAAAAACKBYoyAAAAAAAAAAAAAAAAKBYoygAAAAAAAAAAAAAAAKBYoCgDAAAAAAAAAAAAAACAYoGiDAAAAAAAAAAAAAAAAIoFijIAAAAAAAAAAAAAAAAoFijKAAAAAAAAAAAAAAAAoFigKAMAAAAAAAAAAAAAAIBigaIMAAAAAAAAAAAAAAAAigWKMgAAAAAAAAAAAAAAACgWKMoAAAAAAAAAAAAAAACgWKAoAwAAAAAAAAAAAAAAgGKBogwAAAAAAAAAAAAAAACKBYoyAAAAAAAAAAAAAAAAKBYoygBAvknLTktIT1A7BQAAAAAAAAAAAADg5UzVDgAARU9sauzpqNMRMRG34m7djLt5L/FefHr8s4xnmTmZufdYmFo4WDlUKl3Jwcqhtl3txhUaN6rQyKWci4nGRMXkAAAAAAAAAAAAAFCcafR6vdoZAKAISMxIDLoRFHQzKOR+yI24G3nbpLR5ac9qnl7OXl7OXvXs6+VvQgAAAAAAAAAAAADAq1GUAYBXSc5M3nR506bwTUfvHM3SZb36ZktTS3MT88SMxNfZua5d3UENBw1qMKimbc38SAoAAAAAAAAAAAAA+AsUZQDg5S5GX1xyZsmG8A3PMp698FEJkxLuju5uFd1qlatV07ZmdZvq5UqWsy5hbW5inntPalbqg2cPolOi7yXeC38SfvHxxYvRFx8mPfzjQR5VPUa3HN2zdk+tRluwPxIAAAAAAAAAAAAAFG8UZQDgRWcfnp1xfMaua7v08n9/ITWicXd0963t6+Xs5VbR7flOzOu7GXfz0O1DhyIP7bu5Lykz6fmPatrW/LL1lx82+dBUa/q2PwAAAAAAAAAAAAAA4GUoygDA/7n+9PrYX8fuvr77+Yt17er6u/v3r9+/UulK+XVQWnbarmu7NoRv2HN9z/NvdKpdrvbMTjN71+mt0Wjy6ywAAAAAAAAAAAAAgIKiDACIiCRnJv/rxL/mhszNzMlUrmg12j51+4xqMapdlXYFd25UUtSi0EUB5wLi0uJyL7ar0i6gR0AduzoFdy4AAAAAAAAAAAAAF
EMUZQBAjt456hfody/xnrI00Zj0r99/YvuJ9ezrGSZASlbK4jOL/33i3/Hp8coVC1OLSe0nfdXmKzOtmWEyAAAAAAAAAAAAAIDRoygDoFjL0mVNPjL529++1el1ypW2Vdou9Fno6uBq+DDx6fEzT8xccHpB7lNtmjk229J/S1XrqoYPAwAAAAAAAAAAAADGh6IMgOLrYdLDPpv6nI46rSztS9p/7/39kIZDNBqNiqnCn4R/tPOj3FS2lrZreq/p6tJVxUgAAAAAAAAAAAAAYBwoygAops4/Ou+70ffBswfK0ruG94peKypaVVQ3lSJHnzP/1PyvD32tPFpGq9HO6DDj63Zfq50LAAAAAAAAAAAAAIo2ijIAiqNd13cN3DowNStVREy1pnM6z/mixRfqPkjmj0KjQvtt6Xcv8Z6y/LTZpwt8Fmg1WnVTAQAAAAAAAAAAAEDRRVEGQLGz/cr2gVsHZumyRKSsRdkt/bd0qt5J7VAvF5saO3DrwEO3DynL/vX7r+2z1kxrpm4qAAAAAAAAAAAAACiiKMoAKF42Xd40ZPuQbF22iNS0rbln8J5a5WqpHepVMnMy/QL9NoZvVJYDGwxc12cdz5UBAAAAAAAAAAAAgDzgn1oBFCP7b+3PbcnUsatz7P1jhbwlIyLmJubr+qwb1WKUstwYvvHj3R/TcQQAAAAAAAAAAACAPDCZOnWq2hkAwBDCosO6ruualp0mIvXt6x/2O1yxdEW1Q70WjUbjU9PnadrT0KhQETn/6Lxe9B2qdVA7FwAAAAAAAAAAAAAUMbx6CUCx8Cj5UfOlzR88eyAiVayrnProVEWrotGSyaXX64fvGL7q4ioR0Yhm24Btvev0VjsUAAAAAAAAAAAAABQlvHoJgPHT6XVDtg9RWjLWJaz3vre3yLVkRESj0fzc8+cuNbuIiF70fr/4XY65rHYoAAAAAAAAAAAAAChKKMoAMH7fHP/m8O3DImKiMdnaf2t9+/pqJ8ojU63p+nfX17StKSJJmUn9t/RPz05XOxQAAAAAAAAAAAAAFBkUZQAYuZP3T04/Nl2ZJ3lM8nL2UjfPWyprUTZwYKCVuZWIRMRETDoySe1EAAAAAAAAAAAAAFBkUJQBYMyydFkjdo3I0eeIiGc1z4ntJ6qdKB/Ut68/p/McZZ4XMu+3+7+pmwcAAAAAAAAAAAAAigqKMgCM2Xcnv7scc1lESpuXXt17tYnGRO1E+WOk+8h3arwjIjn6nI93f6w0gQAAAAAAAAAAAAAAr0ZRBoDRupd4b8axGco8o+OMymUqq5snH2k0mmU9l5U2Ly0i4U/CA84FqJ0IAAAAAAAAAAAAAIoAijIAjNb0Y9PTstNExL2i+2fNP1M7Tj5zKuM0od0EZZ5yZEpCeoK6eQAAAAAAAAAAAACg8KMoA8A43Yi7seriKmX+3vt7o3np0vPGtBxTzaaaiMSkxsw/NV/tOAAAAAAAAAAAAABQ2FGUAWCcph2dlq3LFpHOzp09qnqoHadAWJha/Kvjv5R5UeiilKwUdfMAAAAAAAAAAAAAQCFHUQaAEXqU/Gjz5c3K/E3Hb9QNU6AGNBhQo2wNEXma9nTpuaVqxwEAAAAAAAAAAACAQo2iDAAjFHAuIEuXJSIeVT2aV2qudpwCZKIxGdt6rDL/cPoHnV6nbh4AAAAAAAAAAAAAKMwoygAwNlm6rIBzAcr8afNP1Q1jAMNdh5ezLCcidxLuHLt7TO04AAAAAAAAAAAAAFB4UZQBYGwORh58mPRQRCqVrtSrTi+14xQ4C1OLQQ0HKfOqC6vUDQMAAAAAAAAAAAAAhRlFGQDGZmvEVmUY1HCQmdZM3TCG8b7r+8qw7cq21KxUVbMAAAAAAAAAAAAAQOFFUQaAUcnSZQVeDVTmvvX6qhvGYNwrute1qysiyZnJR+4cUTsOAAAAAAAAAAAAABRSFGUAGJXge8FxaXEiUsW6SnPH5mrHMZzutborQ9CNIHWTAAAAAAAAAAAAA
EChRVEGgFE5fve4MnRz6abRaNQNY0g+Lj7KEHSTogwAAAAAAAAAAAAAvBxFGQBGJbco075qe3WTGFibym1Km5cWkcj4yKikKLXjAAAAAAAAAAAAAEBhRFEGgPHI0mWdenBKmYtbUcbcxNzd0V2Zzz48q24YAAAAAAAAAAAAACicKMoAMB43426mZqWKSFXrqo6lHdWOY2hNHZsqw7mH59RNAgAAAAAAAAAAAACFE0UZAMbjSswVZahnX0/dJKpwr/jfJ8r8/vh3dZMAAAAAAAAAAAAAQOFEUQaA8YiIiVCGuvZ11U2iijp2dZThdvxtdZMAAAAAAAAAAAAAQOFEUQaA8YiMj1SG2uVqq5tEFVWsqyjDvcR76iYBAAAAAAAAAAAAgMKJogwA4/Ek5YkyOFg5/PHTjIyMkJCQDRs2nDx5UqfT3bt3b968ea/YLT09fdq0aX+8/uDBg4MHDx48ePDSpUvZ2dkv/e6pU6d27NjxOpmzs7PPnDmzYcOGY8eO/dlur8nW0ra0eWkRScpMik+Pf5utAAAAAAAAAAAAAMAoUZQBYDxiU2OVwa6k3QsfXbt2rX79+vPnz79+/frChQt9fHxiYmL27Nnzit30en1aWtofr+/YsePzzz/fsmXLuHHjGjZs+PTp0z/ec/ny5RMnTvxl4KioqCZNmsyYMeP69evLly9v2bLlX37l1XIbQrmdIQAAAAAAAAAAAABALlO1AwBAvnma9t/Oyh+LMkOGDBkzZsynn36qLG/fvh0XFycily5dOnjwoJubm4eHR3x8/N69ex88eFC7dm1fX19TU9MWLVokJCRcuHDBzs7u4MGDrq6unp6eItKuXbuffvpJRHx8fH755ZfBgwcHBwe/8847IvLo0aP79+/nnnvjxo39+/dbWFgMHDjQysoqJiZmy5YtSUlJderU8fX1HTlyZO/evadPn56bSkSePXu2ZcuWtLS0rl27Ojs7P3jwIDo6OjU19cyZM3//+9/j4uK2b9+enp7es2fPKlWqvPBjljQrqQypWan5+IsFAAAAAAAAAAAAAOPAE2UAGI/MnExlsDC1eP56TExMeHj4yJEjc69Ur15dRK5cubJs2TI7O7sRI0acOHEiNDQ0NjbWxcVl69atX375ZWpq6pgxY+7evTt48OClS5fa2dl9/PHHR44cyd1Er9c/e/bM0tLy6dOnX331lXLx3LlzixYtUubffvutf//+1tbWCQkJHTt2zMrK8vb2Tk1NrVWr1oULF7Kzsw8cODB69OjnU6Wnp7dp0+bRo0eWlpZeXl4REREhISE9e/YMDAy0tbWNiYnx8PBIS0uzsrLq0qXLgwcPXvgNUJQBAAAAAAAAAAAAgFfgiTIAjN+9e/ccHBxMTV/8i2dlZTVv3jyNRhMXF3fo0KGpU6c2btz4xo0b77333scffzx58mTltpIlS86fP1+j0SQkJBw6dKhixYp79+7t3LnzvXv3mjRp0q9fv+jo6JeeO3PmzPnz53t4eIjI+fPnjx8//uDBgxYtWrRt27Z3794PHjwwNTW1tbV9/iu7du2qXbv2xIkTRSQpKWnx4sUeHh61atX6/vvvRWT69OlDhw79/PPPRSQxMXHNmjUTJkx4/uuWZpYiUtq8tEY0+fKrAwAAAAAAAAAAAABjQlEGgPGrVKlSdHS0TqfTav/nMVqVK1fWaDQiUqZMmfv37//888/Lli3r0qWLhYXF06dPc29zcnLKve3mzZsi0rZt25kzZ545c2bChAmpqX/67JbIyMg5c+b8+OOPytLU1HTt2rXffPPNlStX3nvvvWnTpmVlZSUmJlpbW+d+5cGDBzVr1lRmFxeXo0ePikiNGjVyN7x27drZs2eVpbe39wsnpmeni0hSZpJe9G/8awIAAAAAAAAAAAAAY0dRBoDxMNOaKUNGTsbz1x0cHGrUqLFmzRo/Pz/lykufAbNixYoVK1bUqVPn5s2b06ZNe8VBZcqUcXZ2dnZ2Pnv27LRp06ZMm
ZKQkKB8FBkZmXubs7PzyJEje/To8fx333nnnfj4+A4dOvTu3bt9+/YBAQG5r22Kjo6uWrXqsWPHlOWVK1eqVasmIrn9Hmdn58qVK8+YMePPguW+cSn3HUwAAAAAAAAAAAAAgFwUZQAYj3Ily92KvyUisamxLrYuz3+0Zs2aHj16nDx5sn79+teuXbty5cqcOXNe+Hrjxo1nzJjRuXPn7du3//E9TS81fvz4unXrfvnll05OTmPHjnV0dAwMDKxevbry6dSpU4cNG3bjxg0bG5uQkJBRo0bNmTPH09MzISEhNTXV2dn5p59+8vb2vnTpUtOmTW/fvn306NHTp0/PnDlz3Lhxjo6OS5Ys2bdv34ULF3KP++yzzzw9PfV6fa1atSIiItq1a9etW7fn81CUAQAAAAAAAAAAAIBX0Oj1vJ4DgJHovr77nht7RGTnoJ09avV44dOkpKQTJ07cvn27evXqnTt3Tk5ODgsL8/DwEJE7d+4kJibWq1dv27ZtmZmZXbp0CQkJ6dat25EjR5o1a3bhwgVPT08RuXv3bnx8vJWVVWJioru7u7JtcHCwo6NjuXLltmzZUqFCBTc3t6ioKAcHh6SkpPr16z9+/PjAgQPJycmurq7NmjU7efLkpUuXLC0tu3XrVqFCBRFJS0s7ceLEjRs3KlWq5O3tbWlpmZaWtnPnzuTkZB8fH0dHxwcPHkRHR+cel5qaunfv3sePH9esWdPT09PCwuL5n9Fmlk1iRqKIxHwVY1fSrkB/2wAAAAAAAAAAAABQ5FCUAWA83g98f9XFVSIS0CPA381f7TiGlpCeUHZ2WREpZVYq+etkteMAAAAAAAAAAAAAQKGjVTsAAOQb57LOynDj6Q11k6jibuJdZahqU1XdJAAAAAAAAAAAAABQOFGUAWA86tnXU4aImAh1k6ji+tPrylDNppqqQQAAAAAAAAAAAACgkKIoA8B41LWvqwxXYq+om0QV5x6eUwZXB1d1kwAAAAAAAAAAAABA4URRBoDxcLF1sTC1EJHb8bejU6LVjmNoZx+eVQb3iu7qJgEAAAAAAAAAAACAwomiDADjYW5i3qJSCxHRi/743eNqxzGobF12blGmqWNTdcMAAAAAAAAAAAAAQOFEUQaAUWlXtZ0ynLh7Qt0kBnbqwanEjEQRqWJdpYp1FbXjAAAAAAAAAAAAAEBhRFEGgFFpX7W9Muy9sVfdJAYWdDNIGXxq+qibBAAAAAAAAAAAAAAKLYoyAIxK+6rtrUtYi8it+FvnH51XO47h7L6+Wxl8XCjKAAAAAAAAAAAAAMDLUZQBYFRKmJToWbunMm+N2KpuGIMJiw4Liw4TEUtTy07VO6kdBwAAAAAAAAAAAAAKKYoyAIxN33p9lWH9pfU5+hx1wxjGqourlKFXnV5W5lbqhgEAAAAAAAAAAACAQouiDABj413Tu3yp8iJyN/Fu7guJjFhmTua6sHXK7Ofqp24YAAAAAAAAAAAAACjMKMoAMDYlTEp85PaRMi8+s1jdMAawNmxtdEq0iDiVcfJy9lI7DgAAAAAAAAAAAAAUXhRlABihke4jTTQmInLg1oELjy+oHacA6fS6OSfnKPNnzT9TfmoAAAAAAAAAAAAAwEtRlAFghKpYV+ldt7eI6EU/+chkteMUoMCrgVdjr4qIdQnrT5p+onYcAAAAAAAAAAAAACjUKMoAME5TPadqNVoR2XV91+mo02rHKRBZuqwJhyYo8yfNPilTooy6eQAAAAAAAAAAAACgkKMoA8A41bevP6jBIGX++/6/6/Q6dfMUhB9Df7z+9LqIlLUoO7bVWLXjAAAAAAAAAAAAAEBhR1EGgNGa6jnV3MRcRE7eP/nz+Z/VjpPPolOipx+brsyTPCbZlbRTNw8AAAAAAAAAAAAAFH4UZQAYrZq2Nce1GafM4w+Oj06JVjdP/hq5a2R8eryI1CpX69Nmn6odBwAAAAAAAAAAAACKAIoyAIzZ1+2+drF1EZH49PjhgcP1e
r3aifLH6ourd1zbISIa0Szutlh5cA4AAAAAAAAAAAAA4NUoygAwZhamFv/p/h+tRisiQTeDvg/5Xu1E+eBG3I1RQaOU+W/N/tapeid18wAAAAAAAAAAAABAUUFRBoCR61i941etv1Lmrw99ffL+SXXzvKWkzKTeG3snZiSKSE3bmrM7z1Y7EQAAAAAAAAAAAAAUGRRlABi/GR1ntHJqJSJZuqxeG3vdjLupdqI80ul17we+fznmsohYmlpu6ruplFkptUMBAAAAAAAAAAAAQJFBUQaA8TPTmm3ou6F8qfIiEpMa0219t6dpT9UOlRdf7Pti+5XtyhzQI8Ctopu6eQAAAAAAAAAAAACgaKEoA6BYqGpddeegnZamliJy/en1Lmu7xKfHqx3qzfzz8D8XhS5S5jEtxwxpNETdPAAAAAAAAAAAAABQ5FCUAVBctKjUYm2ftVqNVkTOPjzbaVWnIvRcmclHJs88MVOZBzUY9N0736mbBwAAAAAAAAAAAACKIo1er1c7AwAYzrLfl43YNUKn14lIw/INdw/eXcW6itqhXiVHn/O3PX8LOBegLHvU6rFtwDYzrZm6qQAAAAAAAAAAAACgKKIoA6DYWXVx1Yc7PszR54iIg5XDLwN+aenUUu1QL5eUmTR0+9Ad13YoS5+aPtsHbLcwtVA3FQAAAAAAAAAAAAAUURRlABRH6y+tH75jeGZOpohYmFr82PXHD5p8oHaoF4U/Ce+7ue+1p9eU5dBGQ5f5LuNZMgAAAAAAAAAAAACQZxRlABRTJ+6d6LOpT2xqrLLsV6/ff7r/x9bSVt1UCr1ev+z3ZaP3jU7JSlGu/KPNP2Z1mqXRaNQNBgAAAAAAAAAAAABFGkUZAMVXZHxkzw09L8dcVpZOZZwWdV3kW9tX9VQjdo04dPuQsrQyt/q5588D6g9QNxUAAAAAAAAAAAAAGAGKMgCKtdSs1LG/jv3p7E96+e8fQ5+aPj/4/OBi62L4MClZKXND5s4Onp37IJl69vW29d9Wx66O4cMAAAAAAAAAAAAAgPGhKAMAsuv6ro92fvQk5YmyNDcx/6DJB+Pbjq9qXdUwAbJ0WcvOL5t+bPqj5EfKFVOt6d9b/X2q51RLU0vDZAAAAAAAAAAAAAAAo0dRBgBEROLS4iYenhhwLiBHn6NcMTcxH9po6KgWoxpVaFSg5wacC1gUuigqKSr3YuMKjZf5LnOv6F5w5wIAAAAAAAAAAABAMURRBgD+6+nTpx4DPS5Xviz/+xyZFpVa+Lv7v1v3XRsLm/w6K0efczDy4IZLG7ZGbM190ZKIOJVxmuY5zc/Vz0Rjkl9nAQAAAAAAAAAAAAAUFGUAQETk8ePH3t7eYWFhIqKpoan+fvXInMjnbzDTmnlU8+hZu6eXs1edcnU0Gk0eTnmS8uTw7cMHIw/uvr47OiX6fz5LkspRlS8EXLAtbfsWPwcAAAAAAAAAAAAA4E9RlAEAuXPnTufOnW/evCkiJiYmS5Ys8ff3P3HvxOIzi3+58ktGTsYL95e1KNvSqaVbRTeXci4uti7OZZ1tLW3NTcyfv0ev10enREenRN9LvHf5yeULjy+ERYddjb2qlxf/6mqiNfqTegkXyZHu3bsHBgaamPA4GQAAAAAAAAAAAADIfxRlABR3p0+f9vX1jY6OFhFzc/M1a9b0798/99PY1NjVF1dvurzpTNSZP3ZcnmdpalmmRBmNRpORnSEiyZnJWbqsV9zvVMZpQP0BgxsOjjgUMWzYsNy/xmPHjv3uu+/y4QcDAAAAAAAAAAAAAPwvijIAirXNmze///77aWlpIlKyZMlt27Z16dLlpXeu27luyIwhUlM0VTT6knn8y2luYt7KqVUn505ezl4tKrXQarTK9SlTpkyfPj33toULF3722Wd5OwIAAAAAAAAAAAAA8GcoygAopvR6/b/+9a/Jkycrfwbt7e0DAwNbt279Z/d36tTp8OHDIjJmzJhPJ316+sHpiJiIW/G3bsbdv
JtwNzEjMTMn84Wv2JW0s7Owuxp6VeLELM7s0LpD7pXdS5qVfGmYwYMHb9y4UVlqNJr169cPHDgw335aAAAAAAAAAAAAAABFGQDFU2Zmpr+//+rVq5Wli4vL7t27a9Wq9Wf3X7x4sUmTJnq93tTU9ObNm1WrVv3jPWnZaYnpiXrRW5haiEhJs5IlTEqISP369SMiIkTkwIEDXl5er4jk6+u7b98+ZWliYrJz586uXbu+xU8JAAAAAAAAAAAAAPgfWrUDAIChxcTEeHl55bZkvLy8QkNDX9GSEZFZs2YptcIBAwa8tCUjIpamlg5WDhWtKpa1KFvWoqzSklH2V4ZDhw694ghzc/Pt27e3bdtWWebk5PTu3fv48eNv8pMBAAAAAAAAAAAAAF6FogyA4iU0NNTd3f3EiRPKcsSIEXv37rWxsXnFV+7cubN161ZlHjt27Jue2KlTJ2U4ePDgq++0tLTct29fixYtlGVmZmaXLl3OnTv3picCAAAAAAAAAAAAAF6KogyAYmT16tWenp73798XEY1GM2XKlJ9++snMzOzV35ozZ052draIeHl5NWnS5E0P9fT0NDU1FZHz58/Hxsa++uZSpUoFBQXVrVtXWaalpXXq1OnKlStveigAAAAAAAAAAAAA4I8oygAoFjIyMkaMGOHn55eWliYitra2e/funTp16l9+MTo6esWKFco8YcKEPBxdpkwZ5SExOp3uwIEDf3l/2bJljx496uzsrCwTExPbtGlz7dq1PBwNAAAAAAAAAAAAAHgeRRkAxu/+/fvt27dfunSpsnR1dT1z5kyXLl1e57vff/+90q1p3rx5x44d8xbA29tbGfbv3/8695cvX/748eNOTk7KMj4+vlWrVjdv3szb6QAAAAAAAAAAAAAABUUZAEZuz549TZo0CQ0NVZZ+fn4nT57MfV7Lq8XFxf3nP/9R5okTJ+Y5Q24pZ//+/Xq9/nW+UqlSpbNnz9aoUUNZxsfHN2vW7NatW3nOAAAAAAAAAAAAAACgKAPAaGVmZo4dO7ZHjx5Pnz4VEXNz88WLF69cudLS0vI1d1i4cGFSUpKINGzYsHv37nlO4u7uXr58eRF5/PhxWFjYa36rQoUKZ86cqVu3rrJMSEho2rTpnTt38hwDAAAAAAAAAAAAAIo5ijIAjFNkZGTbtm3nzp2rPMGlcuXKx44d++STT15/h2fPnv3www/KPGHCBI1Gk+cwWq3Wy8tLmfft2/f6XyxbtmxISEiDBg2UZUJCQuPGjXmuDAAAAAAAAAAAAADkDUUZAEZo+/btTZs2PXPmjLLs0aPH77//3rJlyzfaZMGCBfHx8SLi4uLSr1+/t4zk7e2tDHv37n2jL1pbW4eEhLi6uirLZ8+eubq6Xr169S3zAAAAAAAAAAAAAEAxpFGetQAAxiEtLe2LL75YunSpsjQ3N589e/YXX3zxps+DSU5Orv+MSsQAACAASURBVF69emxsrIisXr166NChbxksJibGwcFBp9OZmprGxMTY2Ni80dfT0tI8PT1DQ0OVpYWFxfPtGQAAAAAAAAAAAADA6+CJMgCMx6VLl1q2bJnbkqlWrdqxY8dGjx6dh7cmLVy4UGnJ1KhRY9CgQW+fzd7evmnTpiKSnZ3966+/vunXLS0tT5w4kfv+pvT09ObNm584ceLtgwEAAAAAAAAAAABA8UFRBoAxyMnJmTVrVtOmTcPCwpQrgwYNunjx4pu+bkmRnJw8d+5cZZ44caKpqWm+hOzatasyvOnblxTm5ua//vrr8OHDlWVWVlaHDh127dqVL9kAAAAAAAAAAAAAoDigKAOgyLt7927Hjh0nTJiQmZkpIqVLl162bNn69evLlCmTtw1zHyfj7Ow8ZMiQ/MrZrVs3Zdi7d69Op8vDDhqNZvny5ePHj1eWOTk5vXr1WrVqVX4lBAAAAAAAAAAAAADjRlEGQNG2ZcuWJk2aHD9+XFk2b9783LlzH3zwQZ43TE5OnjdvnjLn4+NkR
MTNzc3BwUFEYmJizpw5k+d9/v3vf8+aNUt5n5ROpxs+fPjs2bPzKyQAAAAAAAAAAAAAGDGKMgCKqri4uIEDB/bv3z8+Pl5ETE1Np02b9ttvv7m4uLzNtvPmzYuJiRGRGjVqDB06NH+yioiIVqv18fFR5rd8ZdK4ceNWr16t1WpFRK/Xjx8//m26QQAAAAAAAAAAAABQTFCUAVAk7dmzp0GDBps2bVKWLi4uwcHBkydPfssHwCQmJs6fP1+Z3363P+rRo4cyvGVRRkSGDBmye/fu3IQrVqzw8vLKysp6y20BAAAAAAAAAAAAwIhRlAFQxDx79uyjjz7q3r37o0ePlCv+/v6///57ixYt3n7z+fPnx8XFiUitWrXee++9t9/wBe+8846FhYWIhIWF3blz5y138/HxOX36tLKhiBw6dKh+/frK43AAAAAAAAAAAAAAAH9EUQZAURIcHOzm5rZs2TJlWaFChR07dgQEBJQqVertN4+Li5s3b54yT5o0ycTE5O33fEGpUqU8PT2Vec+ePW+/oZubW0REhLW1tbK8ceNGvXr1IiIi3n5nAAAAAAAAAAAAADA+FGUAFA2pqamjRo1q3779rVu3lCuDBg2KiIjo2bNnfh3x3XffJSYmikitWrUGDRqUX9u+IPftS7t3786XDatXr37jxg0nJydlGRsb26RJk/zaHAAAAAAAAAAAAACMiUav16udAQD+wsmTJz/44INr164py7Jlyy5YsGDIkCH5eERsbKyzs3NSUpKIbN68uV+/fvm4+fPu3btXrVo1vV5fokSJmJiY0qVL58u2ycnJ7dq1u3DhgrLUaDQzZ84cN26cRqPJl/0BAAAAAAAAAAAAwAjwRBkAhVpqauqYMWPatWuX25Lx9fW9cuVK/rZkROTf//630pJp2LDhu+++m7+bP69KlSqurq4ikpGRsX///vza1srK6rfffmvbtq2y1Ov1EyZMGDx4cGpqan4dAQAAAAAAAAAAAABFHUUZAIVXcHCwq6vr/PnzdTqdiNjY2KxatSowMLBChQr5e9CjR4+WLFmizDNmzNBqC/ZvY+7ronbu3JmP25YsWfLw4cO+vr65VzZu3NiqVas7d+7k4ykAAAAAAAAAAAAAUHRRlAFQGKWlpY0fP97T0/PGjRvKlS5duly6dGnYsGEFcdzMmTPT0tJEpFmzZrktloKTe8SePXuys7PzcWczM7Pt27d/9NFHuVfCwsLc3d0PHDiQj6cAAAAAAAAAAAAAQBFFUQZAoRMcHNy4cePZs2fn5OSIiI2NzcqVK4OCgpycnAriuHv37i1dulSZZ8yYodFoCuKU57m5uVWrVk1E4uLigoOD83dzrVYbEBAwZcqU3CtxcXE+Pj6zZ8/W6/X5exYAAAAAAAAAAAAAFC0UZQAUIqmpqaNHj/bw8Mh9kEy3bt3Cw8P9/PwK7tAZM2ZkZGSISJs2bby9vQvuoOd169ZNGfL37UsKjUYzderU+fPn55Z+cnJyxo8f/95776WkpOT7cQAAAAAAAAAAAABQVGh4wACAQuLYsWMffvjhrVu3lKWNjc28efPef//9Aj30zp07tWvXzszMFJHDhw936NChQI/L9euvvyqlHGdn59wfOd8tXbr0k08+UR7Mo2jQoMG2bdtq1apVQCcCAAAAAAAAAAAAQGHGE2UAqC8tLW38+PEdO3bMrYx06dIlLCysoFsyIjJ58mSlJdOpUyeDtWREpEOHDjY2NiISGRkZFhZWQKf4+/tv2LDB3Nw890p4eHizZs1++eWXAjoRAAAAAAAAAAAAAAozijIAVHbkyJEGDRrMnj1bp9OJSNmyZVetWhUUFFS5cuWCPvrKlSvr169X5hkzZhT0cc8zMzPz8fFR5sDAwII7qF+/fjt37ixVqlTulWfPnr377rtffPFFVlZWwZ0LAAAAAAAAAAAAAIUQRRkAqklNTR0/fryXl1dkZKRypWvXrmFhYcOGDTNMgKlTpyqvJeratWurVq0Mc
2guX19fZdixY0eBHuTt7X348OFy5crlXtHr9QsWLPDy8nr06FGBHg0AAAAAAAAAAAAAhYpGr9ernQFAcXTkyJEPP/zw9u3bytLW1vaHH34YMmSIwQKEh4c3btxYeYzN6dOnmzdvbrCjFcnJyfb29unp6SISGRlZvXr1Aj3u4sWL3t7e0dHRz190dHTcvHlzmzZtCvRoAAAAAAAAAAAAACgkeKIMAENLTk7+7LPPOnXqlNuS6dWr1+XLlw3ZkhGRSZMmKS2ZXr16Gb4lIyJWVlaenp7KvHv37oI+rnHjxiEhITVq1Hj+4sOHDz09PadOnar8KgAAAAAAAAAAAADAuFGUAWBQwcHBbm5uP/74o/I4Kxsbm59++umXX35xcHAwZIzz588rLzzSarXTpk0z5NHP69WrlzIEBgYa4Ljq1asfOXKkTp06z1/Mzs6eNm1ar1694uPjDZABAAAAAAAAAAAAAFTEq5cAGEhqauq4ceNyKzIi0qtXryVLlhi4IqPo2bPnrl27RKR///6bNm0yfABFdHS0o6OjTqczMTF5/PixnZ2dAQ6NiYnx9vb+/fffX7heo0aNzZs3u7m5GSADAAAAAAAAAAAAAKiCJ8oAMISTJ0+6urouWrRIacmUK1du3bp1hn+QjOLcuXPKq460Wu3EiRMNHyBXhQoVlLc+5eTk7N271zCH2tvbHz58uHXr1i9cv3XrVuvWrX/44QfDxAAAAAAAAAAAAAAAw6MoA6BgZWRkjB8/vn379jdu3FCu+Pr6hoeHDx48WK1IkyZNUvo6AwYMaNiwoVoxFL6+vsqgvArKMGxsbPbv39+xY8fcKyVKlBCRjIyM0aNHDxkyJCUlxWBhAAAAAAAAAAAAAMBgePUSgAJ06dKlYcOGXbhwQVlaW1t/++23I0aMUDHS2bNnmzdvrtfrTUxMLl26VLduXRXDiMjVq1eVDKVKlYqJibG0tDTY0enp6X379t2zZ4+ytLOzi42NVeZ69ept2bKlXr16BgsDAAAAAAAAAAAAAAbAE2UAFIjs7OwZM2a4u7vntmTeeeed8PBwdVsyIjJ58mSlIDhw4EDVWzIiUqdOnTp16ohISkrKwYMHDXm0hYXFL7/80q9fP2UZGxtbu3ZtZY6IiGjevPmaNWsMmQcAAAAAAAAAAAAAChpFGQD57/bt2x06dJg8eXJWVpaIWFpazpo1KygoyMnJSd1goaGhQUFBImJiYjJ58mR1w+Tq1auXMhjy7UsKMzOzDRs2+Pn5Kctr1661bNmyZMmSIpKSkjJs2LARI0akpaUZOBUAAAAAAAAAAAAAFBCKMgDy2erVqxs1ahQcHKwsW7VqdfHixXHjxmm16v/BmT59ujIMGjSoVq1a6obJ5evrqwy7du3Kyckx8OkmJibLly8fPny4sjx16pSHh0f9+vWV5dKlS5s2bRoREWHgVAAAAAAAAAAAAABQEDTKK0gA4O3FxMT4+/vnPhbF3Nx8xowZX375ZWGoyIjI77//7u7urtfrTUxMwsPDlRceFQZ6vb5y5cpRUVEicuLEibZt2xo+g06n+/jjj5cuXaos+/XrV7p06eXLlyvL0qVLBwQEDBw40PDBAAAAAAAAAAAAACAfFYp/vQZgBA4cOODq6prbkqlbt25ISMg//vGPQtKSEZFp06Yp1cD+/fsXnpaMiGg0mu7duyuz4d++pNBqtT/99NPf/vY3Zblly5aMjIwlS5ZYWFiISFJS0qBBg0aNGpWZmalKPAAAAAAAAAAAAADIF4XlH7ABFF0ZGRmjRo3y9vZ++PChiGg0ms8///zcuXNubm5qR/s/YWFhO3fuFBGtVjtx4kS147wo9+1LgYGBamXQaDSLFi36/PPPleW6detOnToVHBxcs2ZN5crChQvbtWt39+5dtRICAAAAAAAAAAAAwFvi1UsA3sr169cHDBhw4cIFZVmxYsUVK1Z4e3vn+0EpT1LibsbF34pPT0zPSMxQ/lNENCaaEmVKiEip8qWsK
liVqVzGysGqbPWyWrP/KQIOGjRo48aNItKvX7/Nmzfne7y3lJGRYW9vn5SUJCKXL1+uV6+eWkn0ev3nn3/+448/Ksvhw4fPnTvX399/69atyhVbW9vVq1d369ZNrYQAAAAAAAAAAAAAkGcUZQDk3Zo1a/72t78lJycry969ewcEBNjZ2b39znq9Pu563INTD+6H3H945mHczbiMZxmv/3UTcxP7evblG5Z3aOxQuU3ldNv0uvXrZmdni8jZs2fd3d3fPmG+69+//5YtW0Rk5syZEyZMUDGJXq8fOXLk0qVLleXIkSOXLFmydOnSzz//XHn1kvLQoO+++87MzEzFnAAAAAAAAAAAAADwpijKAMiLtLS08ePHL1iwQFlaWFjMmjXriy++eMttM5Mzb+2/dXXH1ZtBN1NjU9865n/pzHXXM69HSqRjR8dfDv2SX9vmr3Xr1g0ZMkREWrZsGRISom4YnU7n7++/fPlyZTl27Njvvvvu7Nmz/fv3v337tnKxffv2GzZscHR0VC8mAAAAAAAAAAAAALwZijIA3tjFixcHDhx49epVZVmnTp2NGzc2btw4zxvqsnXXd13/fcXvkQcis9OzX3qPhY1F2RplbWvalrQraWFtUcK6hIWNhfLdzKRMvU6fHJ2c/Cg56WFS4v3ExLuJL91Eo9VUaVel4eCG9frWs7S1zHPggpCQkFC+fPmsrCytVvvgwYOKFSuqm0en0w0fPnz16tXK8ptvvvnnP//59OnTYcOG7d27V7no4OCwfv36Dh06qBcTAAAAAAAAAAAAAN4ARRkAb2bt2rUjR45MTf3v416GDh26ePFiKyurvO2WcDvh3NJzF1ZeSH6U/MJHJe1LOrV0cmrpVLlV5fINy5e0K/n622YkZkRfin5y6UlUaNTtw7cT773YmzEpYdJgYIOWo1s6uDrkLXlB6Nix45EjR0QkICDA399f7TiSk5MzePDgzZs3K8t58+aNHj1ar9cvWLDgq6++ysrKEhETE5OJEydOnjxZq9WqGhYAAAAAAAAAAAAA/hpFGQCvKzMzc/To0UuWLFGWpUuXXrx4sfK2oDyIuxF3/F/HL627pMvWPX/dwdWhds/atX1rOzRx0Gg0bxv6/58VeTDy6o6rkQcj9Tn/80evesfqrb9sXdOnZr4c9JZ++OGH0aNHi0j37t137dqldhwRkczMTF9f33379omIRqNZvnz5+++/LyJHjhwZPHjw48ePldt69eq1cuVKa2trFaMCAAAAAAAAAAAAwF+iKAPgtURFRfXt2/fUqVPKskGDBtu2batVq1Yetoq/FX906tFLGy4931kpXal0kw+auL7vWta5bP4kfpmUJymXN10OWxcWdTrq+etVPap2/rZzpeaVCu7o1xEZGVmjRg0RsbS0jI2NLVnyDR6iU3CSk5M7d+6s/FdvZma2Y8cOHx8fEXn06NHAgQOPHz+u3Obi4rJ169ZGjRqpmRUAAAAAAAAAAAAAXomiDIC/dvz48QEDBuQ+PmTgwIE///xzqVKl3nSfrNSs4H8Hn/zuZHZ6du5FZy/nFqNauHR10Zjkz/NjXkdUaNSpeacitkb83/NsNFKvb73OszvbVLcxWIw/atCgweXLl0Vk586dPXr0UDHJ8+Li4jw8PMLDw0XEysrq+PHjTZo0EZHs7OyJEyd+++23yv+VWFhY/Pjjjx988IHKcQEAAAAAAAAAAADgT1CUAfAX5s2b949//CM7O1tEzMzMvv32W+X1QG/qauDVfaP3Jd5NzL1S06emxyQPp1ZO+Zb1DT27/+zkdyfP/udsTmaOcsWslFmnf3Vq/nlzjdZwrZ3nTZgwYdasWSLi7+8fEBCgSoaXevToUevWre/cuSMijo6Op06dqly5svLRzp07/fz8EhISlOWIESMWLlxobm6uVlQAAAAAAAAAAAAA+DMUZQD8qaysrE8//XTp0qXK0sHBYfPmze3atXvTfTKeZQR9HnRx9cXcK04tnbrM71Kph
cqvOlLER8Yf/ufh8E3h8v//HFZuXbnnzz3t6toZPszJkyfbtGkjIhUrVoyKitJo1OnrvNSVK1fatGkTHx8vIvXq1fvtt99sbP779J1r16716dMnIiJCWbZo0WLr1q1OTqpVoAAAAAAAAAAAAADgpSjKAHi5+Pj4fv36HTp0SFm2adNm8+bNjo6Ob7rP/ZP3tw/ZnnD7v48bKVW+lNcsr8bvNy5UFRARiQqN2uW/KzosWlmalTTrtrhbY7/GBo6Rk5NTsWLFmJgYEQkNDW3WrJmBA7za8ePH33nnnYyMDBHx9PTcv39/7pNjkpOT/f39N27cqCwrVKiwZcuWPNSqAAAAAAAAAAAAAKDgmEydOlXtDAAKncjISC8vr9DQUGU5cODA7du3ly1b9k33CZkbsm3wtvS4dGXZ2K/x4D2DnVo5FbaWjIiUqVTG7SM3ran2/sn7+hy9Lkt3NfBq0sOkGp1raE21Bouh1WovX7588eJFEXF0dPT09DTY0a+jatWq1apVCwwMFJE7d+7ExMR0795d+cjc3Lxv3762traHDh3S6XQpKSlr164tV65cYev6AAAAAAAAAAAAACjOKMoAeFFISEjnzp3v3r0rIhqNZsqUKQsXLjQzM3ujTXIyc/Z8vCd4VrDyPiOLsha9V/du9892phamBZE5X2hMNNU8qtXtU/fu8bspT1JE5NH5RzeDbtbqUatE6RIGi5GZmbl161YRSU1N9ff3N9i5r6lRo0YmJiZHjhwRkXPnzjk6Orq7u+d+2qJFiw4dOgQFBSUnJ+t0ur179966dcvHx+dN//cDAAAAAAAAAAAAAAWBVy8B+B+bNm3y8/NT3q1jaWm5atWqfv36vekmaXFpm/psunvsrrKs3KZy3419yziVyeesBSYrJWv3x7vD1oYpS+uq1kP3Dy1Xu5xhTk9MTLS3t8/KytJqtQ8fPqxQoYJhzn0jQ4YMWbdunYiYm5sfPXq0VatWz38aFRX17rvvnj59Wlm6ublt3769atWqKgQFAAAAAAAAAAAAgOcY7n0iAAq/pUuXvvfee0pLxsHB4ejRo3loyaTGpK7quCq3JdPYr7HfIb8i1JIREbNSZr3X9O62uJvWTCsiiXcTl7ddHhUaZZjTra2tW7duLSI6nW7fvn2GOfRN/fzzz02bNhWRzMzMPn36REX9zy+nUqVKR48e9fPzU5bnz59v0aJFcHCwCkEBAAAAAAAAAAAA4DkUZQD815w5c0aOHJmTkyMi9evXP336dPPmzd90k5TolJUdVkZfjBYRjVbjNcur18peJiVM8j9uwWv6SdNBOweZlTITkdTY1NWdVt8/ed8wR3ft2lUZ9u7da5gT35SFhcW2bdvs7e1F5PHjx3379lX6Vc/fsHLlygULFigvXYqOju7UqdOKFSvUiQsAAAAAAAAAAAAAIsKrlwAoZs+ePX78eGVu2rRpUFCQnZ3dm26SEp2y0nNl7NVYEdGYaHqt7NVoSKN8DmpwUaFR67utT41NFRELGwu/I34Org4Ffejly5cbNGggIjY2NjExMaampgV9Yt4cPnzY29s7OztbRMaMGTN37tw/3hMcHNyvX7/Hjx8ry1GjRs2dO9fEpEh2pwAAAAAAAAAAAAAUdTxRBiju9Hr9mDFjclsyHh4ehw4dykNLJisla3339UpLRmuq7bO2jxG0ZESkUvNKHwR/YOVgJSLpCelrvdc+vfa0oA+tX79+1apVRSQhIeHUqVMFfVyedezYcfbs2co8f/78/fv3//Getm3bnj59unHjxspywYIFvXv3TkpKMlxKAAAAAAAAAAAAAPj/KMoAxZper//ggw/mz5+vLHv27Llv374yZcq88T45+m2Dtz08+1BEtKbavhv7NhjYIJ+zqqdc7XJDfx1qaWspIilPUta8syblSUpBH+rt7a0ML22fFB5jxozp2bOniOj1+mHDhkVHR//xnipVqvz222+9evVSlrt27WrTps2dO3cMmRMAAAAAAAAAA
AAAhKIMUMyNGjVq5cqVyjxkyJBt27ZZWFjkYZ/9f99/bec1Ze66qGvdd+vmV8JConzD8u8FvWde2lxEEu8lbum3RZelK9ATc4sy+/btK9CD3pJGo/n5558rVqwoIk+ePBk+fPhL3+hXqlSp7du3T5kyRVleunSpWbNmx44dM2hWAAAAAAAAAAAAAMUeRRmg+Pr6668XLVqkzB999NGqVatMTU3zsE/4xvDTC04rc5txbdxHuudbxMKkUvNK/Tb305hoROTu8bv7/16wD3rx8vIyMzMTkfPnzz958qRAz3pL9vb2K1eu1Gg0IhIUFLR48eKX3qbRaKZOnbpq1aoSJUqISGxsrLe398aNGw2aFQAAAAAAAAAAAEDxZjJ16lS1MwBQwbx58yZNmqTM77777qpVq0xMTPKwT9zNuA09NuRk5IhIvX71uv+nu1KYMEq2NW1NS5hGHowUkajQKNuathUaVSigs0qUKHHgwIF79+7p9XpXV9dGjRoV0EH5okaNGs+ePQsJCfl/7N1XQFTX2gbgb88MMDD03nsVFRERBRQVVMRYYy8xtmjUaKzRJGo8pmhijDFGJerR2EvEXkDE3kUFEVCqgPQOwwBT9n+x+QlHjS3AoL7P1bf3rL3WO0Pxgs+1iOjChQujR4/W1dV97kgPD4/u3bsfP35cLBbL5fKwsDBNTU1fX9/mzQsAAAAAAAAAAAAAAAAA7yk0ygC83STFkuJHxUWPivJj8/Ni80rTS8syymoraolIRaTyTz0r69evnzVrFlf3799///79b7aXjLxGvitkV2l6KRHpO+mPPjFaoPYm87xFrPysChMKCx4UEFH6ufS2Y9qqaas10Vo5OTlRUVFEpKWlNXDgwCZapbF069bt6NGjeXl5Uqk0KSlp1KhR/zTS2tp66NChkZGRBQUFRBQREVFSUtK7d+93uMUKAAAAAAAAAAAAAAAAAFoIhmVZZWcAgNdQ8aQi/UJ61vWs7FvZRY+KJMWSfxopUBcYtTIy9TC18LGwD7LXs9fj7u/fv3/EiBHcz35QUNCxY8eEQuGbhYn6OurSd5eIiK/Gn3Rtkqmn6ZvN83aRiqUb220sTi4mIodeDqNPj26iDo9bt2517NiRiCwsLLKysppiicZ169atzp07y+VyIjpw4MCQIUNeMLi0tHTgwIEXLlzgLgcOHLh79251dfXmCAoAAAAAAAAAAAAAAAAA7ys0ygC8HUrTSmN3xj48+jA7Opve6KdWz16v1ZBWKu1VQiaEVFVVEZGvr29ERIRIJHqzSAXxBaGeofJaORH1Wdun42cd32yet1HmlcytAVtZOUtEfTf07TC1Q1OsIpfLjY2Ni4uLiSgxMdHFxaUpVmlcM2bM+P3334nI1NQ0ISHhnw5g4tTW1n788cd79uzhLjt16nTs2DFDQ8PmCAoAAAAAAAAAAAAAAAAA7yU0ygC0aCzLPjr66Nb6W6mRqaziOT+tKiIVPXs9dT11NR01gVAgFUtlNTJxnrgyt7KqsOq5c+ZS7nW6XuNcc/XG1Rf3Mbw42J/d/nx88TERWftbf3zx4/ft3Jwz889cXXWViIS6ws+SPtMw1GiKVQYPHnzo0CEiWrdu3fTp05tiicZVXl7u5uaWnZ1NRNOnT1+3bt2LxysUivnz569evZq7dHNzO3XqlI2NTZMHBQAAAAAAAAAAAAAAAID3EhplAFoolmUTDiZcXH4xLzav4X2+Kt+mq42Vn5VlJ0vTdqaappr/NIOkWJIXk5d7LzctKi39QnptRW3DV4VGwsBlge0nteep8N4gXsyfMYc/PkxEPBXe1LtTjdyN3mCSt5qsWrbRY2PRoyIi8p7uHbIupClW+f3332fMmEFEgwYNCgsLa4olGt1ff/01dOhQIhIIBHFxca+yEc6aNWvmzp2rUCiIyMrKKjIy0tnZucmDAgAAAAAAAAAAAAAAAMD7B40yAC1RXkzeyRknMy5n1N9h+Ixjb0ePjzwcgx3VdNRed0KFV
JFyJiVuT1zCoQSpWFp/38DZoPcvvZ1CnF5rNnmN/Dfn38oyyojIf6F/4A+Br5vn3fDw6MO9A/YSEU/Amxoz1ahV43cLPXz40NXVlYh0dXULCwv5fH6jL9EUevXqdebMGSIaOnTo/v37X+WRAwcOfPTRR9XV1URkamoaERHRpk2bpk0JAAAAAAAAAAAAAAAAAO8fNMoAtCzyWnnU11HXVl9j5XU/m6qaqt7TvL2ne+tY6/z7+atLqqP/iL7x242KJxX1N9uMbhO8JvjVDw+6+dvNUzNPEZHIRDQrZZaKSOXfB3tLbQ/annY2jYic+zmPPDqyKZawsrLKzcrVJ/2tq7eaappWl1XXlNfIa+VEJFATqIhUNE00RSYibUttfUd9gVDQFBleV3R0UOxyNgAAIABJREFUtLe3N8uyqozq0Y1HDVnD4uRiSYmkpryGiFTUVbQstHRtdE3ampi2MxWo12WOiorq37+/WCwmIgMDg9OnT3fo0EGZbwMAAAAAAAAAAAAAAAAA3jlolAFoQQoTCg+OPph7N5e75KvyO33eyW+Bn7qBeuMuJKuW3Vx38/IPlyXFEu6OlrnWkL1DrLtYv/RZaZV0rcPaytxKIgpeE+wzy6dxs71d8mLzQj1DWQVLDE29N9WkrUmjTKuQKZ7ceJJ1PSvzaub98Pt8MZ8h5qVPMXzGwNnApI2JZWdL+yB7I3cjhnn5U02hMrdyXu95NbE1VmTFpxftgiMQCqz8rNwGubUa2kpkLLp161ZwcHBxcTERaWpqHjlypEePHs2VGgAAAAAAAAAAAAAAAADefWiUAWgpkk4mHRx5kNtyg4jse9qHrAsxcDZouhUlRZLwOeEx22O4S56A1/PHnp1md3rxU9fXXA+fHU5E2lbanz36rIVsYaJEB4YeiP8rnojajG4zeOfgfzOVVCx9dOLRwyMPk04lVZdU/8tgmqaajsGOrUe0tg+yZ/jN1DGTey/30veXEg8nKqSK13qQr8p3H+buO983W57du3fvgoICItLQ0Dh06FCvXr2aJiwAAAAAAAAAAAAAAAAAvHfQKAPQItz87ebp2ae545YE6oKeP/b0nu7dPNuBJJ9KPvzxYXG+mLv0menT+5feDO/5S7Msu85lXXFSMRH13dC3w1ScjEPZt7I3ddxERDwB77Okz3Rtdd9gkpw7OXc23bm/+359p1RDjIARWYhEViINEw2BpkBVS5WnwiMieY1cViWrLqqW5EskuZLKzEp6XneKyETUZmQb7+ne+o76b5DtFZVnlUfMi3iw/wE1/FeFISM3I3NvcwMnA01TTTVtNSKqrawtzyovfFiYeze3IL7gf94pj2k7tq3VeKt+I/vl5OQQkZqaWlhYWEhISNMlBwAAAAAAAAAAAAAAAID3BxplAJTvysorkQsjuVrXVnfk0ZHGbYybM0DFk4oDww5kXs3kLtuMajNo+6Dn7kGSfDp5V59dRCTUE87JmqOiodKcOVus7UHb086mEZHvPN+eP/V8rWdTIlIuLr+YcTnjqfsa5hpG3kYG7QwMPAy07LVeZUsYebW8PLm8JL6k4GZBwc2CmpL/6blheIxLf5fOcztb+7/8gK3Xdfe/d09/frq2orb+jk1XG4+PPJz7OYuMRS94sDKnMvFIYuzO2MwrmfU3hXpCzy89J/82OSMjg4iEQuGJEydwBhMAAAAAAAAAAAAAAAAA/HtolAFQsoZdMla+VsMPDX9xY0ETkdfID3106MH+B9yl5wTPfpv7PbulzZ5+ex4df0REned07vUzDsSpk3QiafcHu4lI01RzduZsnoD3Kk+ln08/u+hs1vWshje17LSsQqzMupnpur7JzjT1WAVbmlCaeSoz81Rmdf7/nOLk1NcpaEWQcevGacaSVcuOTjp6f9f9umuGWn3YquviriZtTV5rnuzb2ReWXeC+uzjOI52XXF+SnJZMRJqamuHh4b6+vo2SGQAAAAAAAAAAAAAAAADeW2iUAVCm+7vuh40N4
46qsethN/LoSBWR0vZoYRXsqZmnbv1+i7t8dnMUcZ74Z4ufWTnL8JgZD2c06Tk+bxeFTPGL9S+VOZVENOr4KKe+Ti8eX/GkImJeRNzeuPo7PBWeZW9L+6H2Bu0NGjcbq2ALbhQk7UjKvZxbfygSw2c8J3j2/LGnUFf4byavLq3eFbIr61pdr49RK6MPNn5g3eXNd6xJO5t2bMqxkpQS7tLc3/zHxz8mZyYTkY6OTmRkZIcOOO0LAAAAAAAAAAAAAAAAAN4c/5tvvlF2BoD3VOaVzH0f7mNlLBHZ9bAbdXyUck8yYhjGsY9jxZOKnDs5RJR5NVPfQd/E4+99QWJ3xHIbfth0tek8p7PSgrY8DI8R54m5s6vkUrn7UPcXDL698fbeAXu5D5mI+Gp8+2H2nVZ1shloo2Gm0fjZGEZkJbLua20VbCWrkpUnlRNLxFLOnZyY7TH6DvqGroZvNnN1afX2oO3ZN7O5y/aT2484NELPQe/fpNWz12s/sX3p49L8+/lEVJFR0cOxRxwbV1ZZVlNTc+jQob59+xobN+vBZAAAAAAAAAAAAAAAAADwLnml80EAoNFJiiR/jfhLXiMnIuPWxsPDhgvUBcoORQzDfLDxA7fBbtzlsU+O1fdzEFH8X/Fc0WpIKyWEa9najWvHFUknkmTVsueOEeeL9/Tfc+LTE7WVtUREDFmFWAWfDG73ZTt1U/WmTqhlp9Xh2w5BfwWZdjHl7lTmVO4btO/wuMPSKunrziavle//cH9OdA4RMTwm+Nfgfn/0a5TvYRWRyuCdgwO/DySGiKjwTuEix0VGBkZEVFhY2KNHj8TExH+/CgAAAAAAAAAAAAAAAAC8n9AoA6Achz8+XJ5VTkQaRhqjToxS01FTdqI6DJ8Z+OdA4zbGRCSrlh0ae4hr+6gqqHp88TERMTymvpMG6hm5Gxm6GRKRtEqafj792QFZ17I2ttv46Ngj7lLbUTtga0DHlR2Fxv/q8KPXpe2k7bfez3etr7pxXWtOzPaYzT6bix4WvdY8EXMj0qLSiIgY6repn89Mn8bN6b/Iv8+vfbg673Le6r6rtbW1iSg/P79Pnz55eXmNuxwAAAAAAAAAAAAAAAAAvCfQKAOgBPd33efOMCKGBm4bqGOto+xE/0NVU3XEoRGqWqpEVBBfcG7xOSJKjUxVyBREZOVrpWWupeSILZJTHyeuSD6V/NRL93fd/7PHn5U5lUREDDmOdQzcF2jo9YZnHv17Zt3Neh7uaTPAhrvMj8vf1HFTSkTKKz7+8MjDm+tucnXg94GeEzybImTHzzp2/borV6fsSNm5fKdIJCKi9PT0AQMGSCSSplgUAAAAAAAAAAAAAAAAAN5taJQBaG7VJdXhc8O52memj1OIk3LzPJeeg16vVb24+vqa6/lx+dx2MkRk39NeeblaNMc+jlyRfPp/GmUuLr8YNjaM25hHVU+1S2gXjwUePFUl//pV0VLp8G2HDt924KnxiKimvGZPvz1xe+Je+mBNec2J6Se4utWQVv4L/ZsuZLdl3Zz6OhERsZT6S+qB3QcEAgER3bhxY9y4cSzLNt3SAAAAAAAAAAAAAAAAAPBOQqMMQHO7sPyCOE9MRNpW2j2+7aHsOP+o/eT2doF2RKSQKSLmRtQ3yth0tVFqrpbLpouNikiFiIoeFXFfYiKK+jrq3JJzxBIRaTtqB+4JNO5srMSQT7EZYNNjdw8Ncw0iktfKw8aE3Vp/68WPXPr+UsWTCiLSNNPst6lfk8ZjeMyALQM0DDWIqDS9VBQr2rBhA/fSgQMHFi9e3KSrAwAAAAAAAAAAAAAAAMC7B40yAM2q4knF7Q23uTp4TbCqpqpy87wAwzDBa4J5Ah4RpUSkFCQUEBFflW/R0ULZ0VoovhrfzNOMq7NvZxPR2UVnL313ibtj4mvSbUc3DQsNpeX7BzrOOt12dNN20iYiVsGenHHy3rZ7/zRYnCe++VvdoUu9f+4t1BU2dTyRiajHd3X9ZFdXX
ABAURCUAQAAAAAAAACgKAjKAAAAAAAAAABQFP4/rzKTfj/a+X4AAAAASUVORK5CYII=" />

---
# What does ChainRulesCore need?

A way to specify what the rule is for a given method: i.e. function + argument types.

This is done by overloading `frule` and `rrule`:

`rrule(::typeof(foo), args...; kws...)`

`frule((ṡelf, ȧrgs...), ::typeof(foo), args...; kws...)`

---
# What does a `frule` need?

We know we are going to need to compute the primal, so we need the primal inputs.

What else do we need to allow us to propagate the directional derivative forwards?

We need the directional derivative that is being pushed forward.

```julia
function frule((ṡelf, ȧrgs...), ::typeof(foo), args...; kwargs...)
    # ... compute the primal y and the pushed-forward derivative ẏ
    return y, ẏ
end
```

.funfact[
We say that the **pushforward is fused into the frule**. This is required for efficient custom rules e.g. for ODE solvers.
]

---
# What does an `rrule` need?

Primal inputs again, but what else do we need to propagate the gradient backwards?

We need the gradient of the function called after this one.

That's a problem: we don't have that on the forward pass, so we need to return something to put on the tape for the backwards pass. The **pullback**.

```julia
function rrule(::typeof(foo), args...; kwargs...)
    y = ...  # compute the primal
    function foo_pullback(ȳ)
        # ... compute the differentials s̄elf and ārgs from ȳ
        return s̄elf, ārgs...
    end
    return y, foo_pullback
end
```

---
# What do we need to represent the types of derivatives?

.row[
.col[
**Primal** <br>
`Float64` <br>
`Matrix{Float64}` <br>
`String` <br>
```julia
struct Foo
    a::Matrix{Float64}
    b::String
end
```
]
.col[
**Differential**<br>
`Float64`<br>
`Matrix{Float64}`<br>
`DoesNotExist` <br>
```julia
Composite{Foo}
# With properties:
a::Matrix{Float64}
b::DoesNotExist
```
]
]

.funfact[
There are multiple correct differentials for many types, and which to use is context dependent.
]

---
# What do differential types need?

Basically they are elements of vector spaces.

Roughly speaking, **every differential represents the difference between two primals**.
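
One way to make this concrete is with Julia's `Dates` standard library, where the difference of two `DateTime` primals is a `Millisecond`. The sketch below uses only stdlib calls; the variable names are illustrative assumptions.

```julia
using Dates

# Two primals of the same type.
t1 = DateTime(2020, 7, 29, 12, 0, 0)
t2 = DateTime(2020, 7, 29, 12, 0, 1)

# Their difference is a differential, and its type differs from the primal type.
Δt = t2 - t1

# Differentials behave like vectors: they can be added and scaled ...
twice = Δt + Δt
scaled = 3 * Δt

# ... and adding a differential to a primal gives back a primal.
t3 = t1 + Δt
```

Here the differential supports addition and scaling even though there is no sensible way to add two `DateTime`s to each other.
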

.funfact[
The differential for `DateTime` is `Period` (e.g. `Millisecond`). It is my favorite example of a differential for a primal that is *not* a vector space.
]

---
# What do differential types need? `zero`

They need a **zero**, since the two primals being differenced could be equal, e.g. when the function being differentiated is a constant.

.funfact[
There is thus the trivial differential, `Zero()`, which can be added to anything without changing it. It is a valid differential for all primals.
]

---
# What do differential types need? `+`

They need to be able to be added to each other.

For $u = \sin(x)$, $v = \cos(x)$, $y = u + v$

$$ \dfrac{\partial y}{\partial x} = \dfrac{\partial u}{\partial x} + \dfrac{\partial v}{\partial x} $$

.funfact[
An advantage of ChainRules' differential types over Zygote's use of `NamedTuple`s (ChainRules' `Composite`) and `Nothing` (ChainRules' `AbstractZero`) is that they actually overload `+`.
]

---
# What do differential types need to be useful for gradient-based optimization?

Vanilla Gradient Descent: $ x \leftarrow x + 0.1 \tilde x $

So we need to be able to **add to a primal** and **multiply by a scalar**.

Adding to a primal is the inverse of the differential being a difference of primals. Multiplying by a scalar is natural to define from limits of additions.

.funfact[
This is also useful for **difference**-based (gradient-free) optimization such as **particle swarms** and the **Nelder–Mead method**. Further, it is planned to switch FiniteDifferences.jl to use differentials, rather than converting to and from vectors.
]

---
<br> <br>
.row[ .col[ # How is the world now? <br> .image-30[] ] ]

---
# How many AD systems do we have?

.row[
.col[
**Reverse Mode:**
* AutoGrad
* Nabla
* ReverseDiff
* Tracker
* Yota
* Zygote
]
.col[
**Forward Mode:**
* ForwardDiff
* ForwardDiff2
* Zygote
]
]

.unfunfact[
Julia suffers from the LISP Curse. It is too easy to make an AD.
]

---
# What Rules exist:

* Nabla.jl has ~300 rules
* Zygote.jl has ~500 rules
* ChainRules.jl has ~200 rules so far
* DiffRules.jl has ~50 rules

<br>
.funfact[The intersection of Zygote's and Nabla's rules is not much more than what both have from DiffRules.]
<br>
.unfunfact[A great way to get a lot of custom rules written is to throw errors (the *Zygote Strategy*)]

---
# ChainRules vs DiffRules:

**ChainRules** is the successor to **DiffRules**

* **DiffRules** only handles *scalar rules*
* Any rule defined in **DiffRules** can be defined using the `@scalar_rule` macro in **ChainRules** without change.
* **DiffRules** is *not designed* to have its list of rules extended by other packages. **ChainRulesCore** is.

---
# ChainRules vs ZygoteRules:

* **ChainRulesCore** and **ZygoteRules** are very similar.
* ChainRules wasn't quite ready when ZygoteRules was created.
* ChainRules is not Zygote-specific; it works with everything.

.col[<br> .image-30[  ] ]
.col[
### ZygoteRules is effectively deprecated, and all new rules should be written using ChainRulesCore
]

---
<br> <br>
.row[ .col[ # The Future <br> .image-30[] ] ]

---
## Deeper Integration into Zygote.

* Use in Forward Mode
* Use ChainRules' differential types
  * `nothing` -> `AbstractZero`
  * `NamedTuple` -> `Composite`

<br>
* Convenience macro for easily translating ZygoteRules

.col[.image-30[  ] ]

---
# Better support for Overloading based AD

* Need to improve support for generating overloads from rules.
* Will also solve inference related issues.

### 🔜 ReverseDiff.jl
### 🔜 Nabla.jl

---
## ForwardDiff 🤷 *maybe*

It's so stable.

ForwardDiff2 might take its place.

.funfact[
ForwardDiff was released on 13 April 2013. Julia v0.2 was released 31 weeks later, on 19 November 2013, and included such features as Pkg2, keyword arguments, and suffixing mutating functions with `!`.
]

---
## Calling back into AD

```julia
# `unzip` is assumed to be a helper that turns a collection of tuples
# into a tuple of collections; it is not provided by Base.
function rrule(::typeof(map), f, x)
    res = map(xi->rrule(f, xi), x)
    ys, pullbacks = unzip(res)
    function map_pullback(ȳ)
        s̄elf, x̄ = unzip(map(pullbacks, ȳ) do pullback_i, ȳi
            pullback_i(ȳi)
        end)
        return NO_FIELDS, s̄elf, x̄
    end
    return ys, map_pullback
end
```

**But this calls `rrule(f, xi)`, which might not be defined**. We may need to use an AD to find it.

We could hard-code a given AD, but you probably want to keep using the one you were already using when you called `rrule(map, f, x)`.

---
# Rules Everywhere

Just as **TimeZones.jl** depends on **RecipesBase.jl** to make `ZonedDateTime`s plot-able, packages like **DiffEqBase** depend on **ChainRulesCore.jl** and provide rules to make their functions differentiable, where required or where there are smart domain-knowledge ways to make it faster.

The future is more packages doing that.

---
# How to get involved

* Write rules for Base and StdLibs in ChainRules
* Write rules for your package with ChainRulesCore
* Incorporate ChainRules support into your favourite AD

.funfact[There are 500 rules in Zygote that need migrating.]

---
# Summary

* AD needs rules
* There will always be more AD systems.
* One set of rules to rule them all

.row[ .col[ <br> .image-30[] ] ]
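
---
# Appendix: rules by hand

A minimal, package-free sketch of the two rule shapes described in this talk, applied to `sin` followed by `asin`. The names `toy_frule` and `toy_rrule` are hypothetical stand-ins invented for this illustration and are not the ChainRulesCore API; the differential for the function itself (`ṡelf` / `s̄elf`) is left out for brevity.

```julia
# Hypothetical forward rules: take the input derivative, return the primal
# result together with the pushed-forward derivative.
toy_frule(ẋ, ::typeof(sin), x) = (sin(x), cos(x) * ẋ)
toy_frule(u̇, ::typeof(asin), u) = (asin(u), u̇ / sqrt(1 - u^2))

# Hypothetical reverse rules: return the primal result together with a
# pullback closure that is called later, on the backwards pass.
function toy_rrule(::typeof(sin), x)
    sin_pullback(ȳ) = ȳ * cos(x)
    return sin(x), sin_pullback
end

function toy_rrule(::typeof(asin), u)
    asin_pullback(ȳ) = ȳ / sqrt(1 - u^2)
    return asin(u), asin_pullback
end

x = π / 4

# Forward mode: derivatives travel alongside the primal computation.
u, u̇ = toy_frule(1.0, sin, x)
v, v̇ = toy_frule(u̇, asin, u)

# Reverse mode: collect the pullbacks first, then compose them backwards.
u, pb_sin = toy_rrule(sin, x)
v, pb_asin = toy_rrule(asin, u)
x̄ = pb_sin(pb_asin(1.0))
```

Both modes should agree that the derivative of `asin(sin(x))` at `π/4` is approximately `1.0`. The difference is only in when the derivative work happens.
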