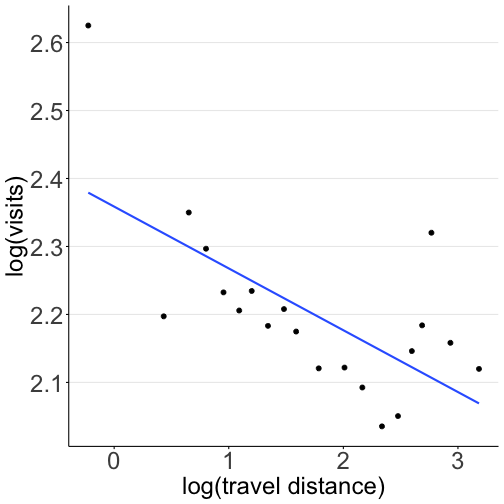

class: center, middle, inverse, title-slide .title[ # Lecture 10 ] .subtitle[ ## Travel cost method ] .author[ ### Ivan Rudik ] .date[ ### AEM 4510 ] --- exclude: true ```r if (!require("pacman")) install.packages("pacman") pacman::p_load( tidyverse, magrittr, xaringanExtra, rlang, patchwork, broom, viridis, fixest, maps, tigris, sf, vembedr ) set.seed(1) options(htmltools.dir.version = FALSE) knitr::opts_hooks$set(fig.callout = function(options) { if (options$fig.callout) { options$echo <- FALSE } knitr::opts_chunk$set(echo = TRUE, fig.align="center") options }) red_pink <- "#e64173" blue <- "#3C93DC" red <- "#ff0000" ``` ``` ## Warning: 'xaringanExtra::style_panelset' is deprecated. ## Use 'style_panelset_tabs' instead. ## See help("Deprecated") ``` ``` ## Warning in style_panelset_tabs(...): The arguments to `syle_panelset()` changed in xaringanExtra 0.1.0. Please refer to the documentation to update your slides. ``` ```r red_pink <- "#e64173" # A blank theme for ggplot theme_empty <- theme_minimal() + theme( legend.position = "none", title = element_text(size = 24), axis.text.x = element_text(size = 24), axis.text.y = element_text(size = 24, color = "#ffffff"), axis.title.x = element_text(size = 24), axis.title.y = element_text(size = 24), panel.grid.minor.x = element_blank(), panel.grid.major.y = element_blank(), panel.grid.minor.y = element_blank(), panel.grid.major.x = element_blank(), panel.background = element_rect(fill = "#ffffff", colour = NA), plot.background = element_rect(fill = "#ffffff", colour = NA), axis.line = element_line(colour = "black"), axis.ticks = element_line(), ) theme_blank <- theme_minimal() + theme( legend.position = "none", title = element_text(size = 24), axis.text.x = element_blank(), axis.text.y = element_blank(), axis.title.x = element_blank(), axis.title.y = element_blank(), panel.grid.minor.x = element_blank(), panel.grid.major.y = element_blank(), panel.grid.minor.y = element_blank(), panel.grid.major.x = element_blank(), panel.background = element_rect(fill = "#ffffff", colour = NA), plot.background = element_rect(fill = "#ffffff", colour = NA), axis.line = element_blank(), axis.ticks = element_blank(), ) theme_regular <- theme_minimal() + theme( legend.position = "none", title = element_text(size = 14), axis.text.x = element_text(size = 24), axis.text.y = element_text(size = 24), axis.title.x = element_text(size = 24), axis.title.y = element_text(size = 24), panel.grid.minor.x = element_blank(), panel.grid.minor.y = element_blank(), panel.grid.major.x = element_blank(), axis.ticks = element_line(), axis.line = element_line(), panel.background = element_rect(fill = "#ffffff", colour = NA), plot.background = element_rect(fill = "#ffffff", colour = NA) ) ``` ```r # initialize seed and data set.seed(1000) num_trips <- 100 trip_data <- expand.grid( house_num = seq(from = 1, to = num_trips), site = 1:26 ) # make fake dataset trip_data <- trip_data %>% as_tibble() %>% mutate( trips = sample(0:8, num_trips * 26, replace = TRUE), income = exp(rnorm(num_trips * 26)) * 3000 + 50000 + rnorm(num_trips * 2) * 15000, income = ifelse(income < 10000, 10000, income), travel_cost = exp(rnorm(num_trips * 26)) * 30, travel_cost_other = exp(rnorm(num_trips * 26)) * 35, trips = round(-.01 * travel_cost + .01 * travel_cost_other + 3 / 100000 * income), water_clarity = runif(num_trips * 26) ) %>% group_by(site) %>% mutate(water_clarity = mean(water_clarity)) %>% ungroup() %>% mutate(trips = case_when( trips <= 0 ~ 0, TRUE ~ trips + 3 * round(water_clarity) )) ``` --- # Roadmap - How do we estimate the value of recreational goods? --- class: inverse, center, middle name: background # Background <html><div style='float:left'></div><hr color='#EB811B' size=1px width=796px></html> --- # Should we separate the Great Lakes and Mississippi? <div class="vembedr" align="center"> <div> <iframe src="https://www.youtube.com/embed/lIRXDDG6yB8" width="533" height="300" frameborder="0" allowfullscreen="" data-external="1"></iframe> </div> </div> --- # Should we separate the Great Lakes and Mississippi? .center[ <img src="files/12-carp1.png" width="70%" /> ] --- # Should we separate the Great Lakes and Mississippi? .center[ <img src="files/12-carp2.png" width="50%" /> ] --- # Should we separate the Great Lakes and Mississippi? Benefits from barriers accrue to anglers in the Great Lakes, both commercial and recreational Costs come from cost of building the barriers plus cost of maintaining them, plus costs of reduced shipping (if any), plus any other costs associated with the barriers How do we figure out the benefits from recreational anglers? --- # Why do we need travel cost? Recreational areas .hi[have value] -- Their quality also has value -- Not placing a value on recreation is essentially giving it a value of .hi-purple[zero] -- This is likely inappropriate -- If someone dumped toxic waste in Taughannock does that have zero cost? --- # What is the travel cost method? The travel cost method uses observable data on recreation visitation to infer the recreational value of environmental amenities -- The central idea is that the time and travel cost expenses that people incur to visit a site represent the .hi[price] of access to the site -- This means that people's WTP to visit can be estimated based on the number of visits they make to sites of different prices -- This gives us a demand curve for sites/amenities, so we can value changes in these environmental amenities --- # Hotelling After WWII, the U.S. national park service solicited advice from economists on methods for quantifying the value of specific park properties -- Would total entrance fee that people pay measure the value? -- .hi-red[No!] -- Harold Hotelling proposed the first indirect method for measuring the demand of a non-market good in 1947 --- # Hotelling > Let concentric zones be defined around each park so that the cost of travel to the park from all points in one of these zones is approximately constant. The persons entering the park in a year, or a suitable chosen sample of them, are to be listed according to the zone from which they came. The fact that they come means that the service of the park is at least worth the cost, and this cost can probably be estimated with fair accuracy. --- # Hotelling > A comparison of the cost of coming from a zone with the number of people who do come from it, together with a count of the population of the zone, enables us to plot one point for each zone on a demand curve for the service of the park. By a judicious process of fitting, it should be possible to get a good enough approximation to this demand curve to provide, through integration, a measure of consumers’ surplus.. -- About twelve years after, Trice and Wood (1958) and Clawson (1959) independently implemented the methodology --- # Theoretical foundation How can we use observed data to tell us something about willingness to pay? -- Consider a single consumer and a single recreation site -- The consumer has: - Total number of recreation trips: x, to site of quality: q - Total budget of time: T - Working time: H - Non-recreation, non-work time: l - Hourly wage: w - Money cost of reaching the site: c - Expenditures on other market goods: z --- # Theoretical foundation This lets us write down the consumer's utility maximization problem: `$$\max_{x,z,l} U(x,z,l,q) \,\,\,\, \text{subject to: }\,\,\, \underbrace{wH = cx + z}_{\text{money budget}}, \,\, \underbrace{T = H + l + tx}_{\text{time budget}}$$` -- Multiply the time budget by `\(w\)` and substitute the money budget in: -- `$$\max_{x,z,l} U(x,z,l,q) \,\,\,\, \text{subject to: }\,\,\, \underbrace{wT = (c+wt)x +z + wl}_{\text{combined money/time budget}}$$` Where now we have one constraint on the dollar value of time --- # Theoretical foundation `$$\max_{x,z,l} U(x,z,l,q) \,\,\,\, \text{subject to: }\,\,\, \underbrace{wT = (c+wt)x + z + wl}_{\text{combined money/time budget}}$$` `\(wT\)` is the consumer's .hi[full income], their money value of total time budget `\(c+wt\)` is the consumer's .hi[full price], their total cost to reach the site `\(z\)` is their consumption of other goods `\(wl\)` is the opportunity cost of non-recreation site leisure --- # Theoretical foundation Let `\(Y = wT\)` be the consumer's .hi[full income], their money value of total time budget -- Let `\(p = c+wt\)` be the consumer's .hi[full price], their total cost to reach the site Then we can write the problem as: -- `$$\max_{x,z,l} U(x,z,l,q) \,\,\,\, \text{subject to: }\,\,\, \underbrace{Y = z + px + wl}_{\text{combined budget}}$$` -- Solve the constraint for `\(z\)` and substitute into the utility function... --- # Theoretical foundation `$$\max_{x,l} U\left(x,Y-px-wl,l,q\right)$$` Choose trips `\(x\)` and leisure `\(l\)`, this implies an amount of money left over -- This has first-order conditions: `$$[x] \,\,\, U_x - pU_z = 0 \rightarrow \frac{U_x}{U_z} = p$$` -- and `$$[l] \,\,\, -wU_z + U_l = 0 \rightarrow \frac{U_l}{U_z} = w$$` --- # Theoretical foundation `\(\frac{U_x}{U_z} = p\)` tells us the consumer equates the marginal rate of substitution between recreational trips and consumption to be the full price of the recreational trip -- What does this mean? -- .hi[The value of the marginal recreational trip to the consumer, in dollar terms, is revealed by the full price p] --- # Theoretical foundation `$$U_x - pU_z = 0 \qquad -wU_z + U_l = 0$$` The above FOCs are two equations, the consumer had two choices (x,l) so we had two unknowns -- If we know the functional form of `\(U\)` we can use the FOCs to solve for x (and l) as a function of the parameters (p,Y,q): `$$x = f(p,Y,q)$$` -- This is simply the consumer's .hi-blue[demand curves] for recreation as a function of the full price p, full budget Y, and quality q --- # Theoretical foundation `$$x = f(p,Y,q)$$` If we observe consumers going to sites of different full prices `\(p_1,p_2,\dots,p_n\)`, we are moving up and down their recreation demand curve -- This lets us trace out the demand curve -- Changing Y or q shifts the demand curve in or out: these are income and quasi-price effects -- Once we have it, we can compute surplus! --- # Zonal (single-site) model Here's the most basic travel cost model to start -- - Construct distance zones (i) as concentric circles emanating from the recreation site - Travel costs from all points within each zone to the site are sufficiently close in magnitude to justify neglecting the differences -- - From a sample of visitors `\((v_i)\)` at the recreation site, determine zones of origin and their populations `\((n_i)\)` -- - Calculate the per capita visitation rates for each zone of origin `\((t_i = (v_i/n_i))\)` --- # Zonal (single-site) model - Construct a travel cost measure `\((tc_i)\)` that reflects the round-trip costs of travel from the zone of origin to the recreation site (time and gas), + an entry fee `\((fee)\)` which may be zero and does not vary across zones -- - Collect relevant socioeconomic data `\((s_i)\)` such as income and education for each distance zone -- - Use statistical methods to estimate the trip demand curve: the relationship between per-capita visitation rates, cost per visit, [and travel costs to other sites `\((tc_{si})\)`] controlling for socioeconomic differences -- - `\(t_i = g(tc_i + fee; tc_{si}, s_i) + \varepsilon_i\)` where `\(g\)` can be linear --- # Zonal (single-site) model Here's a simple example of a set of zones 1-5: <img src="11-slides-travel-cost_files/figure-html/sample2 scatter-1.svg" style="display: block; margin: auto;" /> --- # Zonal (single-site) model Suppose we have the following data: ``` ## # A tibble: 5 × 5 ## zone dist pop cost vpp ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 A 2 10000 20 15 ## 2 B 30 10000 30 13 ## 3 C 90 20000 65 6 ## 4 D 140 10000 80 3 ## 5 E 150 10000 90 1 ``` If we plot cost by visits per person, we have a measure of the demand curve... --- # Zonal (single-site) model .pull-left[ This is a very simple example where it happens to be an exactly straight line, most likely the data won't be this perfect The line is simply: `$$t_i = \beta_0 + \beta_1 tc_i$$` where `\(\beta_0\)` is the intercept and `\(\beta_1\)` is the slope ] .pull-right[ <img src="11-slides-travel-cost_files/figure-html/zonal example-1.svg" style="display: block; margin: auto;" /> ] --- # Zonal (single-site) model .pull-left[ The data will most likely look like this, but even this is probably too clean It ignores things like income, other sites, other household characteristics For now, we'd continue by fitting a line through the points (OLS/regression) ] .pull-right[ <img src="11-slides-travel-cost_files/figure-html/zonal example 2-1.svg" style="display: block; margin: auto;" /> ] --- # Zonal (single-site) model Based on the estimate model coefficients, construct the (inverse) demand curve -- .hi[For each zone:] predict total visitation given various fees -- Entry fee on the y-axis (price), and the number of predicted total visits on the x-axis (quantity) -- The demand curve is different for different zone because different social economic variables -- The (use) value of the park/site to each zone is given by the area underneath the corresponding demand curve --- # Issues with the single-site model What are some potential issues and concerns with this approach? -- It ignores non-use value (automatically zero for non-users) -- What are the right zones to choose? -- What is the right functional form for demand? -- How do we measure the opportunity cost of time? -- How do we treat multi-purpose trips? -- How do we value particular site attributes? Can't disentangle them at a single site --- # Multi-site model To value particular site attributes we need to have multiple sites (with different attributes!) -- We can answer questions like: -- What is the benefit of a fish restocking program? - Need to know the value of fish catch rate for visitors -- What is the benefit of water clarity? -- What is the benefit of tree replanting? --- # Multi-site model Suppose we have a dataset with a large number of individuals and sites -- Individuals are given by `\(i=1,\dots,N\)` and sites are given by `\(j=1,\dots,J\)` -- We observe the number of times each individual visited each site -- The multi-site model works as follows --- # Multi-site model .hi[Step 1:] Do the single-site estimation for each site: `$$t_{ij} = \beta_{0j} + \beta_{1j} tc_{ij} + \beta_{2j} tc_{sij} + \beta_{3j} s_i + \varepsilon_{ij}$$` -- .hi[Step 2:] Recover all the `\(\beta\)`s from each step 1 regression so that we have a set of J `\(\beta_{0j}\)`s for `\(j=1\dots,J\)`, `\(\beta_{1j}\)`s for `\(j=1\dots,J\)`, etc -- These `\(\beta\)`s tell us the slope `\((\beta_{1j})\)` and intercept `\((\beta_{0j}, \beta_{2j}, \beta_{3j})\)` -- `\(\beta_{2j}, \beta_{3j}\)` capture how the cost of substitute sites and household characteristics matter: they shift demand up and down --- # Multi-site model .hi[Step 3:] Take each set of `\(J\)` coefficient estimates and use them as the dependent variable in a regression on site attributes `\(z\)`: `$$\hat{\beta}_{0j} = \alpha_{00} + \alpha_{01}z_j + \epsilon_{0j}$$` `$$\hat{\beta}_{1j} = \alpha_{10} + \alpha_{11}z_j + \epsilon_{1j}$$` `$$\hat{\beta}_{2j} = \alpha_{20} + \alpha_{21}z_j + \epsilon_{2j}$$` `$$\hat{\beta}_{3j} = \alpha_{30} + \alpha_{31}z_j + \epsilon_{3j}$$` The `\(\alpha_{\times1}\)` coefficients tell us how the demand curve shifts `\((\alpha_{00}, \alpha_{02}, \alpha_{03})\)` or rotates `\((\alpha_{01})\)` as we change site attribute `\(z\)` --- # Valuing attributes with a multi-site model .pull-left[ If we improve the quality of a site from z<sub>1</sub> to z<sub>2</sub>, demand for that site shifts up The gain in CS, holding the cost fixed, is given by the blue area Once we estimate demand curves, we can see how welfare changes when we alter quality characteristics! ] .pull-right[ <img src="11-slides-travel-cost_files/figure-html/multi site example-1.svg" style="display: block; margin: auto;" /> ] --- # Multi-site example ```r trip_data ``` ``` ## # A tibble: 2,600 × 7 ## house_num site trips income travel_cost travel_cost_other water_clarity ## <int> <int> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 1 1 4 40450. 38.9 16.4 0.506 ## 2 2 1 5 60304. 29.8 37.5 0.506 ## 3 3 1 5 66681. 42.2 67.2 0.506 ## 4 4 1 5 52886. 11.0 51.3 0.506 ## 5 5 1 5 69282. 15.7 7.72 0.506 ## 6 6 1 5 36948. 4.30 48.0 0.506 ## 7 7 1 6 60866. 5.31 91.0 0.506 ## 8 8 1 5 35557. 65.0 161. 0.506 ## 9 9 1 5 64880. 14.5 24.3 0.506 ## 10 10 1 4 38491. 13.6 26.5 0.506 ## # … with 2,590 more rows ``` --- # First stage estimation ``` ## # A tibble: 26 × 5 ## intercept own_price cross_price income site ## <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 2.99 -0.0161 0.0106 0.0000321 1 ## 2 2.45 -0.0117 0.0101 0.0000397 2 ## 3 2.37 -0.0197 0.0111 0.0000450 3 ## 4 2.33 -0.0187 0.0119 0.0000438 4 ## 5 2.05 -0.0143 0.0139 0.0000450 5 ## 6 -0.236 -0.00668 0.00972 0.0000321 6 ## 7 2.67 -0.0210 0.0118 0.0000395 7 ## 8 -0.346 -0.00395 0.00987 0.0000324 8 ## 9 2.98 -0.0133 0.0107 0.0000315 9 ## 10 -0.103 -0.00943 0.0105 0.0000302 10 ## # … with 16 more rows ``` --- # Second stage ``` ## Joining, by = "site" ``` ``` ## # A tibble: 4 × 3 ## term estimate coeff ## <chr> <dbl> <chr> ## 1 water_clarity 48.0 intercept ## 2 water_clarity -0.171 own_price ## 3 water_clarity 0.0241 cross_price ## 4 water_clarity 0.000165 income ``` The estimates column tells us how a change in water clarity (from 0 to 100%), shifts or rotates our demand curve Clearer water `\(\rightarrow\)` more demand, more responsive to price, attracts higher-income people more --- # Real world data: central park Standard travel cost method is costly -- Need to survey households -- This takes time and money -- What alternatives do we have? --- # Mobility data from cell phones .hi[Cell phones] track where people live, go, etc -- We can use these data to do the travel cost method -- Same data used by NYT, WaPo, etc for COVID analysis of restaurants, etc -- Here we will be looking at visits to central park --- # Mobility data from cell phones ``` ## # A tibble: 22,972 × 13 ## visitor_cbgs year month location_name latitude longitude scaled_visits visits travel_distance_km travel_time_minutes visits_per_person median_age median…¹ ## <dbl> <dbl> <dbl> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 340030032003 2018 8 Harlem Meer 40.8 -74.0 34.8 4 22.9 29.3 0.0286 44.9 NA ## 2 340030032003 2018 8 Harlem Meer 40.8 -74.0 69.5 8 22.9 29.3 0.0571 44.9 NA ## 3 340030032003 2018 8 Harlem Meer 40.8 -74.0 34.8 4 22.9 29.3 0.0286 44.9 NA ## 4 340030034011 2018 11 Diana Ross Playground 40.8 -74.0 59.8 5 25.3 29.4 0.0510 39.9 107353 ## 5 340030034011 2019 8 Diana Ross Playground 40.8 -74.0 46 4 25.3 29.4 0.0392 39.9 107353 ## 6 340030034011 2019 11 Central Park 40.8 -74.0 92.9 8 25.3 29.4 0.0792 39.9 107353 ## 7 340030034023 2018 9 East 72nd Street Playground 40.8 -74.0 257. 16 22.4 25.9 0.143 31.5 138068 ## 8 340030035002 2018 3 East 72nd Street Playground 40.8 -74.0 184. 20 22.2 28.4 0.0873 35.1 42500 ## 9 340030035002 2019 5 Cherry Hill Fountain 40.8 -74.0 38.4 4 22.2 28.4 0.0183 35.1 42500 ## 10 340030040022 2018 1 Central Park 40.8 -74.0 110. 8 21.1 23.6 0.0748 37.1 83257 ## # … with 22,962 more rows, and abbreviated variable name ¹median_income ``` --- # Real world data: central park The data tells us for each .hi[census block group (CBG)] (600-3000 person locations): - visits per month to a particular location in central park by all cell phones in the CBG - how far the CBG is from the central park location (time and distance) - The median income of the CBG - The median age of the CBG ``` ## # A tibble: 22,972 × 13 ## visitor_cbgs year month location_name latitude longitude scaled_visits visits travel_distance_km travel_time_minutes visits_per_person median_age median…¹ ## <dbl> <dbl> <dbl> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 340030032003 2018 8 Harlem Meer 40.8 -74.0 34.8 4 22.9 29.3 0.0286 44.9 NA ## 2 340030032003 2018 8 Harlem Meer 40.8 -74.0 69.5 8 22.9 29.3 0.0571 44.9 NA ## 3 340030032003 2018 8 Harlem Meer 40.8 -74.0 34.8 4 22.9 29.3 0.0286 44.9 NA ## 4 340030034011 2018 11 Diana Ross Playground 40.8 -74.0 59.8 5 25.3 29.4 0.0510 39.9 107353 ## 5 340030034011 2019 8 Diana Ross Playground 40.8 -74.0 46 4 25.3 29.4 0.0392 39.9 107353 ## 6 340030034011 2019 11 Central Park 40.8 -74.0 92.9 8 25.3 29.4 0.0792 39.9 107353 ## 7 340030034023 2018 9 East 72nd Street Playground 40.8 -74.0 257. 16 22.4 25.9 0.143 31.5 138068 ## 8 340030035002 2018 3 East 72nd Street Playground 40.8 -74.0 184. 20 22.2 28.4 0.0873 35.1 42500 ## 9 340030035002 2019 5 Cherry Hill Fountain 40.8 -74.0 38.4 4 22.2 28.4 0.0183 35.1 42500 ## 10 340030040022 2018 1 Central Park 40.8 -74.0 110. 8 21.1 23.6 0.0748 37.1 83257 ## # … with 22,962 more rows, and abbreviated variable name ¹median_income ``` --- # Visits by where people live <img src="11-slides-travel-cost_files/figure-html/unnamed-chunk-12-1.png" style="display: block; margin: auto;" /> --- # Travel cost estimation with cell data We don't have the exact cost of households going to central park, but we have variables that are a good proxy Regression: `\(log(visits) = \beta_0 + \beta_1 log(travel\_distance\_km)\)` ``` ## NOTE: 237 observations removed because of infinite values (RHS: 237). ``` ```r central_park_demand ``` ``` ## # A tibble: 2 × 2 ## term estimate ## <chr> <dbl> ## 1 (Intercept) 2.10 ## 2 log(travel_distance_km) -0.0593 ``` What do the estimates mean? --- # Visualizing the relationship .pull-left[ <!-- --> ] .pull-right[ The number of visits decreases in distance The slope is the elasticity (-0.0593) A 1 percent increase in distance decreases visits by 0.0593 percent ] --- # The elasticity and omitted variables Other things probably affect how far someone lives from central park and how often they visit central park -- Ideas? -- New regression controlling for these factors: `$$log(visits) = \beta_0 + \beta_1 log(travel\_distance\_km) + \\ \beta_2 log(median\_income) + \beta_3 log(median\_age)$$` --- # The elasticity and omitted variables ``` ## NOTE: 2,036 observations removed because of NA and infinite values (RHS: 2,036). ``` ``` ## # A tibble: 4 × 2 ## term estimate ## <chr> <dbl> ## 1 (Intercept) 0.578 *## 2 log(travel_distance_km) -0.0252 versus -0.593 ## 3 log(median_income) 0.0858 ## 4 log(median_age) 0.134 ``` The elasticity dropped by two-thirds! -- Why? --- # The elasticity and omitted variables Rich people go to central park more than poorer people Older people go to central park more than younger people Where do richer older people tend to live? --- # The elasticity and omitted variables `$$log(travel\_distance\_km) = \beta_0 + \beta_1 log(median\_income)$$` ``` ## # A tibble: 2 × 5 ## term estimate std.error statistic p.value ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 (Intercept) 7.65 0.0942 81.2 0 ## 2 log(median_income) -0.520 0.00831 -62.6 0 ``` `$$log(travel\_distance\_km) = \beta_0 + \beta_1 log(median\_age)$$` ``` ## # A tibble: 2 × 5 ## term estimate std.error statistic p.value ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 (Intercept) 5.95 0.0913 65.1 0 ## 2 log(median_age) -1.15 0.0250 -46.2 0 ``` Richer and older people live closer to central park --- # The elasticity and omitted variables Why does this matter? -- Rich people can afford to live in Manhattan and they also like parks a lot -- Ignoring this makes it seem like the average person visits a lot less if they live further away -- But it is just the fact that poorer households tend to live in the outer boroughs of New York and likely cannot afford as many trips as richer households