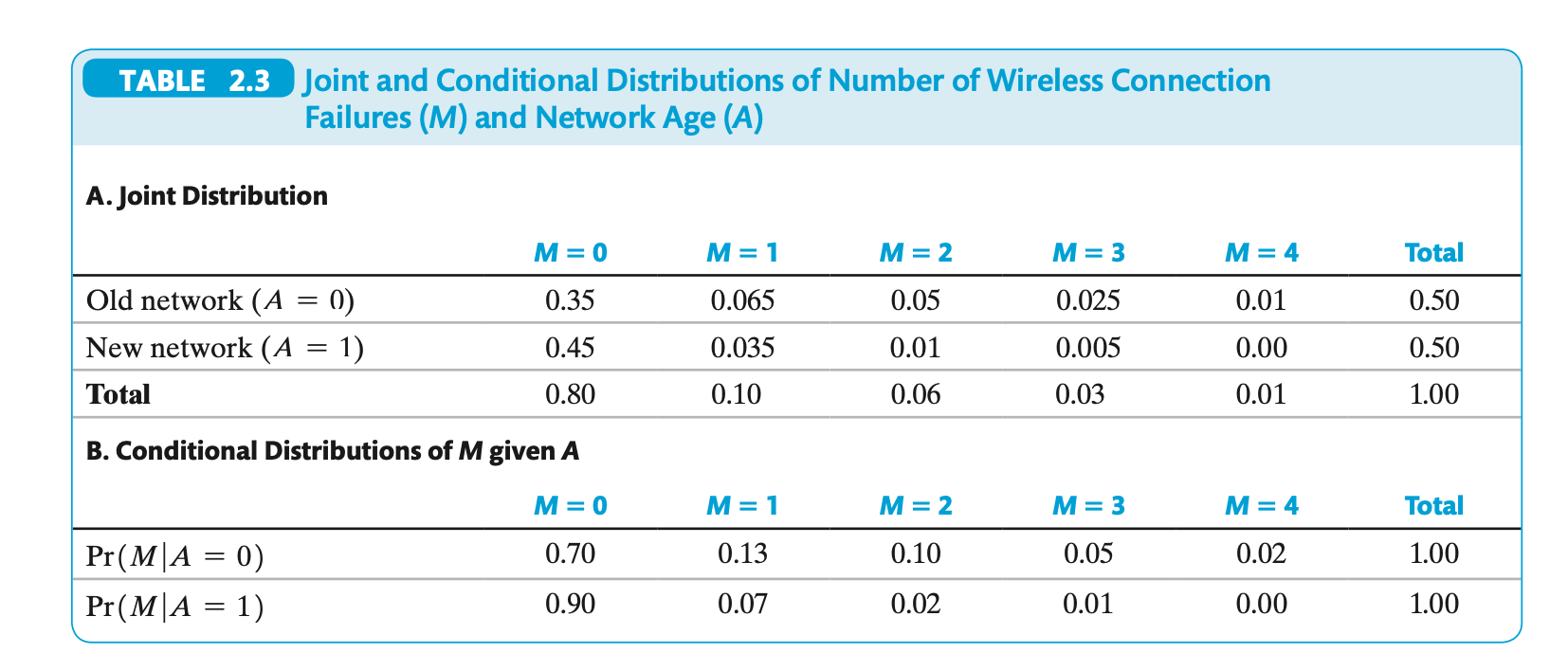

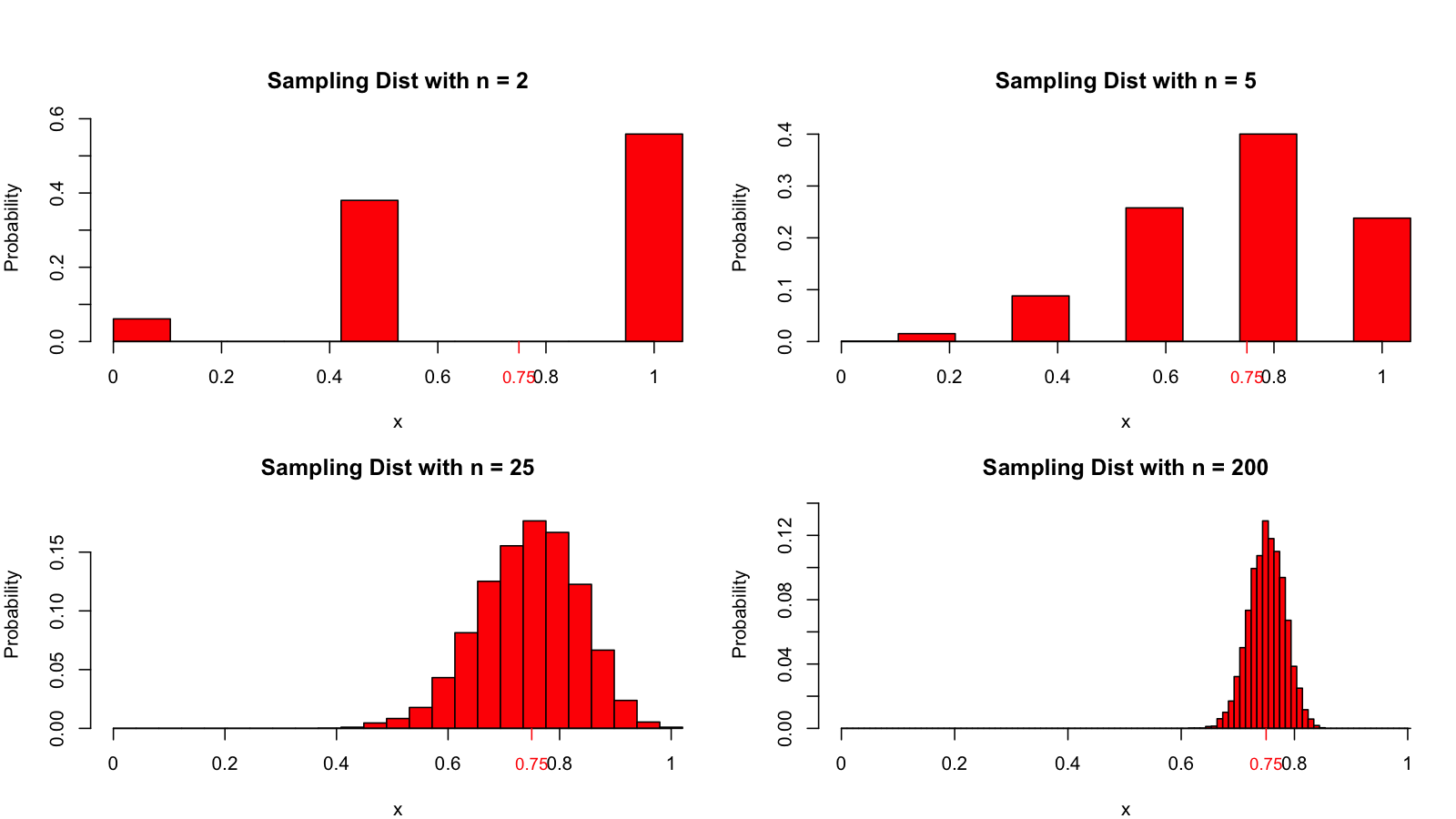

class: center, middle, inverse, title-slide .title[ # Econometrics ] .subtitle[ ## Probability: 🎲, 🔮, 🪙 ] .author[ ### Florian Oswald ] .date[ ### UniTo ESOMAS </br> 2025-10-09 ] --- layout: true <div class="my-footer"><img src="../img/logo/unito-shield.png" style="height: 60px;"/></div> --- # Probability This set of slides covers concepts from Stock and Watson chapter 2. --- # Probabilities *Probability* is a function that assigns a value in `\([0,1]\)` to a *set* (representing *events*). Consider a fair 6-sided dice. 🎲 * Outcome space: `\(\Omega = \{1,2,3,4,5,6\}\)` * Event: a partition of `\(\Omega\)`. -- For example, the events A ("the outcome is even") and B ("the outcome is odd") are: `$$\begin{align} A &= \{2,4,6\},\\ B &= \{1,3,5\}. \end{align}$$` Having a *fair* dice means that `$$\Pr(1) = \dots = \Pr(6) = \frac{1}{6}$$` --- class: inverse # Task 1: Probabilities Given a fair 6-sided dice 🎲, i.e. an outcome space `\(\Omega = \{1,2,3,4,5,6\}\)` and events `$$\begin{align} A &= \{2,4,6\},\\ B &= \{1,3,5\}. \end{align}$$` how does one determine the ***likelihood*** (or the **probability**) of event A occuring? --- # Discrete Random Variables A *Discrete Random Variable* is a function mapping outcomes to measurements. For example, we might call * `\(X\)` the number we obtain from throwing the dice once. * `\(X_1,X_2\)` the 2 numbers we obtain from throwing the dice two times. * `\(D\)` an indicator (either `0`, `1`) for whether a randomly sampled person answers "yes" or "no" when asked whether they have children. --- class: inverse # Gambler's Ruin The dealer tosses a fair coin. If it comes up tails (`T`) you win, if it's heads (`H`)you loose. Suppose we've seen the following sequence of tosses so far: ``` outcome: H H H H H H H H H H ? toss num: 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. ``` You have lost 10 times in a row now. Does this increase the probability that you will win the next round? -- Which sequence is more likely to occur? 1. `TTTTTTTTTT` (10 `T` in a row)? 2. `HTHTTHTHHT` (random occurence of `T` and `H`) --- ## Gambler's Ruin The probability of hitting 11 `H` in a row is $$ \Pr(\text{11 Heads in a Row}) = \frac{1}{2^{11}} = 0.00048 = 0.05\% $$ -- Ok, but I already had 10 `H`! What's the next toss likely going to be given that miserable history? $$ \Pr(\text{toss 11 is Heads} | 10 \text{ Heads before}) $$ Let's calculate that probability on the board! --- class: inverse # Task 2: 2 Dice Given two fair 6-sided dice 🎲 🎲, what is the probability of obtaining *at least* once the face "5"? --- layout: false class: title-slide-section-red, middle # Probability Distributions --- layout: true <div class="my-footer"><img src="../img/logo/unito-shield.png" style="height: 60px;"/></div> --- ## PDF/PMF and CDF of discrete RVs * PDF/PMF: Probability Density/Mass Function. Table listing each outcome and the associated probability of observing it. * CDF: Cumulative Distribution Function. Probability that a given RV takes on a value *less than or equal a certain value* (requires a notion of *ordering* - e.g. what about a dice with 6 different **colors**?) ### For our 6-sided fair dice ``` ## outcome pdf cdf ## 1 1 1/6 1/6 ## 2 2 1/6 2/6 ## 3 3 1/6 3/6 ## 4 4 1/6 4/6 ## 5 5 1/6 5/6 ## 6 6 1/6 6/6 ``` --- ## PDF/PMF and CDF of discrete RVs * PDF/PMF: Probability Density/Mass Function. Table listing each outcome and the associated probability of observing it. * CDF: Cumulative Distribution Function. Probability that a given RV takes on a value *less than or equal a certain value* (requires a notion of *ordering* - e.g. what about a dice with 6 different **colors**?) ### For our 6-sided fair dice .pull-left[ <img src="chapter_probability_files/figure-html/unnamed-chunk-2-1.svg" style="display: block; margin: auto;" /> ] .pull-right[ <img src="chapter_probability_files/figure-html/unnamed-chunk-3-1.svg" style="display: block; margin: auto;" /> ] --- ## PDF/PMF and CDF of discrete RVs * PDF/PMF: Probability Density/Mass Function. Table listing each outcome and the associated probability of observing it. * CDF: Cumulative Distribution Function. Probability that a given RV takes on a value *less than or equal a certain value* (requires a notion of *ordering* - e.g. what about a dice with 6 different **colors**?) ### For our fair coin ``` ## outcome pdf cdf ## 1 H(0) 1/2 1/2 ## 2 T(1) 1/2 2/2 ``` --- ## PDF/PMF and CDF of discrete RVs * PDF/PMF: Probability Density/Mass Function. Table listing each outcome and the associated probability of observing it. * CDF: Cumulative Distribution Function. Probability that a given RV takes on a value *less than or equal a certain value* (requires a notion of *ordering* - e.g. what about a dice with 6 different **colors**?) ### For our fair coin .pull-left[ <img src="chapter_probability_files/figure-html/unnamed-chunk-5-1.svg" style="display: block; margin: auto;" /> ] .pull-right[ <img src="chapter_probability_files/figure-html/unnamed-chunk-6-1.svg" style="display: block; margin: auto;" /> ] --- ## Bernoulli Distribution * A special discrete RV with 2 outcomes: `0` and `1`, where event `1` ("success") occurs with probability `\(p\)`. For example: * Tomorrow it will rain with probability `\(p\)` (it will *not* rain with `\(1-p\)`). -- What kind of RV is flipping a *fair* coin? What about an *unfair* coin? --- ## PDFs and CDFs of continuous Variables * slightly more complicated because we need calculus and integration. * The basic idea is the same! --- layout: false class: title-slide-section-red, middle # Expected Value and Variance --- layout: true <div class="my-footer"><img src="../img/logo/unito-shield.png" style="height: 60px;"/></div> --- ## Expected Value and Variance We write `$$E(Y) = y_1 p_1 + \dots + y_n p_n$$` For example for our dice example, where `\(X\)` is the result of throwing the dice: `$$\begin{align} E(X) &= 1 \times \Pr(1) + 2 \times \Pr(2) + \dots + 6 \times \Pr(6) \\ &= \end{align}$$` **Everybody calculate this result now!** --- # Expected Value and Variance We write $$ E(Y) = y_1 p_1 + \dots + y_n p_n $$ For example for our dice example, where `\(X\)` is the result of throwing the dice: `$$\begin{align} E(X) &= 1 \times \Pr(1) + 2 \times \Pr(2) + \dots + 6 \times \Pr(6) \\ &= 1 \times \frac{1}{6} + 2 \times \frac{1}{6} + \dots + 6 \times \frac{1}{6} \\ &= 3.5 \\ \end{align}$$` * So: We need to know ***all*** weights and values (`\(\Pr\)` and `\(X\)`) in order to compute this quantity. --- # `\(E(X)\)` is a Theoretical Concept. Mission Impossible? * Imagine you get this message: -- 1. Your mission, should you choose to accept it, is to inspect this device : 🔮. Whenever you touch it, it displays a number in `\(\{1,\dots,10\}\)`. E.g. `4,8,4,9,1,5,10,9,1`...kind of random. 2. We must know the long-run average, i.e. E(🔮). Or something terrible will happen! 3. This message will destroy itself in 10,9,8,...💣 -- * You **don't know** the theoretical distribution of all the numbers in 🔮 (the `\(\Pr\)`'s). Time is running ⏰ -- * What could you do? 🤔 --- <iframe src="https://giphy.com/embed/plVdDRfj5WV47sIAsh" width="960" height="538" style="" frameBorder="0" class="giphy-embed" allowFullScreen></iframe> --- # `\(E(X)\)` is a Theoretical Concept. Mission Impossible? * If we had a huge number of observations from 🔮 (a *sample*), we could come up with a *guess* of E(🔮), based on the data we got. Empirical, like. ``` r set.seed(12345) # to ensure reproducibility Ps = runif(10) Ps[c(2,3)] = 0 Ps = Ps / sum(Ps) # generate random weights x = sample(1:10, size = 10000, replace = TRUE, prob = Ps) xbar = mean(x) xbar ``` ``` ## [1] 6.3418 ``` -- * `xbar` = `\(\bar{x} = \frac{1}{N} \sum_i^{N} x_i\)` is called *Sample Mean*, *Arithmetic Average*, *Sample Average* * `\(\bar{x}\)` is an ***estimator*** for `\(E(X) = \mu_X\)`. (`\(E(X) = \mu_X\)` by the way.) --- # Central Tendency - Mean and Median .pull-left[ `mean(x)`: the average of all values in `x`. `$$E(X) = \mu_X = \frac{1}{N}\sum_{i=1}^N x_i = \bar{x}$$` ***The second equality 👆 is correct only if...???*** ``` r x <- c(1,2,2,2,2,100) mean(x) ``` ``` ## [1] 18.16667 ``` ``` r mean(x) == sum(x) / length(x) ``` ``` ## [1] FALSE ``` ] -- .pull-right[ `median`: the value `\(x_j\)` below and above which 50% of the values in `x` lie. `\(m\)` is the median if `$$\Pr(X \leq m) \geq 0.5 \text{ and } \Pr(X \geq m) \geq 0.5$$` The median is robust against *outliers*. ``` r median(x) ``` ``` ## [1] 2 ``` ] --- ## Quick Review 1. EV of a bernoulli 2. Continuous RV --- # Variance and Standard Deviation * A measure of *spread* of a distribution. * The definition of *variance* is `$$var(Y) = E[(Y-\mu_Y)^2]$$` if `\(Y\)` is discrete, `$$var(Y) = \sum_{i=1}^N (y_i-\mu_Y)^2 \times p_i.$$` -- * There is an issue with scaling: we *square* deviations. * The standard deviation scales back to units of the data: `$$\sigma_Y = \sqrt{var(Y)}$$` ??? * why squared? * because deviations of same magnitude but opposite sign cancel out * mean([5,15]) also because want to "exaggerate" larger deviations * could also take absolute value of deviations no? --- ## Variance .pull-left[ Consider two `normal distributions` with equal mean at `0`: ] -- .pull-right[ <img src="chapter_probability_files/figure-html/unnamed-chunk-11-1.svg" style="display: block; margin: auto;" /> Compute with: ``` r var(x) ``` ] --- ## Example of other Spread Measures .pull-left[ ``` r # % catholic in 47 french-speaking # swiss cantons in 1888 plot(swiss$Catholic,rep(1,nrow(swiss)),pch = 3, cex = 2,xlab = "% Catholic",yaxt = "n",ylab = "") ``` <img src="chapter_probability_files/figure-html/unnamed-chunk-13-1.svg" style="display: block; margin: auto;" /> ] .pull-right[ How do the values in column `Catholic` *vary*? | Measure | `R` | Result | |:---------:|:-------------------:|:---------------------:| | Variance | `var(swiss$Catholic)` | 1739.29 | | Standard Deviation | `sd(swiss$Catholic)` | 41.7 | | IQR | `IQR(swiss$Catholic)` | 87.93 | | Minimum | `min(swiss$Catholic)` | 2.15 | | Maximum | `max(swiss$Catholic)` | 100 | | Range | `range(swiss$Catholic)` | 2.15, 100 | ] --- class: inverse # Task 3: Computing Variance by hand 1. Compute the variance of our 6-sided dice! 2. compute the variance of `\(X \sim \text{Bernoulli}(p)\)`! Bonus question: what value of `\(p\)` maximizes this variance? --- layout: false class: title-slide-section-red, middle # Two Random Variables <br> `\((X,Y)\)`, (🎲, 🎲), (🎲, 🪙), (🪙, 🪙) --- layout: true <div class="my-footer"><img src="../img/logo/unito-shield.png" style="height: 60px;"/></div> --- # Two Random Variables: Example .pull-left[ <img src="chapter_probability_files/figure-html/x-y-corr-1.svg" style="display: block; margin: auto;" /> ] .pull-right[ * Here, `\(X\)` and `\(Y\)` are ***joint normally*** distributed. * We would write $$(X, Y) \sim \mathcal{N}\biggl( \begin{bmatrix} \mu_X \\ \mu_Y \end{bmatrix}, \, \Sigma \biggr)$$` where `\(\Sigma\)` is a *matrix* `$$\begin{bmatrix} \sigma_X^2 & \rho \, \sigma_X \sigma_Y \\ \rho \, \sigma_X \sigma_Y & \sigma_Y^2 \end{bmatrix}$$` ] Taking as example the data in this plot, the concepts *covariance* and *correlation* relate to the following type of question: --- # Tabulating Data `table(x)` is a useful function that counts the occurence of each unique value in `x`: ``` r # install.packages("dslabs") in order to use this command data("gapminder",package = "dslabs") table(gapminder$continent) ``` ``` ## ## Africa Americas Asia Europe Oceania ## 2907 2052 2679 2223 684 ``` -- The same can be achieved using the `count` function (from `dplyr`) ``` r gapminder %>% count(continent) ``` ``` ## continent n ## 1 Africa 2907 ## 2 Americas 2052 ## 3 Asia 2679 ## 4 Europe 2223 ## 5 Oceania 684 ``` --- # Tabulating Data Given two variables, `table` produces a contingency table: ``` r gapminder_new <- gapminder %>% filter(year == 2015) %>% mutate(fertility_above_2 = (fertility > 2.1)) # dummy variable for fertility rate above replacement rate ``` ``` r table(gapminder_new$fertility_above_2) ``` ``` ## ## FALSE TRUE ## 80 104 ``` ``` r table(gapminder_new$continent,gapminder_new$fertility_above_2) ``` ``` ## ## FALSE TRUE ## Africa 2 49 ## Americas 15 20 ## Asia 20 27 ## Europe 39 0 ## Oceania 4 8 ``` --- # Cross-Tabulating Data: A joint distribution! The probability that `\(X = x\)` and `\(Y=y\)` is `$$\Pr(X=x,Y=y)$$` .pull-left[ ``` r # table with absolute numbers abstab = table(gapminder_new$continent, gapminder_new$fertility_above_2) (jd <- round(prop.table(abstab),2)) # proportions in each cell ``` ``` ## ## FALSE TRUE ## Africa 0.01 0.27 ## Americas 0.08 0.11 ## Asia 0.11 0.15 ## Europe 0.21 0.00 ## Oceania 0.02 0.04 ``` * check: proper probability distribution? ``` r sum(jd) # sums up all elements ``` ``` ## [1] 1 ``` ] -- .pull-right[ * for instance `$$\Pr(\text{Asia},\text{TRUE}) = 0.14673$$` * You can see 👀 that this is just the **share** of cases in each bin! ] --- # Tabulating Data: marginal distributions! .pull-left[ ``` r # proportions by row (m1 = prop.table(abstab, margin = 1)) ``` ``` ## ## FALSE TRUE ## Africa 0.03921569 0.96078431 ## Americas 0.42857143 0.57142857 ## Asia 0.42553191 0.57446809 ## Europe 1.00000000 0.00000000 ## Oceania 0.33333333 0.66666667 ``` check? ``` r rowSums(m1) ``` ``` ## Africa Americas Asia Europe Oceania ## 1 1 1 1 1 ``` ] -- .pull-right[ ``` r # proportions by column (m2 = prop.table(abstab, margin = 2)) ``` ``` ## ## FALSE TRUE ## Africa 0.02500000 0.47115385 ## Americas 0.18750000 0.19230769 ## Asia 0.25000000 0.25961538 ## Europe 0.48750000 0.00000000 ## Oceania 0.05000000 0.07692308 ``` check? ``` r colSums(m2) ``` ``` ## FALSE TRUE ## 1 1 ``` ] --- # Joint Distributions The probability that `\(X = x\)` and `\(Y=y\)` is `$$\Pr(X=x,Y=y)$$` ``` ## Rain (X=0) No Rain (X=1) ## Long Commute (Y=0) 0.15 0.07 ## Short Commute (Y=1) 0.15 0.63 ``` for instance, * `\(\Pr(Y = 1, X = 0) = 0.15\)` --- # Marginal Distributions `$$\Pr(Y = y) = \sum_{i=1}^k \Pr(X=x_i, Y = y)$$` ``` ## Rain (X=0) No Rain (X=1) ## Long Commute (Y=0) 0.15 0.07 ## Short Commute (Y=1) 0.15 0.63 ``` * Probability of long commute, *irrespective of rain*, is 0.15 + 0.07 = 0.22 * Probability of rain, *irrespective of length of commute*, is 0.15 + 0.15 = 0.3 --- class: inverse # Task 4 : Computing Marginal Distributions <div class="countdown" id="timer_96c01882" data-update-every="1" tabindex="0" style="top:0;right:0;"> <div class="countdown-controls"><button class="countdown-bump-down">−</button><button class="countdown-bump-up">+</button></div> <code class="countdown-time"><span class="countdown-digits minutes">05</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> .pull-left[ ``` r r ``` ``` ## Rain (X=0) No Rain (X=1) ## Long Commute (Y=0) 0.15 0.07 ## Short Commute (Y=1) 0.15 0.63 ``` ] .pull-right[ 1. Compute the marginal distribution of `\(X\)` 1. Compute the marginal distribution of `\(Y\)` ] --- # Conditional distributions * Conditional distribution is `\(\Pr(X=x|Y=y) = \frac{\Pr(X=x,Y=y)}{\Pr(Y=y)}\)` * We **fix** the value of one variable, and look at the resulting distribution of the other variable. * Notice, the result must be a *valid* probability distribution, i.e., it must sum to 1. * We compute the probability as before, but restrict attention to where the condition we impose is true. --- class: inverse # Task 5: compute conditional distribution <div class="countdown" id="timer_63a8f2f9" data-update-every="1" tabindex="0" style="top:0;right:0;"> <div class="countdown-controls"><button class="countdown-bump-down">−</button><button class="countdown-bump-up">+</button></div> <code class="countdown-time"><span class="countdown-digits minutes">03</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> Consider ``` ## Rain (X=0) No Rain (X=1) ## Long Commute (Y=0) 0.15 0.07 ## Short Commute (Y=1) 0.15 0.63 ``` * Suppose we know that it does not rain `\(x = 1\)`. What is the distribution of `\(Y\)`, *given* this knowledge, i.e. what is the distribution `\(\Pr(Y|X=1)\)` ? --- class: inverse # Task 6: Computing More Conditional Distributions <div class="countdown" id="timer_647061a8" data-update-every="1" tabindex="0" style="top:0;right:0;"> <div class="countdown-controls"><button class="countdown-bump-down">−</button><button class="countdown-bump-up">+</button></div> <code class="countdown-time"><span class="countdown-digits minutes">05</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> .pull-left[ ``` r jd ``` ``` ## ## FALSE TRUE ## Africa 0.01 0.27 ## Americas 0.08 0.11 ## Asia 0.11 0.15 ## Europe 0.21 0.00 ## Oceania 0.02 0.04 ``` ] .pull-right[ 1. Compute the Distribution of countries conditional on low fertility? 2. Compute the Distribution of countries conditional on being in Asia? 3. Compute the marginal distribution of high/low fertility ] --- # Conditional Expectation We define `$$E(Y|X = x) = \sum_{i=1}^k y_i \Pr(Y = y_i|X=x)$$` * Notice the `\(y_i\)` there! * Basically this is expected value of `\(Y\)`, but **under the assumption that** `\(X\)` takes on the value `\(x\)`. * We *fix* the joint distribution of `\((Y,X)\)` at a certain value `\(X=x\)`. --- class: inverse # Task 7: Conditional Expectation  1. Compute `\(E(M)\)` 2. Compute `\(E(M|A = 0)\)` --- # Law of Iterated Expectations * We can decompose the expected value of `\(Y\)` into subgroups, see what fraction of total probability each group makes up, and compute their weighted average. -- ``` ## name sex height ## 1 Peter M 1.90 ## 2 James M 1.75 ## 3 Mary F 1.68 ## 4 John M 1.86 ## 5 Amy F 1.59 ``` * The average height here is `\((1.9 + 1.75 + 1.68 + 1.86 + 1.59) / 5 = 1.756\)`: --- # Law of Iterated Expectations > The average height here is 1.756 * What is the average for each **group** by sex? ``` ## # A tibble: 2 × 3 ## sex n mean_height ## <chr> <int> <dbl> ## 1 F 2 1.64 ## 2 M 3 1.84 ``` -- * Can we recover the unconditional mean from this information? Yes we can! * We need again *weights* and *values*, like for the standard `\(E(Y)\)` ``` r weights = c(2/5, 3/5) values = c(1.635, 1.836667) sum(weights * values) # 2/5 * 1.635 + 3/5 * 1.836667 ``` ``` ## [1] 1.756 ``` --- # Law of Iterated Expectations * What did we just do? * We established that we can get the unconditional mean of `\(Y\)` from the *conditional* one, if we know the *weights* we have to give to each subgroup. More precisely: .pull-left[ 1. We know `\(E(Y|X)\)`: 1. `\(E(Y|\text{male})= 1.836667\)` 2. `\(E(Y|\text{female})= 1.635\)` 2. We know the proportion of both groups: 1. `\(\Pr(\text{male})= 3/5\)` 2. `\(\Pr(\text{female})= 2/5\)` ] .pull-right[ Therefore, we can recompose `\(E(Y)\)`: `$$\begin{align} E(Y) &= \sum_{i=1}^k E(Y|X = x_i) \Pr(X = x_i)\\ &= E_X\left[ E(Y|X) \right] \end{align}$$` ] --- # Law of Iterated Expectations * One particular version of this comes up often in econometrics: `$$E[Y|X] = 0 \Rightarrow E[Y] = 0$$` * Why? Well, now you know that `\(E[Y|X] = 0\)` for all possible `\(X\)` is zero. * Compute `\(E(Y) = \sum_{i=1}^k 0 \times \Pr(X = x_i)\)`! --- # Conditional Expectation and Prediction Errors * We said that the the squared error makes sense (talking about variance) * Suppose we want to design a **predictor** `\(g(X)\)` which can **predict** `\(Y\)` reasonably well. One criterion for that might be the *average squared error of prediction*. -- * In other words, what is the solution to this problem? `$$\min_m E\left[ (Y - m)^2 \right]$$` --- # Independence * We call 2 RVs *independent* if knowing the value of one of them is not informative about the value of the other. * The precise definition of this statement is `$$\Pr(X=x | Y = y) = \Pr(X=x)$$` -- * or: `$$\Pr(X=x , Y = y) = \Pr(X=x) \Pr(Y=y)$$` --- # Covariance * Covariance tells us how two RVs co-vary. `$$\begin{align} cov(X,Y) = \sigma_{XY} &= E\left[ (X - \mu_X) (Y- \mu_Y) \right] \\ &= \sum_{i=1}^k \sum_{j=1}^m (x_i - \mu_X) (y_j- \mu_Y) \Pr(X = x_i, Y = y_j) \end{align}$$` * Notice how this object tells us how large deviations of `\(X\)` from its mean *jointly* occur with such deviations of `\(Y\)`. * This confounds the scale of `\(X\)` and `\(Y\)`, however, so not easy to interpret --- # Correlation * Correlation fixes this issue. `$$corr(X,Y) = \frac{cov(X,Y)}{\sqrt{var(X)var(Y)}} = \frac{\sigma_{XY}}{\sigma_X \sigma_Y}$$` * Correlation is always between -1 and 1. * `\(corr(X,Y) = 0\)` means they are *uncorrelated*. --- # Correlation and Conditional Mean * If the conditional mean of `\(Y\)` does **not** depend on `\(X\)`, then they must be uncorrelated. * That is, if `$$E(Y|X) = \mu_Y \Rightarrow cov(X,Y) = 0 \text{ and } corr(X,Y) = 0$$` --- class: inverse # Task 8 : Show Correlation and Conditional Mean Show this result! `$$E(Y|X) = \mu_Y \Rightarrow cov(X,Y) = 0 \text{ and } corr(X,Y) = 0$$` --- # Random Sampling (SW KC 2.5) * Suppose we **randomly** select `\(n\)` objects from a large population. * `\(Y_i\)` is the value of `\(i\)`-th object chosen. * Each object `\(i\)` has the same chance of getting drawn, which means that the *distribution of the `\(Y\)`s is the same*, and object `\(i\)` being drawn does not depend on object `\(j\)` being drawn. * **Same** means **identical**. So, the `\(Y\)`s are ***identically and independently*** distributed. --- # Sampling Distribution of Sample Average * We already defined the **sample average**: `$$\bar{y} = \frac{1}{n}(y_1 + y_2 + \dots + y_n) = \frac{1}{n}\sum_{i=1}^n y_i$$` -- * A core concept is to realize that the value of `\(\bar{y}\)` is itself ***random***. Just imagine drawing a second sample of `\(y\)`'s -- * Because `\(\bar{y}\)` varies with each sample, the object `\(\bar{y}\)` has a **sampling distribution**. * Every well-defined distribution has a **mean** and a **variance** - same for this sampling distribution. Let's figure it out! --- # Mean of the Sample Average * The mean of the sample average (*the mean of the mean!*) is just `$$E(\bar{y}) = E\left[\frac{1}{n}\sum_{i=1}^n y_i\right] = \frac{1}{n}\sum_{i=1}^n E[y_i] = \frac{1}{n} n \mu_Y$$` -- * So that's good news. The expected value is equal to the population mean. * This statement is important. We say that `\(\bar{y}\)` is an ***unbiased estimator*** for `\(E(y)\)`. --- class: inverse # Task 9: Variance of the Sample Average * Similarly, `$$var(\bar{y}) = var\left( \frac{1}{n}\sum_{i=1}^n y_i \right) = \frac{\sigma_Y^2}{n}$$` * Show this result! --- # Properties of the Sample Mean The following facts **always** hold for the sample mean: 1. `\(E(\bar{y}) = \mu_Y\)` 2. `\(var(\bar{y}) = \frac{\sigma_Y^2}{n}\)` 3. `\(std.dev(\bar{y}) = \frac{\sigma_Y}{\sqrt{n}}\)` -- Those are true ***whenever `\(y\)` is i.i.d***. In particular, we do **not** need the `\(y\)` to be normally distributed! --- # Sampling from a Normal Distribution 1. The sum of normally distributed RVs is itself normally distributed. -- 2. Suppose we draw `\(Y_i\)` i.i.d from a normal distribution. -- 3. We already know the mean and variance of the sample mean `\(\bar{y}\)` in this case (previous slide.) -- 4. In this **special case** we actually ***know*** the full distribution of `\(\bar{y}\)`, which has to be *normal* itself (see point 1). That is `$$\bar{y} \sim \mathcal{N}\left(\mu_y, \frac{\sigma_Y^2}{n} \right)$$` -- What if we **don't know** the distribution of our `\(Y_i\)`? --- # **NOT** Sampling from a Normal Distribution * This is clearly the empirically relevant case. We almost **never** know the precise distribution of our data. * Let us repeat that: > The following facts **always** hold for the sample mean: > 1. `\(E(\bar{y}) = \mu_Y\)` > 2. `\(var(\bar{y}) = \frac{\sigma_Y^2}{n}\)` > 3. `\(std.dev(\bar{y}) = \frac{\sigma_Y}{\sqrt{n}}\)` > Those are true ***whenever `\(y\)` is i.i.d***. In particular, we do **not** need the `\(y\)` to be normally distributed! --- # Asymptotic Distributions * If we don't know the *exact* distribution of the data, we can still derive an **approximate** distribution. * The approximation requires that we have a lot of data, i.e. `\(n\)` is large. * We are using 2 key tools for this: The Law of Large Numbers and the Central Limit Theorem. --- # The Law of Large Numbers * We say that a sequence of RVs `\(z_n\)` **converges in probability** to the constant `\(a\)` if the probability that `\(z_n\)` comes to lie in the interval `\(\left[a - c, a + c\right]\)` goes to 1 as n goes to infinity. * We would write that as `$$z_n \overset{p}{\to} a$$` -- The Law of Large Numbers says that *if* `\(Y_i\)` are i.i.d. with `\(E(Y)=\mu_Y\)`, then - if large outliers are very unlikely (if `\(var((Y) \sigma_Y^2 < \infty\)`)) - the sample mean converges in probability to the population mean, i.e. `$$\bar{y} \overset{p}{\to} \mu_Y$$` * This property (`\(\bar{y} \overset{p}{\to} \mu_Y\)`) is called ***consistency***: `\(\bar{y}\)` is **consistent** for `\(\mu_Y\)`. --- # Demo ``` r set.seed(45) n = 2 p = 0.78 s = sample(c(0,1), size = n, prob = c(1-p, p), replace = TRUE) print(s) ``` ``` ## [1] 1 1 ``` ``` r mean(s) # ybar ``` ``` ## [1] 1 ``` ``` r n = 10 s = sample(c(0,1), size = n, prob = c(1-p, p), replace = TRUE) print(s) ``` ``` ## [1] 1 1 1 1 1 1 1 1 1 0 ``` ``` r mean(s) # ybar ``` ``` ## [1] 0.9 ``` ---  --- # The Central Limit Theorem * This important theorem says that under relavitely mild conditions, the distibution of `\(\bar{y}\)` is **normal** when `\(n\)` is large. * We already knew from above that the mean and variance of `\(\bar{y}\)` are `\(\mu_Y\)` and `\(\frac{\sigma_Y^2}{n}\)` - *whatever* the underlying distribution of `\(y\)` was. -- * But that `\(\bar{y}\)` would converge to a normal if `\(n\)` large, that is new! -- * This holds for ***any*** distribution that `\(Y\)` might have! --- # Demo ``` r library(shinyCLT) CLT() ``` --- ``` r library(CLT) CLT() ``` class: inverse # Task 4: Summarising data <div class="countdown" id="timer_dc73eb64" data-update-every="1" tabindex="0" style="top:0;right:0;"> <div class="countdown-controls"><button class="countdown-bump-down">−</button><button class="countdown-bump-up">+</button></div> <code class="countdown-time"><span class="countdown-digits minutes">10</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> 1. Compute the mean of GDP in 2011 and assign to object `mean`. You should exclude missing values. (*Hint: read the help for `mean` to remove `NA`s*). 1. Compute the median of GDP in 2011 and assign to object `median`. Again, you should exclude missing values. Is it greater or smaller than the average? 1. Create a density plot of GDP in 2011 using `geom_density`. A density plot is a way of representing the distribution of a numeric variable. Add the following code to your plot to show the median and mean as vertical lines. What do you observe? `geom_vline(xintercept = as.numeric(mean), colour = "red") +` <br> `geom_vline(xintercept = as.numeric(median), colour = "orange")` 1. Compute the correlation between fertility and infant mortality in 2015. To drop `NA`s in either variable set the argument `use` to "pairwise.complete.obs" in your `cor()` function. Is this correlation consistent with the graph you produced in Task 3? --- class: title-slide-final, middle background-image: url(../img/logo/esomas.png) background-size: 250px background-position: 9% 19% # That's all for Probability! | | | | :--------------------------------------------------------------------------------------------------------- | :-------------------------------- | | <a href="https://github.com/floswald/Econometrics-Slides">.ScPored[<i class="fa fa-link fa-fw"></i>] | Slides | | <a href="https://floswald.github.io">.ScPored[<i class="fa fa-link fa-fw"></i>] | My Homepage | | <a href="https://scpoecon.github.io/Econometrics/">.ScPored[<i class="fa fa-github fa-fw"></i>] | Book |