Using APIs

SICSS, 2022

Christopher Barrie

Introduction

- Why count tweets?

Test your API call
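Before scaling up, it can help to check that a small test call returns what you expect. The sketch below is minimal and assumes the response has already been parsed into a data frame with the `end`, `start`, and `tweet_count` columns shown on the following slides. The helper name `check_counts()` and the toy input are hypothetical.

```r
# Hypothetical helper: sanity-check a data frame of tweet counts
# returned by a small test API call (columns as in the output shown later)
check_counts <- function(counts) {
  expected <- c("end", "start", "tweet_count")
  missing_cols <- setdiff(expected, names(counts))
  if (length(missing_cols) > 0) {
    stop("Missing columns: ", paste(missing_cols, collapse = ", "))
  }
  # Counts should be numeric and non-negative
  stopifnot(is.numeric(counts$tweet_count),
            all(counts$tweet_count >= 0))
  # Summary of what came back: number of time bins and total tweets
  list(n_bins = nrow(counts), total = sum(counts$tweet_count))
}

# Toy input built from the first two rows of the hourly output
test_counts <- data.frame(
  end = c("2019-12-27T01:00:00.000Z", "2019-12-27T02:00:00.000Z"),
  start = c("2019-12-27T00:00:00.000Z", "2019-12-27T01:00:00.000Z"),
  tweet_count = c(47, 38)
)
check_counts(test_counts)
```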

Of substantive interest

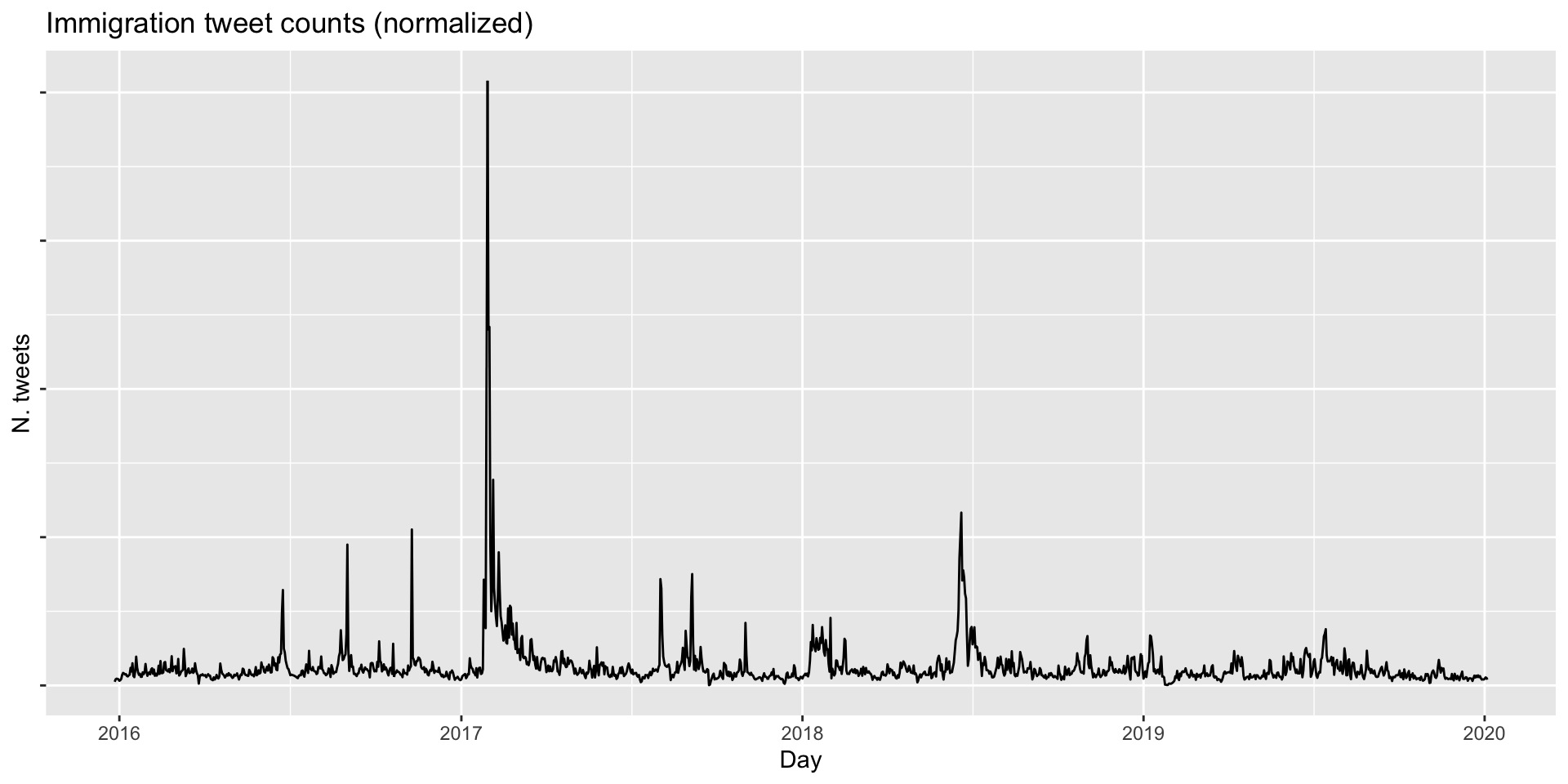

- But… design considerations (normalizing raw counts against a baseline)

Counting tweets

Format date

```
                       end                    start tweet_count
1 2019-12-27T01:00:00.000Z 2019-12-27T00:00:00.000Z          47
2 2019-12-27T02:00:00.000Z 2019-12-27T01:00:00.000Z          38
3 2019-12-27T03:00:00.000Z 2019-12-27T02:00:00.000Z          19
4 2019-12-27T04:00:00.000Z 2019-12-27T03:00:00.000Z          14
5 2019-12-27T05:00:00.000Z 2019-12-27T04:00:00.000Z          10
6 2019-12-27T06:00:00.000Z 2019-12-27T05:00:00.000Z          12
```
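The `start` and `end` columns come back as ISO 8601 strings. Below is a minimal base-R sketch of converting them to date-times. It assumes `tweetcounts` looks like the data frame printed above and that times should stay in UTC, as the trailing `Z` indicates. The slides may use a different function for this step.

```r
# Toy version of the data frame printed above
tweetcounts <- data.frame(
  end = c("2019-12-27T01:00:00.000Z", "2019-12-27T02:00:00.000Z",
          "2019-12-27T03:00:00.000Z"),
  start = c("2019-12-27T00:00:00.000Z", "2019-12-27T01:00:00.000Z",
            "2019-12-27T02:00:00.000Z"),
  tweet_count = c(47, 38, 19)
)

# Parse the start of each bin as a UTC date-time.
# strptime() ignores trailing characters (".000Z") not covered by the format.
tweetcounts$time <- as.POSIXct(tweetcounts$start,
                               format = "%Y-%m-%dT%H:%M:%S",
                               tz = "UTC")

head(tweetcounts["time"])
```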

```
                 time
1 2019-12-27 00:00:00
2 2019-12-27 01:00:00
3 2019-12-27 02:00:00
4 2019-12-27 03:00:00
5 2019-12-27 04:00:00
6 2019-12-27 05:00:00
```

Adding arguments

Adding user parameters
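Wrapping the call in a function lets the user pass the query, date range, and granularity as arguments. For example, a query can be restricted to one account with the `from:` operator, and counts can be binned by day. The sketch below is minimal and assumes the Twitter API v2 full-archive counts endpoint (`/2/tweets/counts/all`) with its `query`, `start_time`, `end_time`, and `granularity` parameters. `build_counts_url()` and the `from:exampleuser` query are hypothetical. The request itself needs a bearer token, so it is left as a comment.

```r
# Hypothetical helper: build a request URL for tweet counts, with the
# query, date range and granularity supplied by the user.
# Endpoint and parameter names assume the Twitter API v2 counts endpoint.
build_counts_url <- function(query, start_time, end_time,
                             granularity = c("day", "hour", "minute")) {
  # Defaults to daily bins in this helper; rejects other values
  granularity <- match.arg(granularity)
  params <- c(query = query,
              start_time = start_time,
              end_time = end_time,
              granularity = granularity)
  # Percent-encode each value (colons, spaces, etc.)
  encoded <- vapply(params, URLencode, character(1), reserved = TRUE)
  paste0("https://api.twitter.com/2/tweets/counts/all?",
         paste(names(params), encoded, sep = "=", collapse = "&"))
}

url <- build_counts_url(query = "from:exampleuser",
                        start_time = "2019-12-27T00:00:00Z",
                        end_time = "2020-01-05T00:00:00Z",
                        granularity = "day")

# Sending the request needs credentials, e.g. with the httr package:
# resp <- httr::GET(url, httr::add_headers(
#   Authorization = paste("Bearer", bearer_token)))
```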

```
                       end                    start tweet_count
1 2019-12-28T00:00:00.000Z 2019-12-27T00:00:00.000Z           1
2 2019-12-29T00:00:00.000Z 2019-12-28T00:00:00.000Z           0
3 2019-12-30T00:00:00.000Z 2019-12-29T00:00:00.000Z           1
4 2019-12-31T00:00:00.000Z 2019-12-30T00:00:00.000Z           4
5 2020-01-01T00:00:00.000Z 2019-12-31T00:00:00.000Z           6
6 2020-01-02T00:00:00.000Z 2020-01-01T00:00:00.000Z           2
7 2020-01-03T00:00:00.000Z 2020-01-02T00:00:00.000Z           3
8 2020-01-04T00:00:00.000Z 2020-01-03T00:00:00.000Z           1
9 2020-01-05T00:00:00.000Z 2020-01-04T00:00:00.000Z           0
```

Normalizing

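```r
# Divide the counts for the query of interest by the counts for a
# baseline query collected over the same periods and granularity
normalize_counts <- function(tweetcounts, baselinecounts) {
  # Both data frames must cover the same time bins, in the same order;
  # otherwise the element-wise division pairs up the wrong counts
  stopifnot(identical(tweetcounts$start, baselinecounts$start))
  normalized_counts <- tweetcounts$tweet_count / baselinecounts$tweet_count
  return(normalized_counts)
}

tweetcounts$normalized_count <-
  normalize_counts(tweetcounts = tweetcounts,
                   baselinecounts = baselinecounts)

head(tweetcounts$normalized_count)
#> [1] 0.8641441 1.4070588 1.8567669 2.3317991 1.1134310 1.0938708
```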
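A ratio is only meaningful if each count is divided by the baseline count for the same time bin. The sketch below is a minimal alternative that matches rows on `start` with `merge()` instead of relying on row order. The toy values are made up for illustration. A zero baseline count produces `Inf`, or `NaN` for 0/0, and this sketch sets those values to `NA`.

```r
# Toy data: counts for the query of interest and for a baseline query
# (baseline rows deliberately in a different order)
tweetcounts <- data.frame(
  start = c("2019-12-27T00:00:00.000Z", "2019-12-28T00:00:00.000Z",
            "2019-12-29T00:00:00.000Z"),
  tweet_count = c(4, 6, 1)
)
baselinecounts <- data.frame(
  start = c("2019-12-29T00:00:00.000Z", "2019-12-27T00:00:00.000Z",
            "2019-12-28T00:00:00.000Z"),
  tweet_count = c(0, 8, 3)
)

# Match bins on their start time; the baseline count column gets a suffix
merged <- merge(tweetcounts, baselinecounts, by = "start",
                suffixes = c("", "_baseline"))

merged$normalized_count <- merged$tweet_count / merged$tweet_count_baseline
# A zero baseline gives Inf (or NaN for 0/0); treat these as missing
merged$normalized_count[!is.finite(merged$normalized_count)] <- NA

merged
```

By default `merge()` keeps only the bins present in both data frames and sorts the result by `start`.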