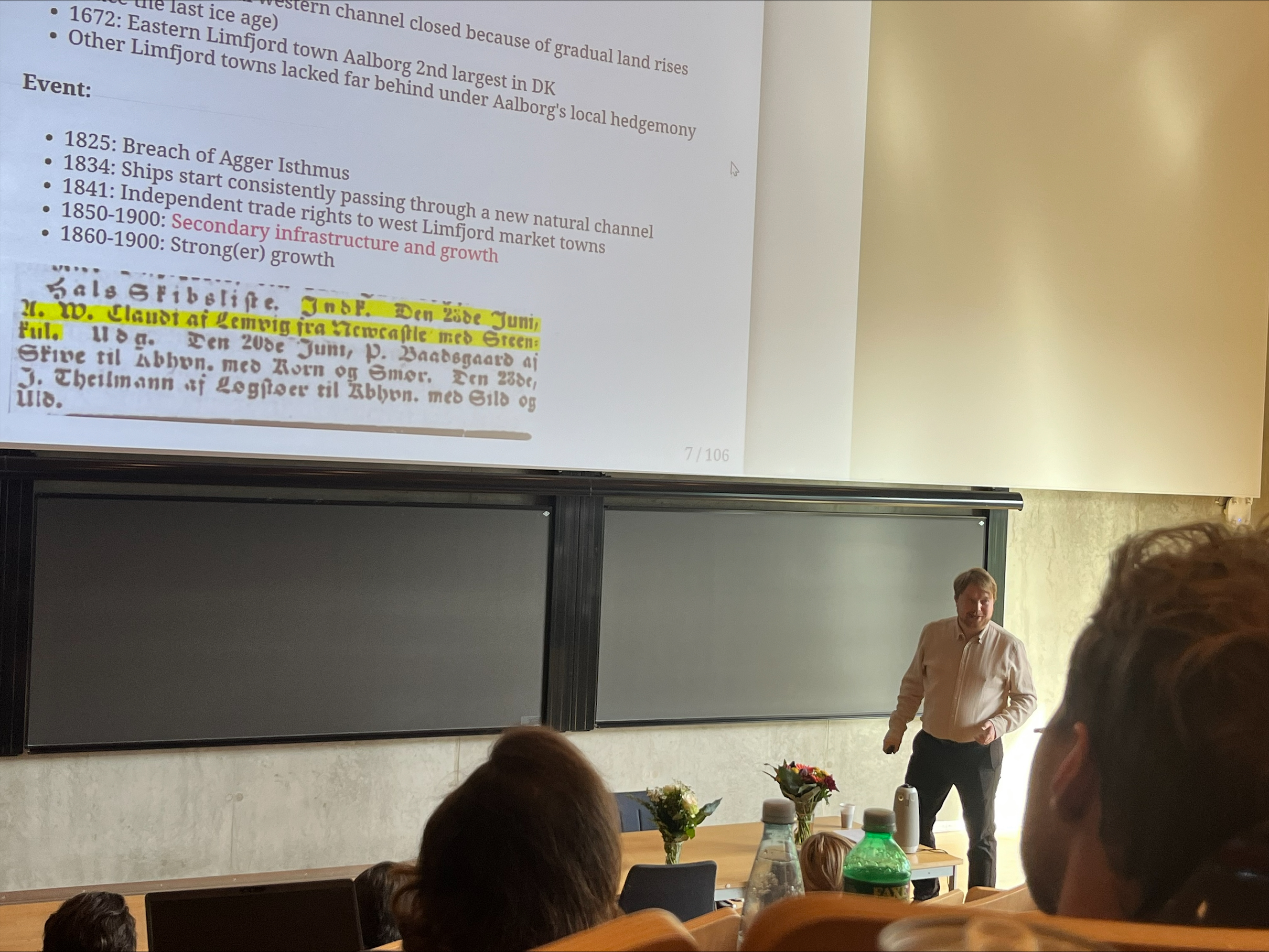

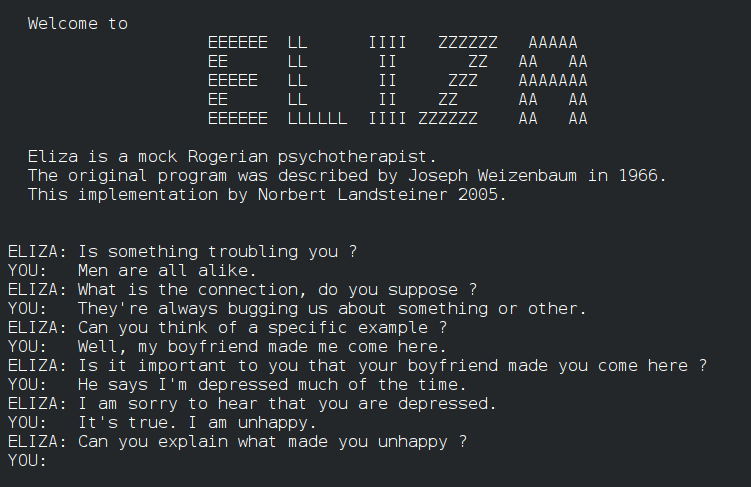

class: center, inverse, middle <style type="text/css"> .pull-left { float: left; width: 44%; } .pull-right { float: right; width: 44%; } .pull-right ~ p { clear: both; } .pull-left-wide { float: left; width: 66%; } .pull-right-wide { float: right; width: 66%; } .pull-right-wide ~ p { clear: both; } .pull-left-narrow { float: left; width: 30%; } .pull-right-narrow { float: right; width: 30%; } .tiny123 { font-size: 0.40em; } .small123 { font-size: 0.80em; } .large123 { font-size: 2em; } .red { color: red } .orange { color: orange } .green { color: green } </style> # News and Market Sentiment Analytics ## Lecture 1: Introduction ### Christian Vedel,<br>Department of Economics ### Email: [christian-vs@sam.sdu.dk](christian-vs@sam.sdu.dk) ### Updated 2025-10-24 .footnote[ .left[ .small123[ *Please beware. I work on these slides until the last minute before the lecture and push most changes along the way. Until the actual lecture, this is just a draft* ] ] ] --- # Today's lecture .pull-left[ - Course overview - The Efficient Market Hypothesis - How did we get to ChatGPT? (And what are the implications) - Expectations ] .pull-right[  ] --- # Motivaiton for the course .pull-left[ - *It's all about information* - Text is data. Data is text. - Applications: Business, Finance, Research, Software Development, Psychology, Linguistics, Business Intelligence, Policy Evaluation - In a world of LLMs you want to understand what is happening behind the fancy interface - The techniques live inside crazy sci-fi technologies, that you can learn. E.g. [literal mind reading](https://www.nature.com/articles/s41593-023-01304-9) ] .pull-right[  *Counter-example to what we will be doing* *[[xkcd.com/1838]](xkcd.com/1838)* ] --- # About me - Christian Vedel .pull-left[ - Economic Historian - freshly minted PhD (2023) - Research at the intersection between machine learning and economic history - A genuine interest in language, linguistics and etymology + All though you will find my texts riddled with typos and grammatical errors - Write me emails + .orange[christian-vs@sam.sdu.dk] - We can make office hours if you want it. - Third time teaching this course. But most of it completely changed from last year. Most of this course is adaptable. [Who are you? https://forms.gle/5Dk1pkCQdd4XYmgW8](https://forms.gle/5Dk1pkCQdd4XYmgW8) ] .pull-right[  .small123[*PhD defence, 2023-09-25*] ] --- # What to expect .small123[ - **Structure of the lectures** + 45 min. of theory + followup + 45 min. of practical examples + 45 min. of practical coding challenge - **Book:** + We will use 'PA2021' - Patel, A. A., & Arasanipalai, A. U. (2021). _Applied natural language processing in the enterprise_. O'Reilly Media. https://www.oreilly.com/library/view/applied-natural-language/9781492062561/ - **Exam:** + One week project in December (?) - **Course content:** + Low level NLP tools + applications + Transformers `\(\rightarrow\)` Basics `\(\rightarrow\)` Transformers - **Course plan:** + Continuously updated - **Slides:** + The night before the lecture + Slides do not cover everything I say. Please bring a notepad and a pen. - **Course description:** + https://odin.sdu.dk/sitecore/index.php?a=fagbesk&id=156413&lang=en + Course plan + [GitHub](https://github.com/christianvedels/News_and_Market_Sentiment_Analytics) ] --- # Preperation .pull-left[ - Read other literature assigned. - Read assigned PA2021 chapter. - Make sure you understand what is happening. - Please go off tangents. Tangents are the best - if not for this specific course. **Coding challenge:** - Will be challenging - Mistakes are great - Make sure that you understand and can run the solution - **Random selection** ] .pull-right[  ] --- # Efficient Market Hypothesis (EMH) .pull-left[ *If you can buy cheaply something which is more valuable than its price, you can profit (arbitrage). But ...* 1. We all have access to the same information 2. Everyone is interested in maximum profits 3. Everyone buys the undervalued assets 4. Prices increases until the advantages is gone ] .pull-right[  ### Implications: - If any 'whole' in the market occurs it is closed within milliseconds - We cannot invest better than anyone else - Investing in what a chicken poops on is better than listening to an 'expert' ] --- # Information Extraction in Financial Markets .pull-left[ - The NLP promise is to be able to extract information - News about firms changes the expected returns on assets - [NVIDIA News](https://youtu.be/U0dHDr0WFmQ?si=EKAQgBiTekQhxl0u) - If the expected returns on an asset increases or decreases there is an opportunity for arbitrage if prices have not changed - NLP tools potentially allow us to be ahead - EMH dissallows open source implementations: You need to improve on the methods you get here - **Timing is key** ] .pull-right[  ] --- class: inverse, middle, center # How did we get to ChatGPT? --- # The promise of AI .pull-left[ - The idea of the mechanic 'nightingale' ([HC] Andersen, 1844) + **Idea:** The mechanic version can never be as good because it is mechanic + Reaches far back e.g. Descartes (1637) writes about automata in 'Discourse and Methods' - Can machines think? The idea of the Turing test (1950/60s) + If machines can think, they will respond convincingly in conversation ] .pull-right[  *Generated in beta.dreamstudio.ai* ] --- # Early rule-based systems .pull-left[ - We can model behaviour by *if then* statements - ELIZA (Weizenbaum, 1966): + The earliest convincing chatbot + Looks for keywords and otherwise writes something generic or repeats something from earlier - 1980's was the peak of rule based systems + Based on linguistic theory + Grammar + Morphology + Semantics ] .pull-right[  *ELIZA Conversation (Wikimedia Commons)*  *Semantic net* ] --- # The philosophical/linguistic problem .pull-left[ - Can we distinguish reasoning from immitation? + Chinese room problem (Seale, 1980) - What is the nature of language? + Innateness vs. Empiricism (Chomsky vs. Vienna circle*) + Innateness `\(\rightarrow\)` rule-based NLP + Empiricism `\(\rightarrow\)` 'empirical' (learning) methods - ChatGPT is a big score for the Empiricist hypothesis ] .footnote[ \* E.g. (of names you might know) Gödel, Popper, Reichenbach, Wittgenstein [(Wikipedia)](https://en.wikipedia.org/wiki/Vienna_Circle#Overview_of_the_members_of_the_Vienna_Circle) ] .pull-right[  ] --- # 90s computer power - 60s maths .pull-left[ - **Empiricist problem**: Statistics alone can only predict - not understand structure. Mathematical limitation (Pearl, 2019) - But we can phrase language problems as predictions problems - Black box circumvents innateness - We just need to estimate: `$$P(word|\{text\},\theta)$$` - E.g. "He is a [word]" - Choose `\(\theta\)` to minimize predicition errors - Could be based on e.g. a [universal function approximator](https://en.wikipedia.org/wiki/Universal_approximation_theorem) like a neural network ] .pull-right[  .small123[*Crazy realistic 90s dancing baby*] ] --- # History of Neural Networks for NLP .pull-left-wide[ - **'Perceptron'** neural network (Rosenblatt, .orange[1958]) - **Long-Short-term memory** remembers and forgets previous words and letters (Hochreiter, Schmidhuber, .orange[1997]) - A rule-based trick: **Word embeddings:** Queens, Kings, men, women (Firth, .orange[1957] - But applied from .orange[2000/2010s]; Roweis & Saul, .orange[2000]) - **Tranformers**: 'Attention Is All You Need' (Vaswani, .orange[2017]) - **Masked models**: E.g. BERT (Devlin et al, .orange[2019]) + "He is a [MASK] dog." `\(\rightarrow\)` "He is a [smelly] dog." - **GPT**: Radford & Narasimhan (.orange[2018]): What if we make models really large? E.g. 175 billion parameters and train it on 499 Billion tokens (GPT-3) - **What is a good answer?**: ChatGPT etc. (.orange[2022]) trains a sepperate neural network to tell good from bad answers - reinforcement learning. - **Huggingface**: Sharing of various transformer models. ] .pull-right-narrow[  ] --- class: middle # Three types of NLP 1. **Structural, rules-based:** *ELIZA* + Still has supprisingly many uses. E.g. regex but also generally in financial applications where time is important 2. **Statistical, structural-inspired:** *Latent Dirichlet Allocation* + What any NLP/ML class was about ~5 years ago. What I have learned + Worth knowing because there is *so* much of it out there 3. **Statistical, black box:** *Transformer models* + What we will focus on for the most part --- # Open questions .pull-left[ - Do language models think? - What is consciousness? - Should LLMs be allowed for exams? + (Please use it for this one!) + [Link to SDU's policy](https://sdunet.dk/en/undervisning-og-eksamen/nyheder/2024/0628_gai) **What do you think?** ] .pull-right[ **Evidence of structural learning**  .small123[ *Figure 1 from [Gurnee & Tegmark (2023)](https://arxiv.org/abs/2310.02207)* ] ] --- # Course plan .small123[*What do you think?*] .small123[ | Date | Topic | | ---------- | ----------------------------- | | 2025-10-24 | Introduction | | 2025-MM-DD | Text wrangling | | 2025-MM-DD | Classification | | 2025-MM-DD | Tokenization and Embeddings | | 2025-MM-DD | Causality | | 2025-MM-DD | Topic of your choice | | 2025-MM-DD | Sentiment analysis using RAGs | | 2025-MM-DD | Exam workshop | ] --- class: inverse, middle, center # Miscellaneous --- # Setup .pull-left[ - `spaCy` and `transformers` - I use VS Code - but feel free to use something else #### Conda environment ``` r conda create --name sentimentF24 conda activate sentimentF24 conda install numpy matplotlib pandas seaborn requests conda install spyder ``` ] .pull-right[ #### spaCy Download everything with the following python code ``` r conda install -c conda-forge spacy conda install -c conda-forge cupy python -m spacy download en_core_web_sm ``` #### Install pytorch to run on cuda 11.8* ``` r conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia pip install transformers ``` ] .footnote[ .small123[ *Without a GPU? See https://pytorch.org/get-started/locally/ ] ] --- # How to deal with paths .pull-left-narrow[ - We want to write code which runs out of the box on any system - We don't want to edit long paths. We want it to work. ] -- .pull-right-wide[ .small123[ .red[**Bad:**] ``` python pd.read_csv('C:/Users/Christian/Dropbox/Teaching/News_and_Market_Sentiment_Analytics/2024/News_and_Market_Sentiment_Analytics/Lecture_2_-_Lexical_resources/Data/Novo_Nordisk_News.csv') ``` .green[**Good:**] ``` python pd.read_csv('Data/Novo_Nordisk_News.csv') ``` ] ] --- ### Solution? -- 1. Save scripts to relevant folder 2. Run things from main ``` python import pandas as pd def some_function(x): x + 1 def some_other_function(x): x + 2 if __name__ == "__main__": df = pd.read_csv('Data/Novo_Nordisk_News.csv') df = some_function(df) df = some_other_function(df) ``` --- class: middle # About coding challenges .pull-left-wide[ - Meant to get your hands dirty with practical examples - Meant to challenge a bit - Presentation of solution to coding challenge: + Everyone presents at least once + Random pick* at the beginning of each lecture + Just brief presentations: Here is what I did, how I did it + here is what I had trouble with ] .footnote[ `\(^*\)`*Don't worry, you can opt out each time by writing me an email or just letting me know in the beginning of the lecture* ] --- # Before next time .pull-left[ - Read [Text Wrangling with Python](https://blog.devgenius.io/text-wrangling-with-python-a-comprehensive-guide-to-nlp-and-nltk-f7553e713291) - Watch ['The Zipf Mystery'](https://youtu.be/fCn8zs912OE?si=xVMA63kt9M99Qvjx) ] .pull-right[  ] --- # Resources - [Natural Language Processing with spaCy & Python - Course for Beginners](https://www.youtube.com/watch?v=dIUTsFT2MeQ) - [Natural Language Processing With Python and **NLTK**](https://youtu.be/FLZvOKSCkxY?si=6ZgFsh3P551EWVRC) - [Complete Natural Language Processing (NLP) Tutorial in Python! (with examples)](https://youtu.be/M7SWr5xObkA?si=b40Uw97oX5Epy6vf) - **Do you want a quick and dirty GitHub intro?** ### Project directory - Think about how you structure your directory for this course (and in general) - Examples: [OccCANINE](https://github.com/christianvedels/OccCANINE), [This course](https://github.com/christianvedels/News_and_Market_Sentiment_Analytics/) - *More advanced approaches* + [Microsoft Team Data Science Project](https://learn.microsoft.com/en-us/azure/architecture/data-science-process/overview), [Cookiecutter](https://drivendata.github.io/cookiecutter-data-science/), [Cookiecutter youtube video](https://youtu.be/5VjuG5lliYU?si=oci0h0gNiTWciQ_I) --- class: inverse, middle # Coding challenge: ## ['Skipping ahead to the end'](https://github.com/christianvedels/News_and_Market_Sentiment_Analytics/blob/main/Lecture%201%20-%20Introduction/Coding_challenge1.md) --- ## References (1/2) .small123[ Andersen, H. C. (1844). The Nightingale. https://www.hcandersen-homepage.dk/?page_id=2257 Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota. Association for Computational Linguistics. Descartes, R. (1637). Discourse on the Method of Rightly Conducting One's Reason and of Seeking Truth in the Sciences (J. Veitch, Trans.). EBook-No. 59. https://www.gutenberg.org/ebooks/59 Firth, J.R. (1957). "A synopsis of linguistic theory 1930–1955". Studies in Linguistic Analysis: 1–32. Reprinted in F.R. Palmer, ed. (1968). Selected Papers of J.R. Firth 1952–1959. London: Longman. Gurnee, W., & Tegmark, M. (2023). Language Models Represent Space and Time. https://arxiv.org/abs/2310.02207 Pearl, J. (2019). The seven tools of causal inference, with reflections on machine learning. Communications of the ACM, 62(3), 54–60. https://doi.org/10.1145/3241036 Radford, A., & Narasimhan, K. (2018). Improving Language Understanding by Generative Pre-Training. https://api.semanticscholar.org/CorpusID:49313245 Rosenblatt, F. (1958). The perceptron: A probabilistic model for information storage and organization in the brain. Psychological Review, 65(6), 386–408. https://doi.org/10.1037/h0042519 ] --- ## References (2/2) .small123[ Roweis, S. T., Saul, L. K. (2000). "Nonlinear Dimensionality Reduction by Locally Linear Embedding". Science, 290(5500), 2323–6. https://doi.org/10.1126/science.290.5500.2323 Searle, J. (1980), "Minds, Brains and Programs", Behavioral and Brain Sciences, 3(3), 417–457. https://doi.org/10.1017/S0140525X00005756 Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention Is All You Need. https://arxiv.org/abs/1706.03762 Weizenbaum, J. (1966). ELIZA—a Computer Program for the Study of Natural Language Communication between Man and Machine. Commun. ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168 ]