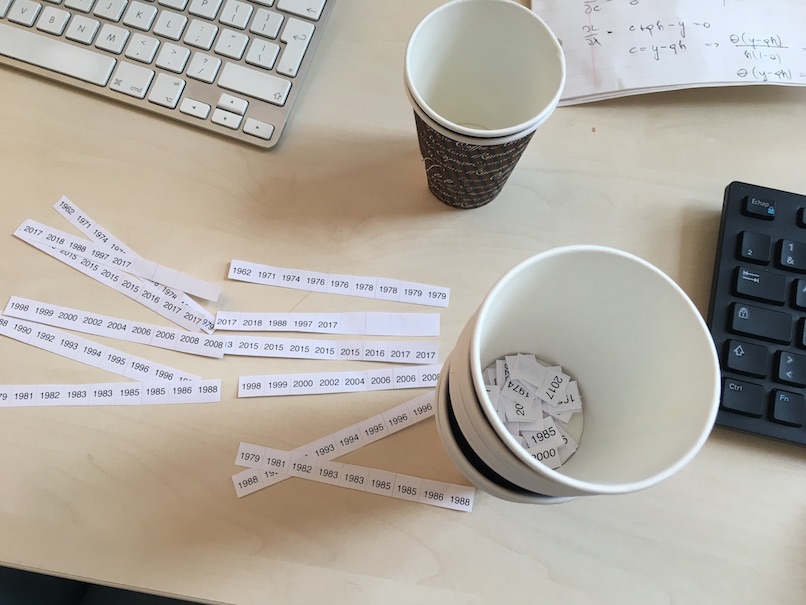

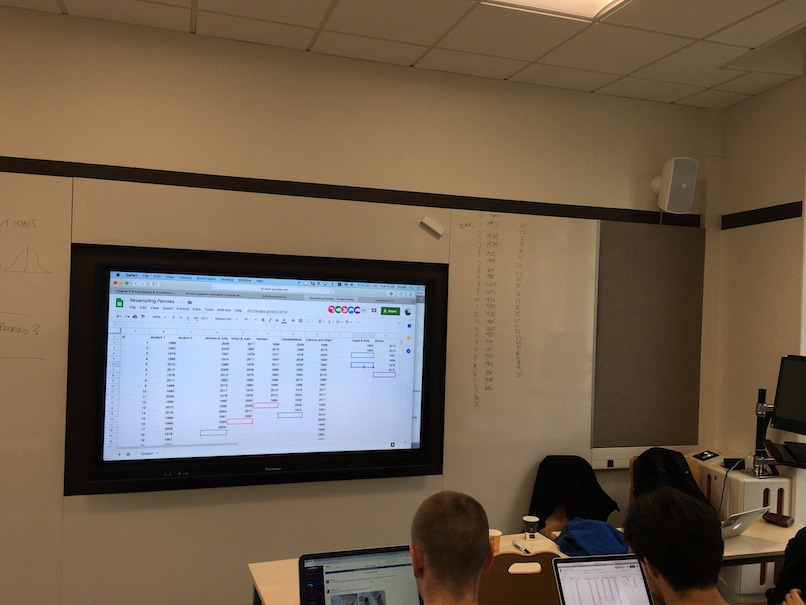

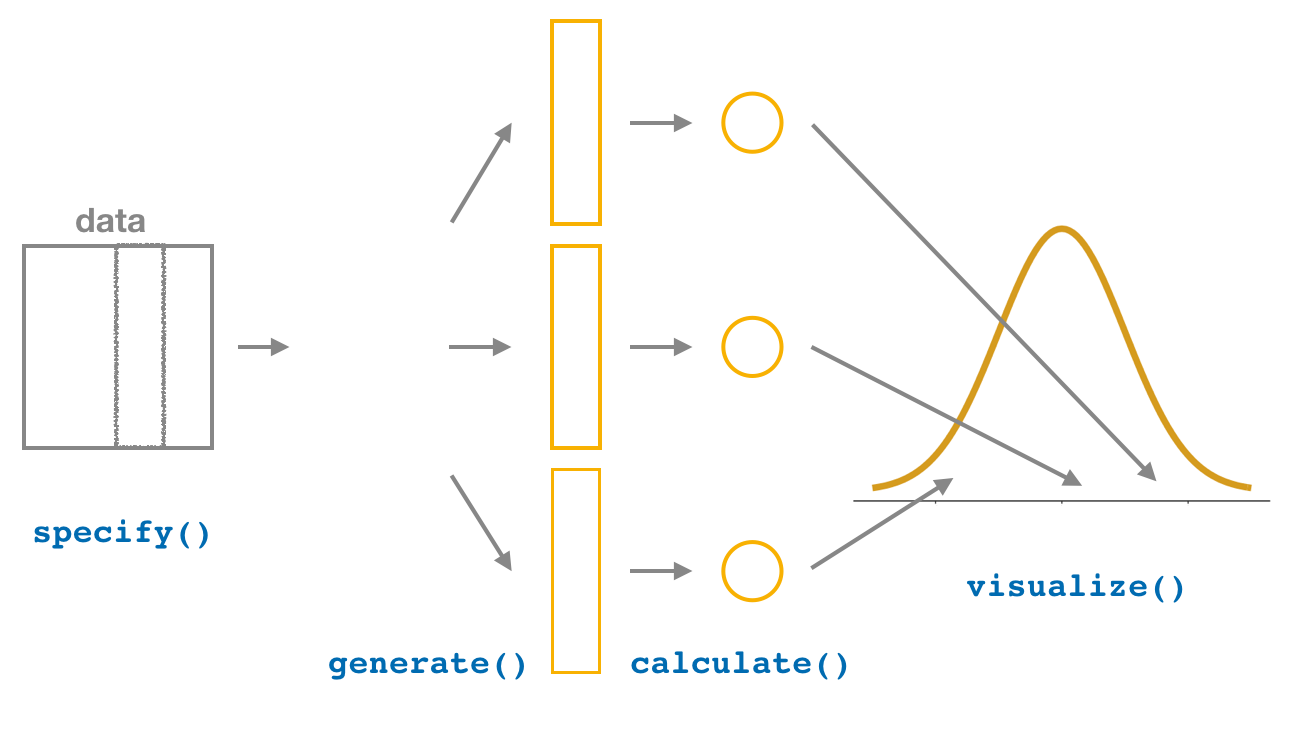

class: center, middle, inverse, title-slide # ScPoEconometrics ## Confidence Intervals and The Bootstrap ### Florian Oswald ### SciencesPo Paris </br> 2019-10-23 --- layout: true <div class="my-footer"><img src="../img/logo/ScPo-shield.png" style="height: 60px;"/></div> --- # Where Did We Stop Last Week? .pull-left[ * Last week we learned about *sampling distributions* * We took repeated samples from a population (Fusilli or plastic balls) and computed an **estimate** of the sample proportion `\(\hat{p}\)` over and over again * We took 1000 (!) samples and looked how the sampling distribution evolved as we increased sample sizes from `\(n=25\)` to `\(n=100\)`. * Our estimates became *more precise* as `\(n\)` increased. ] -- .pull-right[ * We introduced notation: * population size `\(N\)`, * *point estimate* (like `\(\hat{p}\)`), * standard error *of* an estimate * We said *random sampling produces **unbiased** estimates*. * Our sample statistics provided **good guesses** about the true population parameters of interest. ] --- background-image: url(https://media.giphy.com/media/hXG93399r19vi/giphy.gif) background-position: 10% 50% background-size: 500px # Reality Check .pull-right[ * Who could ever take **1000** samples for any study?! * Also, we **knew** the true population proportion of red balls/green Fusilli! * If we knew in real life, we wouldn't have to take samples altogether, would we? * So what on earth was all of this good for? Fun Only?! 😧. ] --- # Real Life Sampling .pull-left[ * In reality we only get to take **one** sample from the population. * Still we *know* now that there exists a *sampling distribution*. * In other words: we *know* that there is sampling variation. * With one sample only, how can we know what it looks like? ] -- .pull-right[ * There are two approaches: 1. Theory: Use mathematical formulas to derive the sampling distributions for our estimators under certain conditions. 1. Simulation. We can use the **Bootstrap** method to *reconstruct* the sampling distribution. * We'll focus on the bootstrap now and come back to the maths approach later. * But first: what is a *bootstrap*? ] --- background-image: url(https://upload.wikimedia.org/wikipedia/commons/9/9b/Zentralbibliothek_Solothurn_-_M%C3%BCnchhausen_zieht_sich_am_Zopf_aus_dem_Sumpf_-_a0400.tif) background-position: 12% 50% background-size: 400px # Baron von Münchhausen (not) .pull-right[ * The ethymology of *bootstrapping* is apparently [wrongly attributed](https://en.wikipedia.org/wiki/Bootstrapping) to the Baron of Münchhausen (who pulled himself out of the swamp by his own pigtail) * The idea is to *pull oneself up by one's own bootstraps*. * It sounds as if one had super-powers. * It's a simple but powerful idea. We just *pretend* that our sample **is** the population. Then we repeatedly *resample* from it. ] --- background-image: url(https://media.giphy.com/media/pPhyAv5t9V8djyRFJH/giphy.gif) background-position: 12% 50% background-size: 700px .right-thin[ <br> <br> <br> * 🤯 ] --- background-image: url(https://media.giphy.com/media/pPhyAv5t9V8djyRFJH/giphy.gif) background-position: 12% 50% background-size: 700px .right-thin[ <br> <br> <br> * 🤯 * Ok, let's do a little hands-on activity first. * You'll be fine. * 😎 ] --- # What's the Average Age of US Pennies? * [`Moderndive`](https://moderndive.com) went for us to the bank. * First, They got a roll of 50 pennies.  --- # What's the Average Age of US Pennies? .left-wide[ * Then, they recorded the year each penny was minted:  ] .thin-right[ * This data is in `moderndive` ```r library(moderndive) pennies_sample ``` ``` ## # A tibble: 50 x 2 ## ID year ## <int> <dbl> ## 1 1 2002 ## 2 2 1986 ## 3 3 2017 ## 4 4 1988 ## 5 5 2008 ## 6 6 1983 ## 7 7 2008 ## 8 8 1996 ## 9 9 2004 ## 10 10 2000 ## # … with 40 more rows ``` ] --- # Analyzing Pennies .pull-left[ * Let's assume this is a *representative, random* sample of all US pennies. * Let's look at it's distribution and compute the mean `year` in the sample. * Given randomness, that estimate should come close to the truth. ] -- .pull-right[ <img src="boostrap_files/figure-html/unnamed-chunk-2-1.svg" style="display: block; margin: auto;" /> ```r pennies_sample %>% summarize(mean_year = mean(year)) ``` ``` ## # A tibble: 1 x 1 ## mean_year ## <dbl> ## 1 1995. ``` ] --- # Pennies Sample Estimates .pull-left[ * So far, this is very similar to Fusilli or Balls, where we estimated `\(p\)` via `\(\hat{p}\)` * Here, we estimate the population average `\(\mu\)` via the **sample mean** `\(\bar{x}\)`. * Our best guess about `\(\mu\)` is thus about 1995. * But what about sampling variation? * If we went back to the bank for another roll of pennies, would we end up with the same number for `\(\bar{x}\)`? ] -- .pull-right[ * Last week we drew 1000 samples from our population of (virtual) balls. * That gave rise to a *distribution* of estimates `\(\hat{p}\)`. * We could go back to the bank 1000 times, but 🤔 * We can also use our *single* sample to learn about the sampling distribution. * It's called **resampling with replacement**. ] --- # Resampling Once .pull-left[ 1. Printed out equally-sized paper slips representing the 50 pennies in the sample. 1. Put them in a paper cup or similar 1. Shake cup to mix the slips, then take out a random slip. 1. Record year on slip. 1. **Put the slip back** into the hat! 1. Repeat 3.-4. for 49 times. ] -- .pull-right[  ] --- # Collect the Bootstrapped Sample * Let's put the recorded years into a `tibble`! ```r pennies_resample <- tibble( year = c(1976, 1962, 1976, 1983, 2017, 2015, 2015, 1962, 2016, 1976, 2006, 1997, 1988, 2015, 2015, 1988, 2016, 1978, 1979, 1997, 1974, 2013, 1978, 2015, 2008, 1982, 1986, 1979, 1981, 2004, 2000, 1995, 1999, 2006, 1979, 2015, 1979, 1998, 1981, 2015, 2000, 1999, 1988, 2017, 1992, 1997, 1990, 1988, 2006, 2000) ) ``` * And now let's look at both original and bootstrapped sample! --- # Comparing Resampled Data To Original Sample .left-wide[ <img src="boostrap_files/figure-html/unnamed-chunk-5-1.svg" style="display: block; margin: auto;" /> ] -- .right-thin[ Also, comparing both means: ```r pennies_sample %>% summarize(mean_year = mean(year)) ``` ``` ## # A tibble: 1 x 1 ## mean_year ## <dbl> ## 1 1995. ``` ```r pennies_resample %>% summarize(mean_year = mean(year)) ``` ``` ## # A tibble: 1 x 1 ## mean_year ## <dbl> ## 1 1995. ``` ] --- # Resampling 11 Times .pull-left[ * Let's do this together now! * Everybody produce one re-sample of 50 draws from their set of paper slips. * Don't forget to **replace** the slip and mix! * We'll collect data via this [shared google sheet](https://docs.google.com/spreadsheets/d/1cBefpVyP3q2SortQD3TJ_XFQ2kJ5iSxM6qjwTvqcooY/edit?usp=sharing) * In class, read this data with ```r url <- "https://docs.google.com/spreadsheets/d/e/2PACX-1vTb_D6Qczq_WLN-VmhG0-xTxh4Kb_e4A_5BPr2p9wtLN9UBB77yMDDKU3nGe34xVvadzjUQCt9jpWj_/pub?gid=0&single=true&output=csv" # pennies_our_sample <- readr::read_csv(url) ``` ] .pull-right[  ] --- # `moderndive` Resampled Data .pull-left[ * Our friends collected 35 Re-samples. * The data is collected in ```r pennies_resamples ``` ``` ## # A tibble: 1,750 x 3 ## replicate name year ## <int> <chr> <dbl> ## 1 1 A 1988 ## 2 1 A 2002 ## 3 1 A 2015 ## 4 1 A 1998 ## 5 1 A 1979 ## 6 1 A 1971 ## 7 1 A 1971 ## 8 1 A 2015 ## 9 1 A 1988 ## 10 1 A 1979 ## # … with 1,740 more rows ``` ] .pull-right[ * And we want to compute the average `year` from each sample: ```r resampled_means <- pennies_resamples %>% group_by(name) %>% summarize(mean_year = mean(year)) resampled_means ``` ``` ## # A tibble: 35 x 2 ## name mean_year ## <chr> <dbl> ## 1 A 1992. ## 2 AA 1996. ## 3 B 1996. ## 4 BB 1992. ## 5 C 1996. ## 6 CC 1996. ## 7 D 1997. ## 8 DD 1997. ## 9 E 1991. ## 10 EE 1998. ## # … with 25 more rows ``` ] --- # Visualizing Our (Manual) Bootstrap Distribution .left-wide[ <img src="boostrap_files/figure-html/unnamed-chunk-11-1.svg" style="display: block; margin: auto;" /> ] .right-thin[ ## Discuss: 1. Shape 2. Central Location of this! ] --- # Recap .pull-left[ * We demonstrated **bootstrapping**. We illustrated sampling distributions like in the previous chapter by *resampling* from a single sample, rather than from the population. * We obtained the **bootstrap distribution**, which approximates the true *sampling distribution* * Next up: ] -- .pull-right[ * We will again re-do the exercise virtually on your computers. * We will define the concept of **confidence interval** * We will introduce the `infer` package to perform *inference* in a `dplyr` pipeline. ] --- # Virtual Bootstrapping .pull-left[ * Let's start with a single resample. * That's like taking 50 paper slips out of our hat before. ```r virtual_resample <- pennies_sample %>% rep_sample_n(size = 50, replace = TRUE) ``` ] -- .pull-right[ * notice we set `replace = TRUE`! ```r virtual_resample ``` ``` ## # A tibble: 50 x 3 ## # Groups: replicate [1] ## replicate ID year ## <int> <int> <dbl> ## 1 1 41 1992 ## 2 1 23 1998 ## 3 1 38 1999 ## 4 1 38 1999 ## 5 1 19 1983 ## 6 1 50 2017 ## 7 1 13 2015 ## 8 1 39 2015 ## 9 1 26 1979 ## 10 1 17 2016 ## # … with 40 more rows ``` ] --- # Resampling 35 Times .pull-left[ First, compute 35 replicates: ```r virtual_resamples <- pennies_sample %>% rep_sample_n(size = 50, replace = TRUE, reps = 35) virtual_resamples ``` ``` ## # A tibble: 1,750 x 3 ## # Groups: replicate [35] ## replicate ID year ## <int> <int> <dbl> ## 1 1 36 2015 ## 2 1 18 1996 ## 3 1 34 1985 ## 4 1 23 1998 ## 5 1 31 2013 ## 6 1 47 1982 ## 7 1 28 2006 ## 8 1 20 1971 ## 9 1 6 1983 ## 10 1 44 2015 ## # … with 1,740 more rows ``` ] -- .pull-right[ Then compute the mean by `replicate`! ```r virtual_resampled_means <- virtual_resamples %>% group_by(replicate) %>% summarize(mean_year = mean(year)) virtual_resampled_means ``` ``` ## # A tibble: 35 x 2 ## replicate mean_year ## <int> <dbl> ## 1 1 1993. ## 2 2 1996. ## 3 3 1998. ## 4 4 1996. ## 5 5 1993. ## 6 6 1998. ## 7 7 1997. ## 8 8 1995. ## 9 9 1994. ## 10 10 1996. ## # … with 25 more rows ``` ] --- # Resampling 1000 times ```r virtual_resampled_means <- pennies_sample %>% rep_sample_n(size = 50, replace = TRUE, reps = 1000) %>% group_by(replicate) %>% summarize(mean_year = mean(year)) virtual_resampled_means ``` ``` ## # A tibble: 1,000 x 2 ## replicate mean_year ## <int> <dbl> ## 1 1 1995. ## 2 2 1996. ## 3 3 1996. ## 4 4 1996. ## 5 5 1995. ## 6 6 1996. ## 7 7 1997. ## 8 8 2000. ## 9 9 1996. ## 10 10 1998. ## # … with 990 more rows ``` --- # Distribution of 1000 Resamples <img src="boostrap_files/figure-html/unnamed-chunk-17-1.svg" style="display: block; margin: auto;" /> --- layout: false class: title-slide-section-red, middle # Confidence Intervals --- layout: true <div class="my-footer"><img src="../img/logo/ScPo-shield.png" style="height: 60px;"/></div> --- # Confidence Intervals (CIs) .pull-left[ * A CI gives us a *range* of plausible values for any given estimate. * In order to construct a CI, we need 1. an underlying *sampling distribution*, either boostrapped as here, or derived from theory. 2. to specify a **confidence level**, like 90% or 95% etc. Greater level implies wider CIs. * There are two methods: the **percentile method** and the **standard error method**. ] -- .pull-right[ 1. the **percentile method** cuts the bootstrap distribution below the 2.5% and 97.5% percentile (thus it selects the middle 95%). 2. The **standard error method** assumes that the sampling distribution is approximately normal, and uses a corresponding formula for the width of the CI. ] --- # Percentile Method .left-wide[ <img src="boostrap_files/figure-html/unnamed-chunk-18-1.svg" style="display: block; margin: auto;" /> ] .right-thin[ * We cut the boostrap distribution at the 2.5% and 97.5% quantiles * Those values are 1991.1985 and 1999.6805 ] --- # Standard Error Method .left-wide[ * We need mean `\(\bar{x}\)` and *standard error* (SE) of the mean: * If approximately normal, 95% of observations fall within `\(\pm 1.96\)` standard deviations of the mean. * We obtain the CIs as follows: `$$\begin{align} x \pm 1.96 \cdot SE &= (\bar{x} - 1.96 \cdot SE, \bar{x} + 1.96 \cdot SE)\\ &= (1995.44 - 1.96 \cdot 2.19, 1995.44 + 1.96 \cdot 2.19)\\ &= (1991.1476, 1999.7324) \end{align}$$` * Only valid, if sampling distribution is **approximately normal**! ] .right-thin[ ```r x_bar <- pennies_sample %>% summarize(mean_year = mean(year)) SE = virtual_resampled_means %>% summarize(SE = sd(mean_year)) SE ``` ``` ## # A tibble: 1 x 1 ## SE ## <dbl> ## 1 2.19 ``` * Remember that we called this the *standard error* (SE) of the mean. ] --- # Comparing Percentile and Standard Error Method .left-wide[ <img src="boostrap_files/figure-html/unnamed-chunk-20-1.svg" style="display: block; margin: auto;" /> ] .right-thin[ * The *percentile method* is based on the simulated bootstrap distribution. Shape of distribution is irrelevant. * The *standard error method* is based on *assuming* that the sampling dist. is approximately normal. ] --- # Constructing Confidence Intervals With `infer` .pull-left[ * The `infer` package is useful for **inference**. * It relies on the bootstrap just like we introduced it above. * A typical `infer` pipeline looks like this: ```r library(infer) pennies_sample %>% specify(response = year) %>% generate(reps = 35,type = "bootstrap") %>% calculate(stat = "mean") %>% visualize() ``` ] .pull-right[ <br> <br>  ] --- # Percentile Method with `infer` .pull-left[ * Let's start with creating a bootstrap distribution: ```r bootstrap_distribution <- pennies_sample %>% specify(response = year) %>% generate(reps = 1000, type = "bootstrap") %>% calculate(stat = "mean") bootstrap_distribution ``` ``` ## # A tibble: 1,000 x 2 ## replicate stat ## <int> <dbl> ## 1 1 1996. ## 2 2 1995. ## 3 3 1996. ## 4 4 1997. ## 5 5 1993. ## 6 6 1996. ## 7 7 1996. ## 8 8 1994. ## 9 9 1995. ## 10 10 1994. ## # … with 990 more rows ``` ] -- .pull-right[ * Then, let's visualize it! ```r visualize(bootstrap_distribution) ``` <img src="boostrap_files/figure-html/unnamed-chunk-23-1.svg" style="display: block; margin: auto;" /> ] --- # Percentile Method with `infer` .pull-left[ * We can easily get the CI like this: ```r percentile_ci <- bootstrap_distribution %>% get_confidence_interval(level = 0.95, type = "percentile") percentile_ci ``` ``` ## # A tibble: 1 x 2 ## `2.5%` `97.5%` ## <dbl> <dbl> ## 1 1991. 2000. ``` * Just set `type = "se"` for standard error method! * What's best is the visualization though! 🎉 ] -- .pull-right[ ```r visualize(bootstrap_distribution) + shade_confidence_interval(endpoints = percentile_ci) ``` <img src="boostrap_files/figure-html/unnamed-chunk-25-1.svg" style="display: block; margin: auto;" /> * Or shorter `shade_ci()`. ] --- # Interpreting CIs .pull-left[ * Now we want to know: is the true value contained in the CI? * Let's go back to the red/white balls in `bowl`. * Given this **is** the population, we can compute the true `\(p\)`: ```r bowl %>% summarize(p_red = mean(color == "red")) ``` ``` ## # A tibble: 1 x 1 ## p_red ## <dbl> ## 1 0.375 ``` ] -- .pull-right[ * In reality, again, we *don't know* `\(p\)`, and that is why we go through all this estimation trouble. * Let's take one of the samples taken from the bowl, `bowl_sample_1` ```r prop.table(table(bowl_sample_1$color)) ``` ``` ## ## red white ## 0.42 0.58 ``` * Is the true value `\(p\)` contained in a CI based on this particular `\(\hat{p}=0.42\)`? * Let's construct a boostrap sample and find out! ] --- # Constructing a CI based on `\(\hat{p}\)` .pull-left[ ```r sample_1_bootstrap <- bowl_sample_1 %>% # given `color` is a factor, tell what is success specify(response = color, success = "red") %>% # generate 1000 bootstrap samples generate(reps = 1000, type = "bootstrap") %>% # for each, calculate the proportion of successes calculate(stat = "prop") percentile_ci_1 <- sample_1_bootstrap %>% get_confidence_interval(level = 0.95, type = "percentile") percentile_ci_1 ``` ``` ## # A tibble: 1 x 2 ## `2.5%` `97.5%` ## <dbl> <dbl> ## 1 0.28 0.56 ``` * Here to visualize it: ```r sample_1_bootstrap %>% visualize(bins = 15) + shade_confidence_interval(endpoints = percentile_ci_1) + geom_vline(xintercept = 0.375, linetype = "dashed") ``` ] -- .pull-right[ <img src="boostrap_files/figure-html/unnamed-chunk-30-1.svg" style="display: block; margin: auto;" /> * Had we taken another sample, we would have obtained a different result. * Take 100 samples and see how well we do! ] --- # How many CIs Contain the True Values? .left-thin[ 1. Take 100 virtual samples from `bowl`. 2. Construct a 95%-CI from each. 3. We plot the true `\(p = 0.375\)`. 4. You see that 5 of them miss the true value. 5. Hence, a 95% confidence interval. ] -- .right-wide[ <img src="boostrap_files/figure-html/unnamed-chunk-31-1.svg" style="display: block; margin: auto;" /> ] --- class: title-slide-final, middle # THANKS To the amazing [moderndive](https://moderndive.com/) team! --- class: title-slide-final, middle background-image: url(../img/logo/ScPo-econ.png) background-size: 250px background-position: 9% 19% # END | | | | :--------------------------------------------------------------------------------------------------------- | :-------------------------------- | | <a href="mailto:florian.oswald@sciencespo.fr">.ScPored[<i class="fa fa-paper-plane fa-fw"></i>] | florian.oswald@sciencespo.fr | | <a href="https://github.com/ScPoEcon/ScPoEconometrics-Slides">.ScPored[<i class="fa fa-link fa-fw"></i>] | Slides | | <a href="https://scpoecon.github.io/ScPoEconometrics">.ScPored[<i class="fa fa-link fa-fw"></i>] | Book | | <a href="http://twitter.com/ScPoEcon">.ScPored[<i class="fa fa-twitter fa-fw"></i>] | @ScPoEcon | | <a href="http://github.com/ScPoEcon">.ScPored[<i class="fa fa-github fa-fw"></i>] | @ScPoEcon |