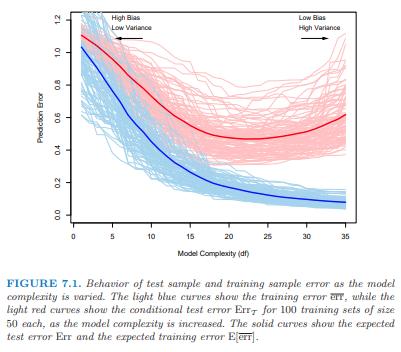

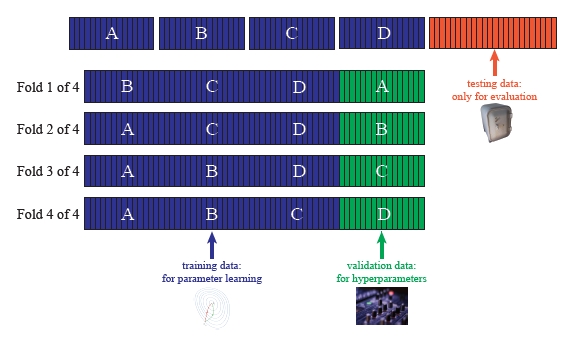

class: title-slide <br><br><br> # Lecture 19 ## Introduction to Machine Learning ### Tyler Ransom ### ECON 6343, University of Oklahoma --- # Attribution Today's material is based on the two main Statistical Learning textbooks: .pull-left[ .center[ <img src="islr_cover.png" width="146" height="90%" style="display: block; margin: auto;" /> .small[James, Witten, Hastie, and Tibshirani (2013)] ] ] .pull-right[ .center[ <img src="eslr_cover.png" width="149" height="90%" style="display: block; margin: auto;" /> .small[Hastie, Tibshirani, and Friedman (2009)] ] ] --- # Plan for the Day 1. What is Machine Learning (ML)? 2. Broad overview 3. How to do ML in Julia Next time, we'll discuss how ML is being used with econometrics --- # Machine Learning and Artifical Intelligence (AI) - .hi[Machine learning (ML):] Allowing computers to learn for themselves without explicitly being programmed - USPS: Computer to read handwriting on envelopes - Google: AlphaGo Zero, computer that defeated world champion Go player - Apple/Amazon/Microsoft: Siri, Alexa, Cortana voice assistants - .hi[Artificial intelligence (AI):] Constructing machines (robots, computers) to think and act like human beings - ML is a subset of AI --- # Prediction vs. Inference - Prediction and Inference are the two main reasons for analyzing data - .hi[Prediction:] We simply want to obtain `\(\hat{Y}\)` given the `\(X\)`'s - .hi[Inference:] Understanding how changing the `\(X\)`'s will change `\(Y\)` - Three types of inference, according to [Andrew Gelman](https://andrewgelman.com/2018/08/18/fallacy-excluded-middle-statistical-philosophy-edition/) 1. Generalizing from sample to population (statistical inference) 2. Generalizing from control state to treatment state (causal inference) 3. Generalizing from observed measurements to underlying constructs of interest - Philosophically, these can each be framed as prediction problems --- # Inference as Prediction - How can each type of inference be framed as a prediction problem? - Statistical inference: - Predict what `\(Y\)` (or `\(\beta\)`) would be in a different sample - Causal inference: - Predict what `\(Y\)` would be if we switched each person's treatment status - Measurement quality: - Predict what `\(Y\)` would be if we could better measure it (or the `\(X\)`'s) - e.g. personal rating in Harvard admissions (what does it measure?) --- # Vocabulary Econometrics and Machine Learning use different words for the same objects .pull-left[ .hi[Econometrics] - Dependent variable - Covariate - Observation - Objective function - Estimation ] .pull-right[ .hi[Machine Learning] - Target variable - Feature - Example (Instance) - Cost function (Loss function) - Training (Learning) ] --- # The goal of machine learning Machine learning is all about automating two hand-in-hand processes: 1. Model selection - What should the specification be? 2. Model validation - Does the model generalize to other (similar) contexts? - Want to automate these processes to maximize predictive accuracy - As defined by some cost function - This is .hi[different] than the goal of econometrics! (causal inference) --- # Training, validation and test data - In econometrics, we typically use the entire data set for estimation - In ML, we assess out-of-sample performance, so we should hold out some data - Some held-out data is used for validating the model, and some to test the model - Data used in estimation is referred to as .hi[training data] (60%-70% of sample) - Data we use to test performance is called .hi[test data] (10%-20%) - Data we use to cross-validate our model is called .hi[validation data] (10%-20%) - Division of training/validation/test sets should be .hi[random] --- # Model complexity and the bias/variance tradeoff - There is a trade-off between bias and variance `\begin{align*} \underbrace{\mathbb{E}\left(y-\hat{f}(x)\right)^2}_{\text{Expected }MSE\text{ in test set}} &= \underbrace{\mathbb{V}\left(\hat{f}(x)\right)}_{\text{Variance}} + \underbrace{\left[\text{Bias}\left(\hat{f}(x)\right)\right]^2}_{\text{Squared Bias}} + \underbrace{\mathbb{V}\left(\epsilon\right)}_{\text{Irreducible Error}} \end{align*}` - A model with high .hi[bias] is a poor approximation of reality - A model with high .hi[variance] is one that does not generalize well to a new data set - A model is .hi[overfit] if it has low bias and high variance - A model is .hi[underfit] if it has high bias and low variance --- # Visualizing the bias/variance tradeoff .center[] - Optimal model complexity is at the minimum of the red line - Irreducible error means that the red line can't go below a certain level - maybe at 0.3 in this picture? - Image source: Hastie, Tibshirani, and Friedman (2009) --- # Continuous vs. categorical target variables - When the target variable is continuous, we use `\(MSE\)` to measure fit - When it is categorical, we use .hi[accuracy] or similar measures - or some combination of specificity and sensitivity - goal is to not have a good "fit" by randomly guessing - so each potential metric penalizes random guessing --- # Different types of learning algorithms - Just like in econometrics, the most basic "learning algorithm" is OLS - Or, if `\(Y\)` is categorical, logistic regression - But, we know there are many other ways to estimate models - e.g. non-parametric, semi-parametric, Bayesian, ... --- # Supervised and unsupervised learning - .hi[Supervised learning:] we predict `\(Y\)` from a set of `\(X\)`'s - .hi[Unsupervised learning:] we try to group observations by their `\(X\)`'s - Most of econometrics is about supervised learning (i.e. estimate `\(\hat{\beta}\)`) - But there are some elements of unsupervised learning - Particularly with regards to detecting unobserved heterogeneity types - e.g. factor analysis (detect types based on a set of measurements) - e.g. the EM algorithm (detect types based on serial correlation of residuals) --- # Supervised learning algorithms Examples of supervised learning algorithms: - Tree models - Basically a fully non-parametric bin estimator - Can generalize to "forests" that average over many "trees" - Neural networks - Model the human brain's system of axons and dendrites - "Input layer" is the `\(X\)`'s, "Output layer" is `\(Y\)` - "Hidden layers" nonlinearly map the input and output layers - Mapping ends up looking like a logit of logit of logits --- # Supervised learning algorithms (continued) - Bayesian models - Bayes' rule can be thought of as a learning algorithm - Use it to update one's prior - Support Vector Machine (SVM) - Originally developed for classification - Tries to divide 0s and 1s by as large of a margin as possible - Based on representing examples as points in space - Generalization of the maximal margin classifier --- # Unsupervised learning algorithms - We covered the EM algorithm and PCA in previous lectures - `\(k\)`-means clustering - Attempts to group observations together based on the `\(X\)`'s - Choose cluster labels to minimize difference in `\(X\)`'s among labeled observations `\begin{align*} & \min_{C_1,\ldots,C_K} \sum_{k=1}^K \frac{1}{N_k}\sum_{i\in C_k}\sum_{\ell=1}^L\left(x_{i\ell}-\overline{x}_{\ell j}\right)^2 \\ \end{align*}` `\(N_k\)` is the number of observations in cluster `\(k\)`, `\(L\)` is number of `\(X\)`'s - Can choose other metrics besides Euclidean distance --- # Active learning algorithms - .hi[Active learning:] algorithm chooses the next example it wants a label for - Balances "exploration" and "exploitation" - Two common examples of active learning: 1. .hi[Reinforcement learning] powers the world-beating chess engines - These algorithms use dynamic programming methods - Use Conditional Choice Probabilities for computational gains 2. .hi[Recommender systems] power social networks, streaming services, etc. --- # Back to the bias-variance tradeoff - The Bias-Variance Tradeoff applies to supervised learning - How do we ensure that our model is not overly complex (i.e. overfit)? - The answer is to penalize complexity - .hi[Regularization] is the way we penalize complexity - .hi[Cross-validation] is the way that we choose the optimal level of regularization --- # How cross-validation works (Adams, 2018) .center[] - Blue is the data that we use to estimate the model's parameters - We randomly hold out `\(K\)` portions of this data one-at-a-time (Green boxes) - We assess the performance of the model in the Green data - This tells us the optimal complexity (by "hyperparameters" if CV is automated) --- # Types of regularization - Regularization is algorithm-specific - in tree models, complexity is the number of "leaves" on the tree - in linear models, complexity is the number of covariates - in neural networks, complexity is the number/mapping of hidden layers - in Bayesian approaches, priors act as regularization - Whatever our algorithm, we can tune the complexity parameters using CV --- # Regularization of linear-in-parameters models There are three main types of regularization for linear-in-parameters models: 1. `\(L0\)` regularization (Subset selection) 2. `\(L1\)` regularization (LASSO) 3. `\(L2\)` regularization (Ridge) --- # `\(L0\)` regularization - Suppose you have `\(L\)` `\(X\)`'s you may want to include in your model - Subset selection automatically chooses which ones to include - This is an automated version of what is traditionally done in econometrics - Can use Adjusted `\(R^2\)` to penalize complexity - or AIC, BIC, or a penalized SSR - Algorithm either starts from 0 `\(X\)`'s and moves forward - Or it starts from the full set of `\(X\)`'s and works backward - But this won't work if `\(L>N\)`! (i.e. there are more `\(X\)`'s than observations) --- # `\(L1\)` and `\(L2\)` regularization - Consider two different penalized versions of the OLS model: `\begin{align*} &\min_{\beta} \sum_i \left(y_i - x_i'\beta\right)^2 + \lambda\sum_k \vert\beta_k\vert & \text{(LASSO)} \\ &\min_{\beta} \sum_i \left(y_i - x_i'\beta\right)^2 + \lambda\sum_k \beta_k^2 & \text{(Ridge)} \end{align*}` - .hi[LASSO:] Least Absolute Shrinkage and Selection Operator - sets some `\(\beta\)`'s to be 0, others to be attenuated in magnitude - .hi[Ridge:] - sets each `\(\beta\)` to be attenuated in magnitude --- # `\(L1\)` and `\(L2\)` regularization (continued) - We want to choose `\(\lambda\)` to optimize the bias-variance tradeoff - We choose `\(\lambda\)` by `\(k\)`-fold Cross Validation - We can also employ a weighted average of `\(L1\)` and `\(L2\)`, known as .hi[elastic net] `\begin{align*} &\min_{\beta} \sum_i \left(y_i - x_i'\beta\right)^2 + \lambda_1\sum_k \vert\beta_k\vert + \lambda_2\sum_k \beta_k^2 \\ \end{align*}` where we choose `\((\lambda_1,\lambda_2)\)` by cross-validation - `\(L1\)` and `\(L2\)` are excellent for problems where `\(L>N\)` (more `\(X\)`'s than observations) - We can apply `\(L1\)` and `\(L2\)` to other problems (logit, neural network, etc.) --- # How to estimate ML models - R, Python and Julia all have excellent ML libraries - Each language also has a "meta" ML library - [`mlr3`](https://mlr3.mlr-org.com/) (R), [`scikit-learn`](https://scikit-learn.org/stable/) (Python), [`MLJ.jl`](https://alan-turing-institute.github.io/MLJ.jl/dev/) (Julia) - In these libraries, the user specifies `\(Y\)` and `\(X\)` - With only a slight change in code, can estimate a completely different ML model - e.g. go from a logit to a tree model with minimal code changes - e.g. choose values of tuning parameters by `\(k\)`-fold CV - I'll go through a quick example with `MLJ.jl` --- # `MLJ` example After installing the required packages: ``` julia using MLJ, Tables, DataFrames, MLJDecisionTreeInterface, MLJLinearModels models() ``` will list all of the models that can interface with `MLJ`: ``` julia 151-element Array{NamedTuple{(:name, :package_name, :is_supervised, :docstring, :hyperparameter_ranges, :hyperparameter_types, :hyperparameters, :implemented_methods, :is_pure_julia, :is_wrapper, :load_path, :package_license, :package_url, :package_uuid, :prediction_type, :supports_online, :supports_weights, :input_scitype, :target_scitype, :output_scitype),T} where T<:Tuple,1}: (name = ARDRegressor, package_name = ScikitLearn, ... ) (name = AdaBoostClassifier, package_name = ScikitLearn, ... ) (name = AdaBoostRegressor, package_name = ScikitLearn, ... ) ⋮ (name = XGBoostClassifier, package_name = XGBoost, ... ) (name = XGBoostCount, package_name = XGBoost, ... ) (name = XGBoostRegressor, package_name = XGBoost, ... ) ``` --- # `MLJ` example (continued) .scroll-box-18[ ``` julia # use house price data from US Census Bureau df = OpenML.load(574) |> DataFrame X = df[:,[:P1,:P5p1,:P6p2,:P11p4,:P14p9,:P15p1,:P15p3,:P16p2,:P18p2,:P27p4,:H2p2,:H8p2,:H10p1,:H13p1,:H18pA,:H40p4]] X = Tables.rowtable(X) y = log.(df.price) models(matching(X,y)) # declare a tree and lasso model tree_model = @load DecisionTreeRegressor pkg=DecisionTree lasso_model = @load LassoRegressor pkg=MLJLinearModels # initialize "machines" where results can be reported tree = machine(tree_model, X, y) lass = machine(lasso_model, X, y) # split into training and testing data train, test = partition(eachindex(y), 0.7, shuffle=true) # train the models MLJ.fit!(tree, rows=train) MLJ.fit!(lass, rows=train) # predict in test set yhat = MLJ.predict(tree, X[test,:]); yhat = MLJ.predict(lass, X[test,:]); # get RMSE across validation folds MLJ.evaluate(tree_model, X, y, resampling=CV(nfolds=6, shuffle=true), measure=rmse) MLJ.evaluate(lasso_model, X, y, resampling=CV(nfolds=6, shuffle=true), measure=rmse) ``` ] --- # References Aakvik, A., J. J. Heckman, and E. J. Vytlacil (2005). "Estimating Treatment Effects for Discrete Outcomes When Responses to Treatment Vary: An Application to Norwegian Vocational Rehabilitation Programs". In: _Journal of Econometrics_ 125.1, pp. 15-51. DOI: [10.1016/j.jeconom.2004.04.002](https://doi.org/10.1016%2Fj.jeconom.2004.04.002). Ackerberg, D. A. (2003). "Advertising, Learning, and Consumer Choice in Experience Good Markets: An Empirical Examination". In: _International Economic Review_ 44.3, pp. 1007-1040. DOI: [10.1111/1468-2354.t01-2-00098](https://doi.org/10.1111%2F1468-2354.t01-2-00098). Adams, R. P. (2018). _Model Selection and Cross Validation_. Lecture Notes. Princeton University. URL: [https://www.cs.princeton.edu/courses/archive/fall18/cos324/files/model-selection.pdf](https://www.cs.princeton.edu/courses/archive/fall18/cos324/files/model-selection.pdf). Ahlfeldt, G. M., S. J. Redding, D. M. Sturm, et al. (2015). "The Economics of Density: Evidence From the Berlin Wall". In: _Econometrica_ 83.6, pp. 2127-2189. DOI: [10.3982/ECTA10876](https://doi.org/10.3982%2FECTA10876). Altonji, J. G., T. E. Elder, and C. R. Taber (2005). "Selection on Observed and Unobserved Variables: Assessing the Effectiveness of Catholic Schools". In: _Journal of Political Economy_ 113.1, pp. 151-184. DOI: [10.1086/426036](https://doi.org/10.1086%2F426036). Altonji, J. G. and C. R. Pierret (2001). "Employer Learning and Statistical Discrimination". In: _Quarterly Journal of Economics_ 116.1, pp. 313-350. DOI: [10.1162/003355301556329](https://doi.org/10.1162%2F003355301556329). Angrist, J. D. and A. B. Krueger (1991). "Does Compulsory School Attendance Affect Schooling and Earnings?" In: _Quarterly Journal of Economics_ 106.4, pp. 979-1014. DOI: [10.2307/2937954](https://doi.org/10.2307%2F2937954). Angrist, J. D. and J. Pischke (2009). _Mostly Harmless Econometrics: An Empiricist's Companion_. Princeton University Press. ISBN: 0691120358. Arcidiacono, P. (2004). "Ability Sorting and the Returns to College Major". In: _Journal of Econometrics_ 121, pp. 343-375. DOI: [10.1016/j.jeconom.2003.10.010](https://doi.org/10.1016%2Fj.jeconom.2003.10.010). Arcidiacono, P., E. Aucejo, A. Maurel, et al. (2016). _College Attrition and the Dynamics of Information Revelation_. Working Paper. Duke University. URL: [https://tyleransom.github.io/research/CollegeDropout2016May31.pdf](https://tyleransom.github.io/research/CollegeDropout2016May31.pdf). Arcidiacono, P., E. Aucejo, A. Maurel, et al. (2025). "College Attrition and the Dynamics of Information Revelation". In: _Journal of Political Economy_ 133.1. DOI: [10.1086/732526](https://doi.org/10.1086%2F732526). Arcidiacono, P. and J. B. Jones (2003). "Finite Mixture Distributions, Sequential Likelihood and the EM Algorithm". In: _Econometrica_ 71.3, pp. 933-946. DOI: [10.1111/1468-0262.00431](https://doi.org/10.1111%2F1468-0262.00431). Arcidiacono, P., J. Kinsler, and T. Ransom (2022b). "Asian American Discrimination in Harvard Admissions". In: _European Economic Review_ 144, p. 104079. DOI: [10.1016/j.euroecorev.2022.104079](https://doi.org/10.1016%2Fj.euroecorev.2022.104079). Arcidiacono, P., J. Kinsler, and T. Ransom (2022a). "Legacy and Athlete Preferences at Harvard". In: _Journal of Labor Economics_ 40.1, pp. 133-156. DOI: [10.1086/713744](https://doi.org/10.1086%2F713744). Arcidiacono, P. and R. A. Miller (2011). "Conditional Choice Probability Estimation of Dynamic Discrete Choice Models With Unobserved Heterogeneity". In: _Econometrica_ 79.6, pp. 1823-1867. DOI: [10.3982/ECTA7743](https://doi.org/10.3982%2FECTA7743). Arroyo Marioli, F., F. Bullano, S. Kucinskas, et al. (2020). _Tracking R of COVID-19: A New Real-Time Estimation Using the Kalman Filter_. Working Paper. medRxiv. DOI: [10.1101/2020.04.19.20071886](https://doi.org/10.1101%2F2020.04.19.20071886). Ashworth, J., V. J. Hotz, A. Maurel, et al. (2021). "Changes across Cohorts in Wage Returns to Schooling and Early Work Experiences". In: _Journal of Labor Economics_ 39.4, pp. 931-964. DOI: [10.1086/711851](https://doi.org/10.1086%2F711851). Attanasio, O. P., C. Meghir, and A. Santiago (2011). "Education Choices in Mexico: Using a Structural Model and a Randomized Experiment to Evaluate PROGRESA". In: _Review of Economic Studies_ 79.1, pp. 37-66. DOI: [10.1093/restud/rdr015](https://doi.org/10.1093%2Frestud%2Frdr015). Aucejo, E. M. and J. James (2019). "Catching Up to Girls: Understanding the Gender Imbalance in Educational Attainment Within Race". In: _Journal of Applied Econometrics_ 34.4, pp. 502-525. DOI: [10.1002/jae.2699](https://doi.org/10.1002%2Fjae.2699). Baragatti, M., A. Grimaud, and D. Pommeret (2013). "Likelihood-free Parallel Tempering". In: _Statistics and Computing_ 23.4, pp. 535-549. DOI: [ 10.1007/s11222-012-9328-6](https://doi.org/%2010.1007%2Fs11222-012-9328-6). Bayer, P., R. McMillan, A. Murphy, et al. (2016). "A Dynamic Model of Demand for Houses and Neighborhoods". In: _Econometrica_ 84.3, pp. 893-942. DOI: [10.3982/ECTA10170](https://doi.org/10.3982%2FECTA10170). Begg, C. B. and R. Gray (1984). "Calculation of Polychotomous Logistic Regression Parameters Using Individualized Regressions". In: _Biometrika_ 71.1, pp. 11-18. DOI: [10.1093/biomet/71.1.11](https://doi.org/10.1093%2Fbiomet%2F71.1.11). Beggs, S. D., N. S. Cardell, and J. Hausman (1981). "Assessing the Potential Demand for Electric Cars". In: _Journal of Econometrics_ 17.1, pp. 1-19. DOI: [10.1016/0304-4076(81)90056-7](https://doi.org/10.1016%2F0304-4076%2881%2990056-7). Berry, S., J. Levinsohn, and A. Pakes (1995). "Automobile Prices in Market Equilibrium". In: _Econometrica_ 63.4, pp. 841-890. URL: [http://www.jstor.org/stable/2171802](http://www.jstor.org/stable/2171802). Bjorklund, A. and R. Moffitt (1987). "The Estimation of Wage Gains and Welfare Gains in Self-Selection Models". In: _Review of Economics and Statistics_ 69.1, pp. 42-49. DOI: [10.2307/1937899](https://doi.org/10.2307%2F1937899). Blass, A. A., S. Lach, and C. F. Manski (2010). "Using Elicited Choice Probabilities to Estimate Random Utility Models: Preferences for Electricity Reliability". In: _International Economic Review_ 51.2, pp. 421-440. DOI: [10.1111/j.1468-2354.2010.00586.x](https://doi.org/10.1111%2Fj.1468-2354.2010.00586.x). Blundell, R. (2010). "Comments on: ``Structural vs. Atheoretic Approaches to Econometrics'' by Michael Keane". In: _Journal of Econometrics_ 156.1, pp. 25-26. DOI: [10.1016/j.jeconom.2009.09.005](https://doi.org/10.1016%2Fj.jeconom.2009.09.005). Bonhomme, S. and J. Robin (2009). "Consistent Noisy Independent Component Analysis". In: _Journal of Econometrics_ 149.1, pp. 12-25. DOI: [10.1016/j.jeconom.2008.12.019](https://doi.org/10.1016%2Fj.jeconom.2008.12.019). Bonhomme, S. and J. Robin (2010). "Generalized Non-Parametric Deconvolution with an Application to Earnings Dynamics". In: _Review of Economic Studies_ 77.2, pp. 491-533. DOI: [10.1111/j.1467-937X.2009.00577.x](https://doi.org/10.1111%2Fj.1467-937X.2009.00577.x). Bresnahan, T. F., S. Stern, and M. Trajtenberg (1997). "Market Segmentation and the Sources of Rents from Innovation: Personal Computers in the Late 1980s". In: _The RAND Journal of Economics_ 28.0, pp. S17-S44. DOI: [10.2307/3087454](https://doi.org/10.2307%2F3087454). Brien, M. J., L. A. Lillard, and S. Stern (2006). "Cohabitation, Marriage, and Divorce in a Model of Match Quality". In: _International Economic Review_ 47.2, pp. 451-494. DOI: [10.1111/j.1468-2354.2006.00385.x](https://doi.org/10.1111%2Fj.1468-2354.2006.00385.x). Brinch, C. N., M. Mogstad, and M. Wiswall (2017). "Beyond LATE with a Discrete Instrument". In: _Journal of Political Economy_ 125.4, pp. 985-1039. DOI: [10.1086/692712](https://doi.org/10.1086%2F692712). Card, D. (1995). "Using Geographic Variation in College Proximity to Estimate the Return to Schooling". In: _Aspects of Labor Market Behaviour: Essays in Honour of John Vanderkamp_. Ed. by L. N. Christofides, E. K. Grant and R. Swidinsky. Toronto: University of Toronto Press. Cardell, N. S. (1997). "Variance Components Structures for the Extreme-Value and Logistic Distributions with Application to Models of Heterogeneity". In: _Econometric Theory_ 13.2, pp. 185-213. URL: [https://www.jstor.org/stable/3532724](https://www.jstor.org/stable/3532724). Carneiro, P., K. T. Hansen, and J. J. Heckman (2003). "Estimating Distributions of Treatment Effects with an Application to the Returns to Schooling and Measurement of the Effects of Uncertainty on College Choice". In: _International Economic Review_ 44.2, pp. 361-422. DOI: [10.1111/1468-2354.t01-1-00074](https://doi.org/10.1111%2F1468-2354.t01-1-00074). Carneiro, P., J. J. Heckman, and E. Vytlacil (2010). "Evaluating Marginal Policy Changes and the Average Effect of Treatment for Individuals at the Margin". In: _Econometrica_ 78.1, pp. 377-394. DOI: [10.3982/ECTA7089](https://doi.org/10.3982%2FECTA7089). Carneiro, P., J. J. Heckman, and E. J. Vytlacil (2011). "Estimating Marginal Returns to Education". In: _American Economic Review_ 101.6, pp. 2754-2781. DOI: [10.1257/aer.101.6.2754](https://doi.org/10.1257%2Faer.101.6.2754). Caucutt, E. M., L. Lochner, J. Mullins, et al. (2020). _Child Skill Production: Accounting for Parental and Market-Based Time and Goods Investments_. Working Paper 27838. National Bureau of Economic Research. DOI: [10.3386/w27838](https://doi.org/10.3386%2Fw27838). Chen, X., H. Hong, and D. Nekipelov (2011). "Nonlinear Models of Measurement Errors". In: _Journal of Economic Literature_ 49.4, pp. 901-937. DOI: [10.1257/jel.49.4.901](https://doi.org/10.1257%2Fjel.49.4.901). Chintagunta, P. K. (1992). "Estimating a Multinomial Probit Model of Brand Choice Using the Method of Simulated Moments". In: _Marketing Science_ 11.4, pp. 386-407. DOI: [10.1287/mksc.11.4.386](https://doi.org/10.1287%2Fmksc.11.4.386). Cinelli, C. and C. Hazlett (2020). "Making Sense of Sensitivity: Extending Omitted Variable Bias". In: _Journal of the Royal Statistical Society: Series B (Statistical Methodology)_ 82.1, pp. 39-67. DOI: [10.1111/rssb.12348](https://doi.org/10.1111%2Frssb.12348). Coate, P. and K. Mangum (2019). _Fast Locations and Slowing Labor Mobility_. Working Paper 19-49. Federal Reserve Bank of Philadelphia. Cunha, F. and J. Heckman (2007). "The Technology of Skill Formation". In: _American Economic Review_ 97.2, pp. 31-47. DOI: [10.1257/aer.97.2.31](https://doi.org/10.1257%2Faer.97.2.31). Cunha, F., J. J. Heckman, and S. M. Schennach (2010). "Estimating the Technology of Cognitive and Noncognitive Skill Formation". In: _Econometrica_ 78.3, pp. 883-931. DOI: [10.3982/ECTA6551](https://doi.org/10.3982%2FECTA6551). Cunningham, S. (2021). _Causal Inference: The Mixtape_. Yale University Press. URL: [https://www.scunning.com/causalinference_norap.pdf](https://www.scunning.com/causalinference_norap.pdf). Delavande, A. and C. F. Manski (2015). "Using Elicited Choice Probabilities in Hypothetical Elections to Study Decisions to Vote". In: _Electoral Studies_ 38, pp. 28-37. DOI: [10.1016/j.electstud.2015.01.006](https://doi.org/10.1016%2Fj.electstud.2015.01.006). Delavande, A. and B. Zafar (2019). "University Choice: The Role of Expected Earnings, Nonpecuniary Outcomes, and Financial Constraints". In: _Journal of Political Economy_ 127.5, pp. 2343-2393. DOI: [10.1086/701808](https://doi.org/10.1086%2F701808). Diegert, P., M. A. Masten, and A. Poirier (2025). _Assessing Omitted Variable Bias when the Controls are Endogenous_. arXiv. DOI: [10.48550/ARXIV.2206.02303](https://doi.org/10.48550%2FARXIV.2206.02303). Erdem, T. and M. P. Keane (1996). "Decision-Making under Uncertainty: Capturing Dynamic Brand Choice Processes in Turbulent Consumer Goods Markets". In: _Marketing Science_ 15.1, pp. 1-20. DOI: [10.1287/mksc.15.1.1](https://doi.org/10.1287%2Fmksc.15.1.1). Evans, R. W. (2018). _Simulated Method of Moments (SMM) Estimation_. QuantEcon Note. University of Chicago. URL: [https://notes.quantecon.org/submission/5b3db2ceb9eab00015b89f93](https://notes.quantecon.org/submission/5b3db2ceb9eab00015b89f93). Farber, H. S. and R. Gibbons (1996). "Learning and Wage Dynamics". In: _Quarterly Journal of Economics_ 111.4, pp. 1007-1047. DOI: [10.2307/2946706](https://doi.org/10.2307%2F2946706). Fu, C., N. Grau, and J. Rivera (2020). _Wandering Astray: Teenagers' Choices of Schooling and Crime_. Working Paper. University of Wisconsin-Madison. URL: [https://www.ssc.wisc.edu/~cfu/wander.pdf](https://www.ssc.wisc.edu/~cfu/wander.pdf). Geary, R. C. (1942). "Inherent Relations between Random Variables". In: _Proceedings of the Royal Irish Academy. Section A: Mathematical and Physical Sciences_ 47, pp. 63-76. URL: [http://www.jstor.org/stable/20488436](http://www.jstor.org/stable/20488436). Gillingham, K., F. Iskhakov, A. Munk-Nielsen, et al. (2022). "Equilibrium Trade in Automobiles". In: _Journal of Political Economy_. DOI: [10.1086/720463](https://doi.org/10.1086%2F720463). Haile, P. (2019). _``Structural vs. Reduced Form'' Language and Models in Empirical Economics_. Lecture Slides. Yale University. URL: [http://www.econ.yale.edu/~pah29/intro.pdf](http://www.econ.yale.edu/~pah29/intro.pdf). Haile, P. (2024). _Models, Measurement, and the Language of Empirical Economics_. Lecture Slides. Yale University. URL: [https://www.dropbox.com/s/8kwtwn30dyac18s/intro.pdf](https://www.dropbox.com/s/8kwtwn30dyac18s/intro.pdf). Hastie, T., R. Tibshirani, and J. Friedman (2009). _The Elements of Statistical Learning: Data Mining, Inference, Prediction_. 2nd. New York: Springer. URL: [https://web.stanford.edu/~hastie/Papers/ESLII.pdf](https://web.stanford.edu/~hastie/Papers/ESLII.pdf). Heckman, J. J. and J. A. Smith (1993). "Assessing the Case for Randomized Evaluation of Social Programs". In: _Measuring Labor Market Measures: Evaluating the Effects of Active Labour Market Policies_. Ed. by K. Jensen and P. K. Madsen. Copenhagen: Danish Ministry of Labor, pp. 35-96. Heckman, J. J., J. Smith, and N. Clements (1997). "Making the Most Out of Programme Evaluations and Social Experiments: Accounting for Heterogeneity in Programme Impacts". In: _Review of Economic Studies_ 64.4, pp. 487-535. URL: [http://www.jstor.org/stable/2971729](http://www.jstor.org/stable/2971729). Heckman, J. J., J. Stixrud, and S. Urzua (2006). "The Effects of Cognitive and Noncognitive Abilities on Labor Market Outcomes and Social Behavior". In: _Journal of Labor Economics_ 24.3, pp. 411-482. DOI: [10.1086/504455](https://doi.org/10.1086%2F504455). Heckman, J. J. and E. Vytlacil (2005). "Structural Equations, Treatment Effects, and Econometric Policy Evaluation1". In: _Econometrica_ 73.3, pp. 669-738. DOI: [10.1111/j.1468-0262.2005.00594.x](https://doi.org/10.1111%2Fj.1468-0262.2005.00594.x). Hotz, V. J. and R. A. Miller (1993). "Conditional Choice Probabilities and the Estimation of Dynamic Models". In: _The Review of Economic Studies_ 60.3, pp. 497-529. DOI: [10.2307/2298122](https://doi.org/10.2307%2F2298122). Huenermund, P. and E. Bareinboim (2019). _Causal Inference and Data-Fusion in Econometrics_. Working Paper. arXiv. URL: [https://arxiv.org/abs/1912.09104](https://arxiv.org/abs/1912.09104). Hurwicz, L. (1950). "Generalization of the Concept of Identification". In: _Statistical Inference in Dynamic Economic Models_. Hoboken, NJ: John Wiley and Sons, pp. 245-257. Imbens, G. W. and J. D. Angrist (1994). "Identification and Estimation of Local Average Treatment Effects". In: _Econometrica_ 62.2, pp. 467-475. DOI: [10.2307/2951620](https://doi.org/10.2307%2F2951620). Ishimaru, S. (2022). _Geographic Mobility of Youth and Spatial Gaps in Local College and Labor Market Opportunities_. Working Paper. Hitotsubashi University. James, G., D. Witten, T. Hastie, et al. (2013). _An Introduction to Statistical Learning with Applications in R_. New York: Springer. DOI: [10.1007/978-1-4614-7138-7](https://doi.org/10.1007%2F978-1-4614-7138-7). URL: [https://faculty.marshall.usc.edu/gareth-james/ISL/ISLR_Seventh_Printing.pdf](https://faculty.marshall.usc.edu/gareth-james/ISL/ISLR_Seventh_Printing.pdf). James, J. (2011). _Ability Matching and Occupational Choice_. Working Paper 11-25. Federal Reserve Bank of Cleveland. James, J. (2017). "MM Algorithm for General Mixed Multinomial Logit Models". In: _Journal of Applied Econometrics_ 32.4, pp. 841-857. DOI: [10.1002/jae.2532](https://doi.org/10.1002%2Fjae.2532). Jin, H. and H. Shen (2020). "Foreign Asset Accumulation Among Emerging Market Economies: A Case for Coordination". In: _Review of Economic Dynamics_ 35.1, pp. 54-73. DOI: [10.1016/j.red.2019.04.006](https://doi.org/10.1016%2Fj.red.2019.04.006). Keane, M. P. (2010). "Structural vs. Atheoretic Approaches to Econometrics". In: _Journal of Econometrics_ 156.1, pp. 3-20. DOI: [10.1016/j.jeconom.2009.09.003](https://doi.org/10.1016%2Fj.jeconom.2009.09.003). Keane, M. P. and K. I. Wolpin (1997). "The Career Decisions of Young Men". In: _Journal of Political Economy_ 105.3, pp. 473-522. DOI: [10.1086/262080](https://doi.org/10.1086%2F262080). Koopmans, T. C. and O. Reiersol (1950). "The Identification of Structural Characteristics". In: _The Annals of Mathematical Statistics_ 21.2, pp. 165-181. URL: [http://www.jstor.org/stable/2236899](http://www.jstor.org/stable/2236899). Kosar, G., T. Ransom, and W. van der Klaauw (2022). "Understanding Migration Aversion Using Elicited Counterfactual Choice Probabilities". In: _Journal of Econometrics_ 231.1, pp. 123-147. DOI: [10.1016/j.jeconom.2020.07.056](https://doi.org/10.1016%2Fj.jeconom.2020.07.056). Kotlarski, I. (1967). "On Characterizing the Gamma and the Normal Distribution". In: _Pacific Journal of Mathematics_ 20, pp. 69-76. Krauth, B. (2016). "Bounding a Linear Causal Effect Using Relative Correlation Restrictions". In: _Journal of Econometric Methods_ 5.1, pp. 117-141. DOI: [10.1515/jem-2013-0013](https://doi.org/10.1515%2Fjem-2013-0013). Lang, K. and M. D. Palacios (2018). _The Determinants of Teachers' Occupational Choice_. Working Paper 24883. National Bureau of Economic Research. DOI: [10.3386/w24883](https://doi.org/10.3386%2Fw24883). Lee, D. S., J. McCrary, M. J. Moreira, et al. (2020). _Valid t-ratio Inference for IV_. Working Paper. arXiv. URL: [https://arxiv.org/abs/2010.05058](https://arxiv.org/abs/2010.05058). Lewbel, A. (2019). "The Identification Zoo: Meanings of Identification in Econometrics". In: _Journal of Economic Literature_ 57.4, pp. 835-903. DOI: [10.1257/jel.20181361](https://doi.org/10.1257%2Fjel.20181361). Mahoney, N. (2022). "Principles for Combining Descriptive and Model-Based Analysis in Applied Microeconomics Research". In: _Journal of Economic Perspectives_ 36.3, pp. 211-22. DOI: [10.1257/jep.36.3.211](https://doi.org/10.1257%2Fjep.36.3.211). Mardia, K. V. (1970). "Measures of Multivariate Skewness and Kurtosis with Applications". In: _Biometrika_ 57.3, pp. 519-530. URL: [http://www.jstor.org/stable/2334770](http://www.jstor.org/stable/2334770). McFadden, D. (1978). "Modelling the Choice of Residential Location". In: _Spatial Interaction Theory and Planning Models_. Ed. by A. Karlqvist, L. Lundqvist, F. Snickers and J. W. Weibull. Amsterdam: North Holland, pp. 75-96. McFadden, D. (1989). "A Method of Simulated Moments for Estimation of Discrete Response Models Without Numerical Integration". In: _Econometrica_ 57.5, pp. 995-1026. DOI: [10.2307/1913621](https://doi.org/10.2307%2F1913621). URL: [http://www.jstor.org/stable/1913621](http://www.jstor.org/stable/1913621). Mellon, J. (2020). _Rain, Rain, Go Away: 137 Potential Exclusion-Restriction Violations for Studies Using Weather as an Instrumental Variable_. Working Paper. University of Manchester. URL: [https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3715610](https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3715610). Miller, R. A. (1984). "Job Matching and Occupational Choice". In: _Journal of Political Economy_ 92.6, pp. 1086-1120. DOI: [10.1086/261276](https://doi.org/10.1086%2F261276). Mincer, J. (1974). _Schooling, Experience and Earnings_. New York: Columbia University Press for National Bureau of Economic Research. Ost, B., W. Pan, and D. Webber (2018). "The Returns to College Persistence for Marginal Students: Regression Discontinuity Evidence from University Dismissal Policies". In: _Journal of Labor Economics_ 36.3, pp. 779-805. DOI: [10.1086/696204](https://doi.org/10.1086%2F696204). Oster, E. (2019). "Unobservable Selection and Coefficient Stability: Theory and Evidence". In: _Journal of Business & Economic Statistics_ 37.2, pp. 187-204. DOI: [10.1080/07350015.2016.1227711](https://doi.org/10.1080%2F07350015.2016.1227711). Pearl, J. (2012). "The Do-Calculus Revisited". In: _Proceedings of the Twenty-Eighth Conference on Uncertainty in Artificial Intelligence_. Ed. by N. de Freitas and K. Murphy. Corvallis, OR: AUAI Press, pp. 4-11. Pischke, S. (2007). _Lecture Notes on Measurement Error_. Lecture Notes. London School of Economics. URL: [http://econ.lse.ac.uk/staff/spischke/ec524/Merr_new.pdf](http://econ.lse.ac.uk/staff/spischke/ec524/Merr_new.pdf). Ransom, M. R. and T. Ransom (2018). "Do High School Sports Build or Reveal Character? Bounding Causal Estimates of Sports Participation". In: _Economics of Education Review_ 64, pp. 75-89. DOI: [10.1016/j.econedurev.2018.04.002](https://doi.org/10.1016%2Fj.econedurev.2018.04.002). Ransom, T. (2022). "Labor Market Frictions and Moving Costs of the Employed and Unemployed". In: _Journal of Human Resources_ 57.S, pp. S137-S166. DOI: [10.3368/jhr.monopsony.0219-10013R2](https://doi.org/10.3368%2Fjhr.monopsony.0219-10013R2). Reiersol, O. (1950). "Identifiability of a Linear Relation between Variables Which Are Subject to Error". In: _Econometrica_ 18.4, pp. 375-389. URL: [http://www.jstor.org/stable/1907835](http://www.jstor.org/stable/1907835). Robins, J. M. (1997). "Causal Inference from Complex Longitudinal Data". In: _Latent Variable Modeling and Applications to Causality_. Ed. by M. Berkane. New York: Springer, pp. 69-117. Robinson, P. M. (1988). "Root-N-Consistent Semiparametric Regression". In: _Econometrica_ 56.4, pp. 931-954. URL: [http://www.jstor.org/stable/1912705](http://www.jstor.org/stable/1912705). Rudik, I. (2020). "Optimal Climate Policy When Damages Are Unknown". In: _American Economic Journal: Economic Policy_ 12.2, pp. 340-373. DOI: [10.1257/pol.20160541](https://doi.org/10.1257%2Fpol.20160541). Rueschendorf, L. (1981). "Sharpness of Frechet-bounds". In: _Zeitschrift fur Wahrscheinlichkeitstheorie und Verwandte Gebiete_ 57.2, pp. 293-302. DOI: [10.1007/BF00535495](https://doi.org/10.1007%2FBF00535495). Rust, J. (1987). "Optimal Replacement of GMC Bus Engines: An Empirical Model of Harold Zurcher". In: _Econometrica_ 55.5, pp. 999-1033. URL: [http://www.jstor.org/stable/1911259](http://www.jstor.org/stable/1911259). Shalizi, C. R. (2019). _Advanced Data Analysis from an Elementary Point of View_. Cambridge University Press. URL: [http://www.stat.cmu.edu/~cshalizi/ADAfaEPoV/ADAfaEPoV.pdf](http://www.stat.cmu.edu/~cshalizi/ADAfaEPoV/ADAfaEPoV.pdf). Smith Jr., A. A. (2008). "Indirect Inference". In: _The New Palgrave Dictionary of Economics_. Ed. by S. N. Durlauf and L. E. Blume. Vol. 1-8. London: Palgrave Macmillan. DOI: [10.1007/978-1-349-58802-2](https://doi.org/10.1007%2F978-1-349-58802-2). URL: [http://www.econ.yale.edu/smith/palgrave7.pdf](http://www.econ.yale.edu/smith/palgrave7.pdf). Stinebrickner, R. and T. Stinebrickner (2014a). "Academic Performance and College Dropout: Using Longitudinal Expectations Data to Estimate a Learning Model". In: _Journal of Labor Economics_ 32.3, pp. 601-644. DOI: [10.1086/675308](https://doi.org/10.1086%2F675308). Stinebrickner, R. and T. R. Stinebrickner (2014b). "A Major in Science? Initial Beliefs and Final Outcomes for College Major and Dropout". In: _Review of Economic Studies_ 81.1, pp. 426-472. DOI: [10.1093/restud/rdt025](https://doi.org/10.1093%2Frestud%2Frdt025). Su, C. and K. L. Judd (2012). "Constrained Optimization Approaches to Estimation of Structural Models". In: _Econometrica_ 80.5, pp. 2213-2230. DOI: [10.3982/ECTA7925](https://doi.org/10.3982%2FECTA7925). Train, K. (2009). _Discrete Choice Methods with Simulation_. 2nd ed. Cambridge; New York: Cambridge University Press. ISBN: 9780521766555. Vytlacil, E. (2002). "Independence, Monotonicity, and Latent Index Models: An Equivalence Result". In: _Econometrica_ 70.1, pp. 331-341. DOI: [10.1111/1468-0262.00277](https://doi.org/10.1111%2F1468-0262.00277). Wiswall, M. and B. Zafar (2018). "Preference for the Workplace, Investment in Human Capital, and Gender". In: _Quarterly Journal of Economics_ 133.1, pp. 457-507. DOI: [10.1093/qje/qjx035](https://doi.org/10.1093%2Fqje%2Fqjx035). Young, A. (2020). _Consistency without Inference: Instrumental Variables in Practical Application_. Working Paper. London School of Economics.