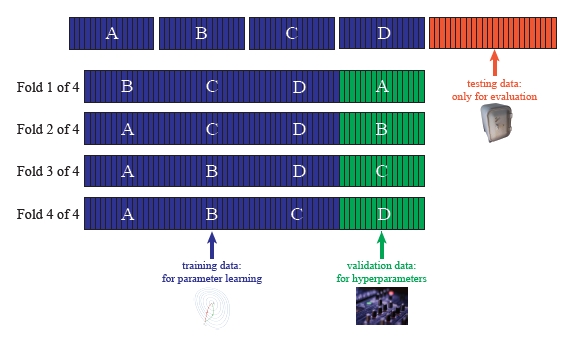

class: title-slide

<br><br><br>

# Lecture 10

## Model Fit, Validation and Counterfactual Simulations

### Tyler Ransom

### ECON 6343, University of Oklahoma

---

# Plan for the Day

1. Discuss how to assess model fit

2. Model validation

3. Counterfactual simulations

4. Go through examples from a couple of recent papers
    - Fu, Grau, and Rivera (2020)
    - Lang and Palacios (2018)

---

# Steps to producing a "structural" estimation

- We discussed the structural workflow in our 3rd class meeting

- Since that class meeting, we've focused mainly on estimation

- We also briefly talked about identification

- But there are still some steps we haven't covered

- Counterfactuals are where you actually answer your research question

- And before counterfactuals you need to show your model fits the data

---

# Model Fit

- Model fit basically consists of showing that your model can match the data

- This is because the counterfactuals rely solely on the model

- Most model fit is _ad hoc_: you can choose what to show

- Typically, you'll want to show that moments of the `\(Y\)` variables match
    - e.g. choice frequencies in the data match choice probabilities of the model

- But there may be other moments of interest, like dynamic choice transitions

---

# Example from Fu, Grau, and Rivera (2020)

- In this paper, the authors show the following model fit statistics:

<table>
 <thead>
  <tr>
   <th style="text-align:left;"> Variable </th>
   <th style="text-align:right;"> Data </th>
   <th style="text-align:right;"> Model </th>
  </tr>
 </thead>
<tbody>
  <tr>
   <td style="text-align:left;"> Ever arrested % </td>
   <td style="text-align:right;"> 5.60 </td>
   <td style="text-align:right;"> 5.10 </td>
  </tr>
  <tr>
   <td style="text-align:left;"> Always enrolled, 0 arrest % </td>
   <td style="text-align:right;"> 72.20 </td>
   <td style="text-align:right;"> 71.10 </td>
  </tr>
  <tr>
   <td style="text-align:left;"> GPA (standardized) </td>
   <td style="text-align:right;"> -0.39 </td>
   <td style="text-align:right;"> -0.43 </td>
  </tr>
  <tr>
   <td style="text-align:left;"> Retention % </td>
   <td style="text-align:right;"> 7.30 </td>
   <td style="text-align:right;"> 8.00 </td>
  </tr>
  <tr>
   <td style="text-align:left;"> Grade Completed by T </td>
   <td style="text-align:right;"> 11.10 </td>
   <td style="text-align:right;"> 11.20 </td>
  </tr>
</tbody>
</table>

- These results are for youth with parents who are not well educated
    - (This group is the most at risk of being arrested or dropping out of school)

- Model fit for other groups is similar

---

# How to do model fit

There are a couple of options for producing model fit statistics

1. Use the model assumptions and estimates to simulate a new dataset

2. Use the data and estimates to compute `\(\hat{Y}\)` and compare with `\(Y\)`

---

# Simulating a new dataset

- To simulate a new dataset, you simply draw the unobservables from the model

- Use any initial conditions from the data

- Then (if the model is dynamic) simply draw `\(Y\)` in `\(t=1\)`

- Then update the `\(X\)`'s in `\(t=2\)` and draw `\(Y\)` in `\(t=2\)`, ...

- If you used SMM to estimate the model, you should already have code for this

- Typically you want to repeat this process a few times
    - This is because you don't want model fit to be subject to simulation error

---

# Assessing model fit from the data and estimates

- A simpler option is to predict the `\(\hat{Y}\)` using the actual data

- This is identical to using a `predict()` function (like in PS6)

- However, this approach may not be possible in a model with key latent variables

- For example, unobserved types or other unobservables are not in the data

- In this case, you have no choice but to go the simulation route

---

# Model Validation

- Even if your model fits the data well, some may still be skeptical

- This is because, in most cases, you used your entire dataset in estimation

- If you do this, you open yourself up to the possibility of .hi[overfitting]

- .hi[Model validation] is a way of showing that your model is not overfit

- To do so, you show that the model reproduces key stats in a "holdout sample"

- This sample was "held out" of the estimation for purposes of validation

---

# Mini aside on machine learning

Machine learning is all about automating two hand-in-hand processes:

1. Model selection
    - What should the specification be?

2. Model validation
    - Does the model generalize to other (similar) contexts?

- The goal of machine learning is to automate these processes to maximize prediction

- This is different from the goal of econometrics (causal inference)

---

# Model selection

- In classical econometrics, we always select our models by hand

- This is what the famed and sometimes maligned "robustness checks" address

- In machine learning, we let the computer tell us which specification is "best"

- "Best" is defined by minimizing both in- and out-of-sample prediction error

- This involves estimating many different specifications

- For complex models, automated model selection is computationally intensive

- Rust (1987) did this by hand when exploring the shape of the cost function

---

# Model validation

- Broadly speaking, model validation is about whether the model "makes sense"

- It can be qualitative ("do the parameter results make sense? conform to theory?")

- It can also be quantitative ("is the prediction error sufficiently low?")

- In machine learning, .hi[cross-validation] is typically used

- Cross-validation informs about the optimal complexity of the model

- Then we (can) automate our specification search subject to that optimal complexity

- Automated specification search is still not commonly done in structural estimation

---

# How cross-validation works (Adams, 2018)

.center[*(Figure: cross-validation diagram from Adams, 2018)*]

- Blue is the data that we use to estimate the model's parameters

- We randomly hold out `\(K\)` portions of this data one-at-a-time (Green boxes)

- We assess the performance of the model in the Green data

- This tells us the optimal complexity (by "hyperparameters" if CV is automated)

---

# Validation in Lang and Palacios (2018)

- A common question is how much unobserved heterogeneity to allow for

- This is not _ex ante_ knowable

- The typical approach is to put "enough" in there such that the in-sample fit is good

- Lang and Palacios (2018) search for the optimal number of types

- They use a 20% holdout sample to assess the out-of-sample fit

- Note that they do not do cross-validation

- They simply compare how the model fits in the estimation and holdout data

---

# Validation in Delavande and Zafar (2019)

- Another form of validation could be to predict treatment effects in a control group

- If the model is good, it should be able to do this

- Delavande and Zafar (2019) do something similar to this

- Rather than comparing model predictions in/out of sample,

- They compare them for a different state of the world

- In their case, the new state of the world is one without schooling costs

- They can do this because they elicited preferences for schooling under both regimes

- But they only used one regime when estimating their model

---

# Counterfactuals

- Counterfactuals involve changing the model, simulating new data and then comparing with the status quo

- "Changing a policy" typically can be captured by changing the value of a set of parameters

- "Policy experiments" typically mimic some real-world policy in this way

- .hi[Note:] policy experiments always assume invariance of the other model parameters!

- So if I change the value of one parameter, that won't "leak" into any others

- This may be a heroic assumption, depending on the model

---

# Examples of counterfactuals

.smaller[
- Fu, Grau, and Rivera (2020)
    - Would low-SES youth commit fewer crimes if we sent them to better schools?

- Arcidiacono, Aucejo, Maurel et al. (2016)
    - How would human capital investment change if there were perfect information?

- Arcidiacono, Kinsler, and Ransom (2022a)
    - Would Harvard become less white if it were to eliminate legacy admissions?

- Ransom (2022)
    - Would American workers move to a new city if they were paid $10,000 to do so?

- Caucutt, Lochner, Mullins et al. (2020)
    - What can reduce disparities in child investment across rich/poor families?
]

---

# How to do counterfactuals

- To perform the counterfactual simulations, follow these steps:
    - Impose parameter restrictions
    - Simulate fake data under those restrictions
    - Compare the resulting model summary to a "baseline" prediction
    - (The baseline prediction is your model fit)

- As you can see, if you use SMM, you've already got the code to simulate the model

---

# Coding example: multinomial logit estimation

This code comes from the first question of Problem Set 4:

.scroll-box-16[
``` julia
using Random, LinearAlgebra, Statistics, Optim, DataFrames, CSV, HTTP, GLM, ForwardDiff, FreqTables

# read in data
url = "https://raw.githubusercontent.com/OU-PhD-Econometrics/fall-2020/master/ProblemSets/PS4-mixture/nlsw88t.csv"
# recent versions of CSV.jl require an explicit sink (here, DataFrame)
df = CSV.read(HTTP.get(url).body, DataFrame)
X = [df.age df.white df.collgrad]
Z = hcat(df.elnwage1, df.elnwage2, df.elnwage3, df.elnwage4, df.elnwage5, df.elnwage6, df.elnwage7, df.elnwage8)
y = df.occ_code

# estimate multinomial logit model
include("mlogit_with_Z.jl")
θ_start = [ .0403744; .2439942; -1.57132; .0433254; .1468556; -2.959103; .1020574; .7473086; -4.12005; .0375628; .6884899; -3.65577; .0204543; -.3584007; -4.376929; .1074636; -.5263738; -6.199197; .1168824; -.2870554; -5.322248; 1.307477]
td = TwiceDifferentiable(b -> mlogit_with_Z(b, X, Z, y), θ_start; autodiff = :forward);
θ̂_optim_ad = optimize(td, θ_start, LBFGS(), Optim.Options(g_tol = 1e-5, iterations=100_000, show_trace=true, show_every=50));
θ̂_mle_ad = θ̂_optim_ad.minimizer
# Hessian of the objective (the negative log likelihood) at the optimum
H = Optim.hessian!(td, θ̂_mle_ad)
θ̂_mle_ad_se = sqrt.(diag(inv(H)))
```
]

---

# Coding example: model fit and counterfactuals

Now let's evaluate model fit and the effect of a counterfactual policy

.scroll-box-16[
``` julia
# get baseline predicted probabilities
function plogit(θ, X, Z, J)
    α = θ[1:end-1]   # coefficients on X, one set per non-base alternative
    γ = θ[end]       # coefficient on the alternative-specific wage Z
    K = size(X,2)
    bigα = [reshape(α,K,J-1) zeros(K)]
    P = exp.(X*bigα .+ Z*γ) ./ sum.(eachrow(exp.(X*bigα .+ Z*γ)))
    return P
end

println(θ̂_mle_ad)
P = plogit(θ̂_mle_ad, X, Z, length(unique(y)))

# compare with data
modelfitdf = DataFrame(data_freq = 100*convert(Array, prop(freqtable(df,:occ_code))),
                       P = 100*vec(mean(P'; dims=2)))
display(modelfitdf)

# now run a counterfactual:
# suppose people don't care about wages when choosing occupation
# this means setting θ̂_mle_ad[end] = 0
θ̂_cfl = deepcopy(θ̂_mle_ad)
θ̂_cfl[end] = 0
P_cfl = plogit(θ̂_cfl, X, Z, length(unique(y)))
modelfitdf.P_cfl = 100*vec(mean(P_cfl'; dims=2))
modelfitdf.diff = modelfitdf.P_cfl .- modelfitdf.P
modelfitdf.ew = vec(mean(Z'; dims=2))
display(modelfitdf)

│ Row │ data_freq │ P       │ P_cfl   │ diff      │ ew      │
│     │ Float64   │ Float64 │ Float64 │ Float64   │ Float64 │
├─────┼───────────┼─────────┼─────────┼───────────┼─────────┤
│ 1   │ 10.5976   │ 11.2549 │ 8.34334 │ -2.91158  │ 1.96627 │
│ 2   │ 5.24943   │ 6.21553 │ 4.98708 │ -1.22845  │ 1.85026 │
│ 3   │ 38.6462   │ 37.5457 │ 34.4537 │ -3.092    │ 1.71771 │
│ 4   │ 4.66067   │ 4.88623 │ 5.70078 │ 0.814551  │ 1.54756 │
│ 5   │ 1.54063   │ 1.80065 │ 1.62727 │ -0.173382 │ 1.75298 │
│ 6   │ 15.1525   │ 14.676  │ 15.123  │ 0.447092  │ 1.58918 │
│ 7   │ 18.3571   │ 17.3364 │ 23.3689 │ 6.03245   │ 1.42956 │
│ 8   │ 5.79588   │ 6.28457 │ 6.39589 │ 0.111316  │ 1.59501 │
```
]

---

# Discussion of model fit and counterfactuals

- Model fit is good, though I would have expected it to be even better
    - MLE is designed to match the choice frequencies in the data

- The counterfactuals make sense
    - Removing the preference for earnings leads to re-sorting across occupations
    - People sort into low-wage occupations and out of high-wage ones

---

# Confidence intervals around counterfactuals

- The counterfactuals above give us a new predicted occupational distribution

- But we don't know what the confidence intervals are around the prediction

- Our estimates inherently come with sampling variation

- We should incorporate that variation into our counterfactual

- How do we do this? Bootstrapping

---

# Bootstrapping to get CIs around counterfactuals

- Recall: `\(-H^{-1}\)` is the variance matrix of our estimates, where `\(H\)` is the Hessian of the log likelihood `\(\ell\)`

- Assume that `\(\hat{\theta} \sim MVN(\theta,-H^{-1})\)`
    - but remember that we minimize `\(-\ell\)`, whose Hessian already has the sign flipped, so in the code we just use `\(H^{-1}\)`

- We sample from this distribution `\(B\)` times and compute our new counterfactuals

- Then we can look at the 2.5th and 97.5th percentiles

- This will represent a 95% confidence interval

- This algorithm is known as the .hi[parametric bootstrap]

- Cf. the .hi[nonparametric bootstrap], where we randomly re-sample from our data

---

# Coding example: parametric bootstrap

- Here's how we can get the confidence interval around the policy effect

- Note: it's uncommon to see this, due to the computational demands of computing even one counterfactual

.scroll-box-12[
``` julia
# now do parametric bootstrap
using Distributions   # provides MvNormal
Random.seed!(1234)
# this code makes inv(H) truly symmetric (not just numerically symmetric)
invHfix = UpperTriangular(inv(H)) .- Diagonal(inv(H)) .+ UpperTriangular(inv(H))'
d = MvNormal(θ̂_mle_ad,invHfix)
B = 1000
cfl_bs = zeros(8,B)
for b = 1:B
    # draw parameters, then compute baseline and counterfactual shares for this draw
    θ_draw = rand(d)
    Pb = plogit(θ_draw, X, Z, length(unique(y)))
    Pbs = 100*vec(mean(Pb'; dims=2))
    θ_draw[end] = 0
    P_cflb = plogit(θ_draw, X, Z, length(unique(y)))
    Pcfl_bs = 100*vec(mean(P_cflb'; dims=2))
    cfl_bs[:,b] = Pcfl_bs .- Pbs
end

# 2.5th and 97.5th percentiles of the policy effect, occupation by occupation
bsdiffL = deepcopy(cfl_bs[:,1])
bsdiffU = deepcopy(cfl_bs[:,1])
for i=1:size(cfl_bs,1)
    bsdiffL[i] = quantile(cfl_bs[i,:],.025)
    bsdiffU[i] = quantile(cfl_bs[i,:],.975)
end
modelfitdf.bs_diffL = bsdiffL
modelfitdf.bs_diffU = bsdiffU
display(modelfitdf)

│ Row │ data_freq │ P       │ P_cfl   │ diff      │ ew      │ bs_diffL  │ bs_diffU  │
│     │ Float64   │ Float64 │ Float64 │ Float64   │ Float64 │ Float64   │ Float64   │
├─────┼───────────┼─────────┼─────────┼───────────┼─────────┼───────────┼───────────┤
│ 1   │ 10.5976   │ 11.2549 │ 8.34334 │ -2.91158  │ 1.96627 │ -3.37791  │ -2.40627  │
│ 2   │ 5.24943   │ 6.21553 │ 4.98708 │ -1.22845  │ 1.85026 │ -1.46601  │ -1.0004   │
│ 3   │ 38.6462   │ 37.5457 │ 34.4537 │ -3.092    │ 1.71771 │ -3.72105  │ -2.4462   │
│ 4   │ 4.66067   │ 4.88623 │ 5.70078 │ 0.814551  │ 1.54756 │ 0.666758  │ 0.967763  │
│ 5   │ 1.54063   │ 1.80065 │ 1.62727 │ -0.173382 │ 1.75298 │ -0.214975 │ -0.135771 │
│ 6   │ 15.1525   │ 14.676  │ 15.123  │ 0.447092  │ 1.58918 │ 0.387452  │ 0.506462  │
│ 7   │ 18.3571   │ 17.3364 │ 23.3689 │ 6.03245   │ 1.42956 │ 4.82327   │ 7.20728   │
│ 8   │ 5.79588   │ 6.28457 │ 6.39589 │ 0.111316  │ 1.59501 │ 0.0795148 │ 0.143139  │
```
]

---

# References

.tiny[
Ackerberg, D. A. (2003). "Advertising, Learning, and Consumer Choice in Experience Good Markets: An Empirical Examination". In: _International Economic Review_ 44.3, pp. 1007-1040. DOI: [10.1111/1468-2354.t01-2-00098](https://doi.org/10.1111%2F1468-2354.t01-2-00098). Adams, R. P. (2018). _Model Selection and Cross Validation_. Lecture Notes. Princeton University. URL: [https://www.cs.princeton.edu/courses/archive/fall18/cos324/files/model-selection.pdf](https://www.cs.princeton.edu/courses/archive/fall18/cos324/files/model-selection.pdf). Ahlfeldt, G. M., S. J. Redding, D. M. Sturm, et al. (2015). "The Economics of Density: Evidence From the Berlin Wall". In: _Econometrica_ 83.6, pp. 2127-2189. DOI: [10.3982/ECTA10876](https://doi.org/10.3982%2FECTA10876). Altonji, J. G., T. E. Elder, and C. R. Taber (2005). "Selection on Observed and Unobserved Variables: Assessing the Effectiveness of Catholic Schools". In: _Journal of Political Economy_ 113.1, pp. 151-184. DOI: [10.1086/426036](https://doi.org/10.1086%2F426036). Altonji, J. G. and C. R. Pierret (2001). "Employer Learning and Statistical Discrimination". In: _Quarterly Journal of Economics_ 116.1, pp. 313-350. DOI: [10.1162/003355301556329](https://doi.org/10.1162%2F003355301556329). Angrist, J. D. and A. B. Krueger (1991). "Does Compulsory School Attendance Affect Schooling and Earnings?" In: _Quarterly Journal of Economics_ 106.4, pp. 979-1014. DOI: [10.2307/2937954](https://doi.org/10.2307%2F2937954). Angrist, J. D.
and J. Pischke (2009). _Mostly Harmless Econometrics: An Empiricist's Companion_. Princeton University Press. ISBN: 0691120358. Arcidiacono, P. (2004). "Ability Sorting and the Returns to College Major". In: _Journal of Econometrics_ 121, pp. 343-375. DOI: [10.1016/j.jeconom.2003.10.010](https://doi.org/10.1016%2Fj.jeconom.2003.10.010). Arcidiacono, P., E. Aucejo, A. Maurel, et al. (2016). _College Attrition and the Dynamics of Information Revelation_. Working Paper. Duke University. URL: [https://tyleransom.github.io/research/CollegeDropout2016May31.pdf](https://tyleransom.github.io/research/CollegeDropout2016May31.pdf). Arcidiacono, P., E. Aucejo, A. Maurel, et al. (2025). "College Attrition and the Dynamics of Information Revelation". In: _Journal of Political Economy_ 133.1. DOI: [10.1086/732526](https://doi.org/10.1086%2F732526). Arcidiacono, P. and J. B. Jones (2003). "Finite Mixture Distributions, Sequential Likelihood and the EM Algorithm". In: _Econometrica_ 71.3, pp. 933-946. DOI: [10.1111/1468-0262.00431](https://doi.org/10.1111%2F1468-0262.00431). Arcidiacono, P., J. Kinsler, and T. Ransom (2022b). "Asian American Discrimination in Harvard Admissions". In: _European Economic Review_ 144, p. 104079. DOI: [10.1016/j.euroecorev.2022.104079](https://doi.org/10.1016%2Fj.euroecorev.2022.104079). Arcidiacono, P., J. Kinsler, and T. Ransom (2022a). "Legacy and Athlete Preferences at Harvard". In: _Journal of Labor Economics_ 40.1, pp. 133-156. DOI: [10.1086/713744](https://doi.org/10.1086%2F713744). Arcidiacono, P. and R. A. Miller (2011). "Conditional Choice Probability Estimation of Dynamic Discrete Choice Models With Unobserved Heterogeneity". In: _Econometrica_ 79.6, pp. 1823-1867. DOI: [10.3982/ECTA7743](https://doi.org/10.3982%2FECTA7743). Arroyo Marioli, F., F. Bullano, S. Kucinskas, et al. (2020). _Tracking R of COVID-19: A New Real-Time Estimation Using the Kalman Filter_. Working Paper. medRxiv. 
DOI: [10.1101/2020.04.19.20071886](https://doi.org/10.1101%2F2020.04.19.20071886). Ashworth, J., V. J. Hotz, A. Maurel, et al. (2021). "Changes across Cohorts in Wage Returns to Schooling and Early Work Experiences". In: _Journal of Labor Economics_ 39.4, pp. 931-964. DOI: [10.1086/711851](https://doi.org/10.1086%2F711851). Attanasio, O. P., C. Meghir, and A. Santiago (2011). "Education Choices in Mexico: Using a Structural Model and a Randomized Experiment to Evaluate PROGRESA". In: _Review of Economic Studies_ 79.1, pp. 37-66. DOI: [10.1093/restud/rdr015](https://doi.org/10.1093%2Frestud%2Frdr015). Aucejo, E. M. and J. James (2019). "Catching Up to Girls: Understanding the Gender Imbalance in Educational Attainment Within Race". In: _Journal of Applied Econometrics_ 34.4, pp. 502-525. DOI: [10.1002/jae.2699](https://doi.org/10.1002%2Fjae.2699). Baragatti, M., A. Grimaud, and D. Pommeret (2013). "Likelihood-free Parallel Tempering". In: _Statistics and Computing_ 23.4, pp. 535-549. DOI: [10.1007/s11222-012-9328-6](https://doi.org/10.1007%2Fs11222-012-9328-6). Bayer, P., R. McMillan, A. Murphy, et al. (2016). "A Dynamic Model of Demand for Houses and Neighborhoods". In: _Econometrica_ 84.3, pp. 893-942. DOI: [10.3982/ECTA10170](https://doi.org/10.3982%2FECTA10170). Begg, C. B. and R. Gray (1984). "Calculation of Polychotomous Logistic Regression Parameters Using Individualized Regressions". In: _Biometrika_ 71.1, pp. 11-18. DOI: [10.1093/biomet/71.1.11](https://doi.org/10.1093%2Fbiomet%2F71.1.11). Beggs, S. D., N. S. Cardell, and J. Hausman (1981). "Assessing the Potential Demand for Electric Cars". In: _Journal of Econometrics_ 17.1, pp. 1-19. DOI: [10.1016/0304-4076(81)90056-7](https://doi.org/10.1016%2F0304-4076%2881%2990056-7). Berry, S., J. Levinsohn, and A. Pakes (1995). "Automobile Prices in Market Equilibrium". In: _Econometrica_ 63.4, pp. 841-890. URL: [http://www.jstor.org/stable/2171802](http://www.jstor.org/stable/2171802). Blass, A. A., S.
Lach, and C. F. Manski (2010). "Using Elicited Choice Probabilities to Estimate Random Utility Models: Preferences for Electricity Reliability". In: _International Economic Review_ 51.2, pp. 421-440. DOI: [10.1111/j.1468-2354.2010.00586.x](https://doi.org/10.1111%2Fj.1468-2354.2010.00586.x). Blundell, R. (2010). "Comments on: ``Structural vs. Atheoretic Approaches to Econometrics'' by Michael Keane". In: _Journal of Econometrics_ 156.1, pp. 25-26. DOI: [10.1016/j.jeconom.2009.09.005](https://doi.org/10.1016%2Fj.jeconom.2009.09.005). Bresnahan, T. F., S. Stern, and M. Trajtenberg (1997). "Market Segmentation and the Sources of Rents from Innovation: Personal Computers in the Late 1980s". In: _The RAND Journal of Economics_ 28.0, pp. S17-S44. DOI: [10.2307/3087454](https://doi.org/10.2307%2F3087454). Brien, M. J., L. A. Lillard, and S. Stern (2006). "Cohabitation, Marriage, and Divorce in a Model of Match Quality". In: _International Economic Review_ 47.2, pp. 451-494. DOI: [10.1111/j.1468-2354.2006.00385.x](https://doi.org/10.1111%2Fj.1468-2354.2006.00385.x). Card, D. (1995). "Using Geographic Variation in College Proximity to Estimate the Return to Schooling". In: _Aspects of Labor Market Behaviour: Essays in Honour of John Vanderkamp_. Ed. by L. N. Christofides, E. K. Grant and R. Swidinsky. Toronto: University of Toronto Press. Cardell, N. S. (1997). "Variance Components Structures for the Extreme-Value and Logistic Distributions with Application to Models of Heterogeneity". In: _Econometric Theory_ 13.2, pp. 185-213. URL: [https://www.jstor.org/stable/3532724](https://www.jstor.org/stable/3532724). Caucutt, E. M., L. Lochner, J. Mullins, et al. (2020). _Child Skill Production: Accounting for Parental and Market-Based Time and Goods Investments_. Working Paper 27838. National Bureau of Economic Research. DOI: [10.3386/w27838](https://doi.org/10.3386%2Fw27838). Chen, X., H. Hong, and D. Nekipelov (2011). "Nonlinear Models of Measurement Errors". 
In: _Journal of Economic Literature_ 49.4, pp. 901-937. DOI: [10.1257/jel.49.4.901](https://doi.org/10.1257%2Fjel.49.4.901). Chintagunta, P. K. (1992). "Estimating a Multinomial Probit Model of Brand Choice Using the Method of Simulated Moments". In: _Marketing Science_ 11.4, pp. 386-407. DOI: [10.1287/mksc.11.4.386](https://doi.org/10.1287%2Fmksc.11.4.386). Cinelli, C. and C. Hazlett (2020). "Making Sense of Sensitivity: Extending Omitted Variable Bias". In: _Journal of the Royal Statistical Society: Series B (Statistical Methodology)_ 82.1, pp. 39-67. DOI: [10.1111/rssb.12348](https://doi.org/10.1111%2Frssb.12348). Coate, P. and K. Mangum (2019). _Fast Locations and Slowing Labor Mobility_. Working Paper 19-49. Federal Reserve Bank of Philadelphia. Cunha, F., J. J. Heckman, and S. M. Schennach (2010). "Estimating the Technology of Cognitive and Noncognitive Skill Formation". In: _Econometrica_ 78.3, pp. 883-931. DOI: [10.3982/ECTA6551](https://doi.org/10.3982%2FECTA6551). Cunningham, S. (2021). _Causal Inference: The Mixtape_. Yale University Press. URL: [https://www.scunning.com/causalinference_norap.pdf](https://www.scunning.com/causalinference_norap.pdf). Delavande, A. and C. F. Manski (2015). "Using Elicited Choice Probabilities in Hypothetical Elections to Study Decisions to Vote". In: _Electoral Studies_ 38, pp. 28-37. DOI: [10.1016/j.electstud.2015.01.006](https://doi.org/10.1016%2Fj.electstud.2015.01.006). Delavande, A. and B. Zafar (2019). "University Choice: The Role of Expected Earnings, Nonpecuniary Outcomes, and Financial Constraints". In: _Journal of Political Economy_ 127.5, pp. 2343-2393. DOI: [10.1086/701808](https://doi.org/10.1086%2F701808). Diegert, P., M. A. Masten, and A. Poirier (2025). _Assessing Omitted Variable Bias when the Controls are Endogenous_. arXiv. DOI: [10.48550/ARXIV.2206.02303](https://doi.org/10.48550%2FARXIV.2206.02303). Erdem, T. and M. P. Keane (1996). 
"Decision-Making under Uncertainty: Capturing Dynamic Brand Choice Processes in Turbulent Consumer Goods Markets". In: _Marketing Science_ 15.1, pp. 1-20. DOI: [10.1287/mksc.15.1.1](https://doi.org/10.1287%2Fmksc.15.1.1). Evans, R. W. (2018). _Simulated Method of Moments (SMM) Estimation_. QuantEcon Note. University of Chicago. URL: [https://notes.quantecon.org/submission/5b3db2ceb9eab00015b89f93](https://notes.quantecon.org/submission/5b3db2ceb9eab00015b89f93). Farber, H. S. and R. Gibbons (1996). "Learning and Wage Dynamics". In: _Quarterly Journal of Economics_ 111.4, pp. 1007-1047. DOI: [10.2307/2946706](https://doi.org/10.2307%2F2946706). Fu, C., N. Grau, and J. Rivera (2020). _Wandering Astray: Teenagers' Choices of Schooling and Crime_. Working Paper. University of Wisconsin-Madison. URL: [https://www.ssc.wisc.edu/~cfu/wander.pdf](https://www.ssc.wisc.edu/~cfu/wander.pdf). Gillingham, K., F. Iskhakov, A. Munk-Nielsen, et al. (2022). "Equilibrium Trade in Automobiles". In: _Journal of Political Economy_. DOI: [10.1086/720463](https://doi.org/10.1086%2F720463). Haile, P. (2019). _``Structural vs. Reduced Form'' Language and Models in Empirical Economics_. Lecture Slides. Yale University. URL: [http://www.econ.yale.edu/~pah29/intro.pdf](http://www.econ.yale.edu/~pah29/intro.pdf). Haile, P. (2024). _Models, Measurement, and the Language of Empirical Economics_. Lecture Slides. Yale University. URL: [https://www.dropbox.com/s/8kwtwn30dyac18s/intro.pdf](https://www.dropbox.com/s/8kwtwn30dyac18s/intro.pdf). Heckman, J. J., J. Stixrud, and S. Urzua (2006). "The Effects of Cognitive and Noncognitive Abilities on Labor Market Outcomes and Social Behavior". In: _Journal of Labor Economics_ 24.3, pp. 411-482. DOI: [10.1086/504455](https://doi.org/10.1086%2F504455). Hotz, V. J. and R. A. Miller (1993). "Conditional Choice Probabilities and the Estimation of Dynamic Models". In: _The Review of Economic Studies_ 60.3, pp. 497-529. 
DOI: [10.2307/2298122](https://doi.org/10.2307%2F2298122). Hurwicz, L. (1950). "Generalization of the Concept of Identification". In: _Statistical Inference in Dynamic Economic Models_. Hoboken, NJ: John Wiley and Sons, pp. 245-257. Ishimaru, S. (2022). _Geographic Mobility of Youth and Spatial Gaps in Local College and Labor Market Opportunities_. Working Paper. Hitotsubashi University. James, J. (2011). _Ability Matching and Occupational Choice_. Working Paper 11-25. Federal Reserve Bank of Cleveland. James, J. (2017). "MM Algorithm for General Mixed Multinomial Logit Models". In: _Journal of Applied Econometrics_ 32.4, pp. 841-857. DOI: [10.1002/jae.2532](https://doi.org/10.1002%2Fjae.2532). Jin, H. and H. Shen (2020). "Foreign Asset Accumulation Among Emerging Market Economies: A Case for Coordination". In: _Review of Economic Dynamics_ 35.1, pp. 54-73. DOI: [10.1016/j.red.2019.04.006](https://doi.org/10.1016%2Fj.red.2019.04.006). Keane, M. P. (2010). "Structural vs. Atheoretic Approaches to Econometrics". In: _Journal of Econometrics_ 156.1, pp. 3-20. DOI: [10.1016/j.jeconom.2009.09.003](https://doi.org/10.1016%2Fj.jeconom.2009.09.003). Keane, M. P. and K. I. Wolpin (1997). "The Career Decisions of Young Men". In: _Journal of Political Economy_ 105.3, pp. 473-522. DOI: [10.1086/262080](https://doi.org/10.1086%2F262080). Koopmans, T. C. and O. Reiersol (1950). "The Identification of Structural Characteristics". In: _The Annals of Mathematical Statistics_ 21.2, pp. 165-181. URL: [http://www.jstor.org/stable/2236899](http://www.jstor.org/stable/2236899). Kosar, G., T. Ransom, and W. van der Klaauw (2022). "Understanding Migration Aversion Using Elicited Counterfactual Choice Probabilities". In: _Journal of Econometrics_ 231.1, pp. 123-147. DOI: [10.1016/j.jeconom.2020.07.056](https://doi.org/10.1016%2Fj.jeconom.2020.07.056). Krauth, B. (2016). "Bounding a Linear Causal Effect Using Relative Correlation Restrictions". In: _Journal of Econometric Methods_ 5.1, pp. 
117-141. DOI: [10.1515/jem-2013-0013](https://doi.org/10.1515%2Fjem-2013-0013). Lang, K. and M. D. Palacios (2018). _The Determinants of Teachers' Occupational Choice_. Working Paper 24883. National Bureau of Economic Research. DOI: [10.3386/w24883](https://doi.org/10.3386%2Fw24883). Lee, D. S., J. McCrary, M. J. Moreira, et al. (2020). _Valid t-ratio Inference for IV_. Working Paper. arXiv. URL: [https://arxiv.org/abs/2010.05058](https://arxiv.org/abs/2010.05058). Lewbel, A. (2019). "The Identification Zoo: Meanings of Identification in Econometrics". In: _Journal of Economic Literature_ 57.4, pp. 835-903. DOI: [10.1257/jel.20181361](https://doi.org/10.1257%2Fjel.20181361). Mahoney, N. (2022). "Principles for Combining Descriptive and Model-Based Analysis in Applied Microeconomics Research". In: _Journal of Economic Perspectives_ 36.3, pp. 211-22. DOI: [10.1257/jep.36.3.211](https://doi.org/10.1257%2Fjep.36.3.211). McFadden, D. (1978). "Modelling the Choice of Residential Location". In: _Spatial Interaction Theory and Planning Models_. Ed. by A. Karlqvist, L. Lundqvist, F. Snickers and J. W. Weibull. Amsterdam: North Holland, pp. 75-96. McFadden, D. (1989). "A Method of Simulated Moments for Estimation of Discrete Response Models Without Numerical Integration". In: _Econometrica_ 57.5, pp. 995-1026. DOI: [10.2307/1913621](https://doi.org/10.2307%2F1913621). URL: [http://www.jstor.org/stable/1913621](http://www.jstor.org/stable/1913621). Mellon, J. (2020). _Rain, Rain, Go Away: 137 Potential Exclusion-Restriction Violations for Studies Using Weather as an Instrumental Variable_. Working Paper. University of Manchester. URL: [https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3715610](https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3715610). Miller, R. A. (1984). "Job Matching and Occupational Choice". In: _Journal of Political Economy_ 92.6, pp. 1086-1120. DOI: [10.1086/261276](https://doi.org/10.1086%2F261276). Mincer, J. (1974). 
_Schooling, Experience and Earnings_. New York: Columbia University Press for National Bureau of Economic Research. Ost, B., W. Pan, and D. Webber (2018). "The Returns to College Persistence for Marginal Students: Regression Discontinuity Evidence from University Dismissal Policies". In: _Journal of Labor Economics_ 36.3, pp. 779-805. DOI: [10.1086/696204](https://doi.org/10.1086%2F696204). Oster, E. (2019). "Unobservable Selection and Coefficient Stability: Theory and Evidence". In: _Journal of Business & Economic Statistics_ 37.2, pp. 187-204. DOI: [10.1080/07350015.2016.1227711](https://doi.org/10.1080%2F07350015.2016.1227711). Pischke, S. (2007). _Lecture Notes on Measurement Error_. Lecture Notes. London School of Economics. URL: [http://econ.lse.ac.uk/staff/spischke/ec524/Merr_new.pdf](http://econ.lse.ac.uk/staff/spischke/ec524/Merr_new.pdf). Ransom, M. R. and T. Ransom (2018). "Do High School Sports Build or Reveal Character? Bounding Causal Estimates of Sports Participation". In: _Economics of Education Review_ 64, pp. 75-89. DOI: [10.1016/j.econedurev.2018.04.002](https://doi.org/10.1016%2Fj.econedurev.2018.04.002). Ransom, T. (2022). "Labor Market Frictions and Moving Costs of the Employed and Unemployed". In: _Journal of Human Resources_ 57.S, pp. S137-S166. DOI: [10.3368/jhr.monopsony.0219-10013R2](https://doi.org/10.3368%2Fjhr.monopsony.0219-10013R2). Rudik, I. (2020). "Optimal Climate Policy When Damages Are Unknown". In: _American Economic Journal: Economic Policy_ 12.2, pp. 340-373. DOI: [10.1257/pol.20160541](https://doi.org/10.1257%2Fpol.20160541). Rust, J. (1987). "Optimal Replacement of GMC Bus Engines: An Empirical Model of Harold Zurcher". In: _Econometrica_ 55.5, pp. 999-1033. URL: [http://www.jstor.org/stable/1911259](http://www.jstor.org/stable/1911259). Shalizi, C. R. (2019). _Advanced Data Analysis from an Elementary Point of View_. Cambridge University Press. 
URL: [http://www.stat.cmu.edu/~cshalizi/ADAfaEPoV/ADAfaEPoV.pdf](http://www.stat.cmu.edu/~cshalizi/ADAfaEPoV/ADAfaEPoV.pdf). Smith Jr., A. A. (2008). "Indirect Inference". In: _The New Palgrave Dictionary of Economics_. Ed. by S. N. Durlauf and L. E. Blume. Vol. 1-8. London: Palgrave Macmillan. DOI: [10.1007/978-1-349-58802-2](https://doi.org/10.1007%2F978-1-349-58802-2). URL: [http://www.econ.yale.edu/smith/palgrave7.pdf](http://www.econ.yale.edu/smith/palgrave7.pdf). Stinebrickner, R. and T. Stinebrickner (2014a). "Academic Performance and College Dropout: Using Longitudinal Expectations Data to Estimate a Learning Model". In: _Journal of Labor Economics_ 32.3, pp. 601-644. DOI: [10.1086/675308](https://doi.org/10.1086%2F675308). Stinebrickner, R. and T. R. Stinebrickner (2014b). "A Major in Science? Initial Beliefs and Final Outcomes for College Major and Dropout". In: _Review of Economic Studies_ 81.1, pp. 426-472. DOI: [10.1093/restud/rdt025](https://doi.org/10.1093%2Frestud%2Frdt025). Su, C. and K. L. Judd (2012). "Constrained Optimization Approaches to Estimation of Structural Models". In: _Econometrica_ 80.5, pp. 2213-2230. DOI: [10.3982/ECTA7925](https://doi.org/10.3982%2FECTA7925). Train, K. (2009). _Discrete Choice Methods with Simulation_. 2nd ed. Cambridge; New York: Cambridge University Press. ISBN: 9780521766555. Wiswall, M. and B. Zafar (2018). "Preference for the Workplace, Investment in Human Capital, and Gender". In: _Quarterly Journal of Economics_ 133.1, pp. 457-507. DOI: [10.1093/qje/qjx035](https://doi.org/10.1093%2Fqje%2Fqjx035). Young, A. (2020). _Consistency without Inference: Instrumental Variables in Practical Application_. Working Paper. London School of Economics. ]
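
---

# Appendix: simulation-based model fit (sketch)

The "Simulating a new dataset" slide describes drawing the unobservables, simulating choices, and repeating the process to damp simulation error. Below is a minimal sketch of that idea for a static multinomial logit. It is not the PS4 code: the sample size, covariates (`X_demo`), coefficients (`β_demo`), and the helper names `logit_probs`, `simulate_choices`, and `shares` are all made up for illustration.

.scroll-box-16[
``` julia
using Random, Statistics

# NOTE: all sizes and parameter values below are hypothetical (not the PS4 estimates)
N, K, J = 5_000, 2, 3
rng = MersenneTwister(2024)
X_demo = [ones(N) randn(rng, N)]        # constant + one made-up regressor
β_demo = [0.5 -0.2 0.0; 1.0 0.3 0.0]    # K×J coefficients; last alternative normalized to 0

# analytic multinomial logit choice probabilities (N×J)
function logit_probs(X, β)
    V = X * β
    expV = exp.(V .- maximum(V; dims=2))   # subtract the row max for numerical stability
    return expV ./ sum(expV; dims=2)
end

# simulate one choice per person: add Type I extreme value shocks and take the argmax
function simulate_choices(rng, X, β)
    U = X * β
    U .-= log.(-log.(rand(rng, size(U)...)))   # inverse-CDF draws: ε = -log(-log(u))
    return [argmax(view(U, i, :)) for i in axes(U, 1)]
end

# choice shares implied by a vector of simulated choices
shares(y, J) = [mean(y .== j) for j in 1:J]

# repeat the simulation R times and average the shares across replications
R = 20
sim_shares = mean([shares(simulate_choices(rng, X_demo, β_demo), J) for r in 1:R])

# shares implied directly by the choice probabilities (the "predict" route)
model_shares = vec(mean(logit_probs(X_demo, β_demo); dims=1))

# column 1: simulated shares (%), column 2: analytic shares (%)
println(round.(100 .* [sim_shares model_shares]; digits=2))
```
]

With real estimates, the simulated shares would be compared with the data frequencies. Here the two columns should be close to each other only because both are generated from the same made-up parameters.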