Now known as SAP Commerce Cloud, is a comprehensive e-commerce platform designed to help businesses create, manage, and optimize digital commerce experiences across multiple channels. It offers features for product content management, customer experience management, and integrated marketing capabilities.

The platform is built on a unified core that provides key services and models tailored specifically for commerce, accessible across all functional modules. Additional modules extend the platform's capabilities with services like the API registry and support for search and navigation functionalities.

SAP Commerce is highly flexible and extensible, allowing businesses to create personalized commerce solutions. Its architecture leverages multiple abstraction layers and a modular design, enabling customization and scalability to meet specific business needs.

SAP Commerce is a modular, extensible platform that integrates deeply with SAP's broader ecosystem, enabling businesses to deliver scalable and unique commerce experiences across various channels. Its flexibility ensures that it can adapt to diverse business requirements while maintaining seamless integration and consistency throughout operations.

The platform’s layered architecture and modular approach make it an ideal solution for businesses aiming for scalable, adaptable, and future-proof digital transformation in commerce.

The SAP Commerce Platform offers a range of features to support its core functionalities such as containerization, data management, synchronization, security, and localization. For example, the data report feature helps collect raw data from the SAP Commerce database and present it in an easily understandable report format.

SAP Commerce includes a build framework that handles many tasks, including code generation. It is extendable and supports tools like JRebel, which can help avoid unnecessary rebuilds during the development phase, improving efficiency.

The SAP Commerce Cache is an important part of the persistence layer, improving performance by reducing the number of database queries. It stores search results, item attributes, and item instances in memory, making data access faster.

Clustering refers to using multiple SAP Commerce installations that share data from a single database. The clustering functionality offers various configurable options for scaling your system effectively.

With containerization, SAP Commerce allows you to build Docker images and run them as isolated software instances. This makes it easier to deploy your system in different environments, ensuring compatibility without issues.

The platform offers a Data Retention Framework that enables you to define strategies for retaining or cleaning up specified data. The Item Locking Service helps you prevent data modifications or deletions when necessary.

SAP Commerce includes a data validation framework based on the JSR 303 Java validation specification. This framework allows you to validate your data before it's saved, and it can be customized at runtime to meet specific needs.

SAP Commerce simplifies the management of digital assets with tools for media conversion and organization, making it easier to handle various aspects of your digital content.

The Generic Data Report feature allows you to collect and display raw data from the SAP Commerce database in an understandable report format. For historical reports, the audit feature is available, providing a way to track data changes over time.

ImpEx is a text-based tool for importing and exporting data. It allows you to create, update, remove, and transfer data items like customer, product, or order information between CSV files and the platform.

SAP Commerce supports internationalization and localization, enabling you to customize the system for different languages and regional requirements, ensuring that your platform caters to a global audience.

JMS provides asynchronous communication, allowing for remote method invocation. It complements synchronous solutions like RMI and Web services, providing a more flexible communication approach.

SAP Commerce includes robust logging features that let you configure how logs are formatted, sorted by message type and level, and where they are reported, making it easier to track and manage application events.

In multitenant mode, SAP Commerce can run multiple logical instances on a single installation, each with its own distinct set of data. This is useful for hosting multiple online shops for different customers within one SAP Commerce system.

OAuth 2.0 is the default authorization framework used for the Omni Commerce Connect (OCC) Web Services, enabling secure and flexible authentication and authorization.

The platform provides built-in support for processing orders, managing payment methods, handling pricing, and organizing regions for shipping costs. It also offers services for managing orders efficiently.

SAP Commerce provides various tools for monitoring the performance of your application. These tools help you fine-tune your installation, ensuring that the system runs efficiently and effectively.

Polyglot persistence allows you to store certain types of data in alternative storage systems, like document-based storage. This helps reduce the load on the main database and supports non-SQL storage for specific data types.

SAP Commerce provides methods to filter database data based on primary keys, improving query performance and data management.

When customizing SAP Commerce, it's essential to set up a clear business model. The platform provides tools to help you design and implement your business models effectively.

The Product Content and Catalog features allow you to structure, manage, and organize products and product information, making it easier to manage your online store’s catalog.

SAP Commerce supports two built-in search mechanisms: FlexibleSearch and GenericSearch. You can also use ViewType, which represents a database view, to enhance search functionality.

SAP Commerce uses Charon to ensure secure HTTP transactions, protecting sensitive data during communication.

The platform includes tools for managing user access, data encryption, and security, allowing you to control who can access what data and ensuring your platform is secure.

The SAP Commerce ServiceLayer API allows you to develop and extend services for your system. It includes a number of common services and allows you to create custom services tailored to your needs.

ServiceLayer Direct enables you to directly read and write data in the database, bypassing the Jalo layer. This is useful when you need more direct control over your data management.

Platform workflow and collaboration tools make it easier to define and manage complex organizational processes. These tools help improve transparency and efficiency in your workflows.

Omnichannel Capabilities:

Pre-built Accelerators:

Product Content Management (PCM):

Order Management:

Personalization:

Integration with SAP Ecosystem:

Cloud Deployment:

Analytics and Reporting:

SAP Commerce, is built on a modular and extensible architecture that ensures flexibility, scalability,

and

seamless integration with SAP’s ecosystem. The platform supports tailored commerce experiences through its

multi-layered

structure, including the Platform, Modules and Extensions,

Accelerators, and a comprehensive

suite of administrative tools. This design empowers businesses

to efficiently manage complex commerce operations,

integrate with other systems, and adapt to evolving business

requirements.

The core of SAP Commerce is the Platform, which serves as the foundational module common to all configurations. It includes a variety of extensions that provide essential building blocks for higher-level modules. These building blocks include support for core functionalities like:

Additionally, many optional extensions can be added to enhance functionality and support both standard business modules and any custom features you may wish to develop.

Modularity and Extensibility

Seamless Integration

Customizable and Scalable Solutions

Business Agility:

Enhanced Customer Experience:

Future-Proof Design:

Extensions and addons are foundational concepts in SAP Commerce (Hybris). While both are used to deliver functionality, their purposes and use cases differ significantly. Here's a detailed comparison to clarify their distinctions:

core, storefront, cockpit, and various

platform-provided extensions.

addoninstall

command.

captchaaddon, asmaddon, and secureportaladdon.

| Aspect | Extension | Addon |

|---|---|---|

| Purpose | Used to create completely new or standalone functionality. | Used to enhance or add to existing functionality. |

| Scope | Provides business logic, integration, or core functionalities. | Adds modular, optional features (e.g., Captcha, Assisted Service Module). |

| Codebase Impact | Requires direct integration or calls within existing codebases. | Leaves existing code untouched; overlays functionality during the build process. |

| Reusability | Can be reused but often requires additional integration effort. | Highly reusable and easily pluggable into different projects. |

| Installation | No special installation; included during regular builds. | Requires running addoninstall to copy code into the target extension. |

| Removal | Requires manual removal of code references. | Simple to remove using addonuninstall. |

| Build Time | Generally faster, as no file copying is required. | Slower, as addon files are copied into target extensions during the build. |

| Examples | core, storefront, backoffice, integration extensions.

|

captchaaddon, secureportaladdon, commerceorgaddon. |

| Use Case | Choose Extension | Choose Addon |

|---|---|---|

| Developing new functionality | When building large or complex functionality from scratch. | Not suitable. |

| Enhancing storefront functionality | Not ideal. | When adding specific enhancements like Captcha or ASM. |

| Third-party system integration | Preferred for handling integrations with APIs or services. | Not suitable. |

| Reusability across projects | Possible but requires manual integration. | Best suited for reusable, pluggable modules. |

| Adding/removing functionality easily | Requires manual effort for both addition and removal. | Addons are highly modular and can be plugged/unplugged easily. |

addoninstall, copying files) during the

build process.

Modules provide the business logic, APIs, and presentation layers. Each module consists of several extensions that deliver different layers of a business function. For example, a subscription management module might include:

Other common extensions may include web service extensions, such as REST APIs, or AddOns, which allow you to add new functionality to a storefront without modifying core template files. Modules may also leverage business logic from other modules, which is handled by SAP Commerce's build management system during application construction.

Accelerators are pre-built, customizable storefront templates provided by SAP Commerce. These templates support both B2C and B2B business models, along with industry-specific solutions. You can also create a storefront using a decoupled JavaScript implementation, such as Spartacus, for more flexibility in front-end design.

SAP Commerce allows full customization and integration to meet your specific business requirements. You can integrate it with the SAP Business Technology Platform (BTP) to sync data with backend systems like SAP ERP or S/4HANA, or utilize services from the SAP BTP Extensions. Additionally, SAP Commerce allows you to extend or build new features from scratch, leveraging its platform and APIs to create unique, tailored experiences for your customers.

SAP Commerce provides a suite of tools for both system and business administration. System configurations can be managed using the Administration Console. For business management, the Backoffice Administration Cockpit and various specialized perspectives like the Product Management Cockpit and Adaptive Search allow for detailed control over users, roles, access permissions, and other business aspects.

The data model is base of application, it defines the structure of your application.

Business logic is always

based on top of the data model and helps an organize and maintain their database.

each extension has

extension-name-items.xml file.

ItemTypes are the base of the hybris. Item types are used to create new tables or to update existing tables.

The items.xml file in is used to define ItemTypes, which represent the business objects

.

These items are mapped to database tables. The items.xml file specifies attributes,

relations, and other metadata for

each type, helping to configure the data model for the application.

Data entities are defined with item type elements, whereas relations between items are defined with relation

elements.

Item.xml file is locate resource/ extension-name.items.xml file in each extension. which is used for

create data model

of business. you can define new types,override and extend existing types.

Type system is used for design data modeling or organize data. Types define an objects for manage and store

data

with Java implementation. For instance, Java have class and object. Class is blueprint of Object and Object is

instance

of Class. Same concept is follow. Hybris defines Type and Item. Type is blueprint of Item and Item

is

instance of type.

System Related Type : It extends type system itself and deal with manage internal data

Business Related Type: it is manage business activity data like Order, Customer, Product.

A CollectionType contains number of instances of types. It is based on the Java Collection class. you can use of

the

Collection

class and some of its sub-classes (List, Set, and SortedSet). There are two types of relations that you can

build

with CollectionTypes: one to many relations and many to one relations. Both kinds of relation are unidirectional.

Collection Types have technical limitations

If a collection contains a number of PKs, the field value may reach the maximum length of field for the database

and

collection entries may get truncated. you can only store values of a certain length in that database field

and every bit

of information beyond that length gets lost.

As the database entry only contains the PKs and not the items themselves, you cannot run database searches on the

entries

directly.

If a single CollectionType instance has several AtomicType entries that match a search query, you are not able to

detect

the exact number of matches from the database directly.

RelationTypes represent n:m relations. You can link a one item to other item. LinkItems hold two

attributes,

SourceItem and TargetItem, that hold references to the respective item. LinkItem is helper type item which

is

linked together of source and target item.

one-to-one, unidirectional : (attribute definition, such as Product instance - Unit instance)

one-to many,

unidirectional : CollectionType

many-to-one, unidirectional : CollectionType

many-to-many, bidirectional :

RelationType

When to use and when not use Collection/Relation?

There is no such hard and fast rule for choosing

Collection/Relation, we just need to consider few points before

choosing it.

When to Use Collection?

Prefer collection when we are sure that in our current and future requirements, we will

not have many rows mapped for

one side.

It means whenever the collection size is small,we can prefer

collection as it helps to achieve faster retrieval

When not to use Collection?

Don’t use collection whenever the collection size is very big as it can lead to data

truncation

When to use Relation?

Whenever the collection size is bigger or there is a chance that it can grow bigger then

prefer Relation as it assures

that there will be no data truncation.

For many to many , we should go for

Relation always.

When not to use Relation?

We can just prefer collection in place of Relation whenever the collection size is

smaller to compensate slow retrieval

of Relation but in that case we need to negotiate with Bidirectional

mapping.

So choose it based on the above factors which suits your requirements.

As we all know that any attribute we define in item type will have a tag called persistent type.

persistent type=”property”

Corresponding column will be created in the database and hence the values will be

stored in the DB. So it’s called

persistent attribute.

persistent type=”dynamic”

There will be no column created in the database and hence values will not be stored in

the database.

So it’s called Non persistent or dynamic attribute.

For every dynamic attribute we define, we need to mention the attribute handler otherwise Bean Id will be

generated

automatically and we have to use the same bean id while defining Spring bean in XML.

Attribute handler is implemented using Spring.

So we need to mention the spring bean id for the attribute handler.

Then we need to define the class for that

spring bean id which provides the custom logic for the dynamic attribute.

It is possible that one item type can

have any number of dynamic attributes.

Dynamic in enum is completely different from Dynamic attributes.

If an Enumtype is non-dynamic (by default,

dynamic=”false”) we are not allowed to add new values at runtime.

If we add any non-dynamic enumtype without

values,build will fail as it does not have any effect.

So if you want to add new values at runtime we have to

make dynamic=”true” for an enum.

We can change the flag anytime but enforces a system update.

If

dynamic=”false” the servicelayer generates real java enums (having a fixed set of values).

If dynamic=”true” it

generates hybris enums which can be used without fixed values(means we can add run time values).

code :The identifier of this ItemType

extends :The superclass of this ItemType

jaloclass: The fully qualified

classpath of this ItemType

autocreate : If set to true, this ItemType will be created when the platform creates

the type system during

initialization.

generate: If set to true, the platform creates getter and setter

methods for this ItemType.

qualifier: The identifier of this Attribute

redeclare: change its behaviour of an attribute

type: The

identifier of the type this attribute is going to be.

write : Setting modifier to true results in a setter

method being generated for this attribute and setting modifier to

false results in no setter method being

generated for this attribute:

read: Setting modifier to true results in a getter method being generated for this

attribute and setting the modifier to

false results in no getter method being generated for this attribute:

Hybris provide two ways for set the database column type :

By specifying the database column type in the item.xml file, such as

You can also define this in more detail by

specifying database systems

We need to decide one of the ways based on the requirement.

SAP Hybris provides a powerful data modeling framework that allows businesses to define complex relationships and associations between various data elements. The types of data used in SAP Hybris are fundamental for building a robust data model. This article explores key concepts, including the types of data, attributes, and relationships used in the platform.

The SAP Hybris data model consists of several different types of data, each representing a particular category or entity. These data types include Simple Types, Collection Types, and Relation Types, which are essential in defining the structure and relationships of items within the model.

Simple types represent basic data elements that do not have any further complexity. These types typically define attributes such as numbers, text, and dates. Simple types are often used for defining basic properties of an entity, such as a product’s name or a customer’s address.

Some examples of simple types include:

Collection types are used to store multiple instances of a given item type. They allow a data model to support relationships where an entity can have multiple associated items.

There are different types of collections in SAP Hybris, including:

Collection types are especially useful in cases where entities need to reference multiple related entities. For example, a product can have multiple images, and a customer can have multiple orders.

A relation type, also known as a relationship type or simply a relationship, defines the association or connection between two or more entities or data elements within a data model. Relationships play a crucial role in modeling the structure and interactions between different parts of a data model, helping to represent the complex relationships that exist in the real world.

Here are some key aspects and types of relationships in data modeling:

Cardinality: Cardinality describes the number of instances of one entity that can be related to the number of instances of another entity through a relationship. Common cardinality options include "one-to-one," "one-to-many," and "many-to-many."

Directionality: Relationships can be unidirectional or bidirectional. A unidirectional relationship means that one entity knows about the other, but the other doesn't necessarily have knowledge of the first entity. In a bidirectional relationship, both entities are aware of each other.

Here’s an explanation of how relationship types are defined in items.xml:

One-to-One Relationship: A one-to-one relationship can be created by simply defining an attribute of item type.

In this example, the "Employee" item type has a one-to-one relationship with the "IdentityCard" item type. An "Employee" item can have one associated "IdentityCard" item through the "identityCard" attribute.

Practical Use Case: Suppose you have an employee Steve with code "stev8848" who has one identity card with code "id299292". We can define this one-to-one relation as below:

One-to-Many Relationship: A one-to-many relationship between item types defines a relationship where one instance of the source item type can be associated with multiple instances of the target item type. This is known as a "one-to-N" relationship.

In the example, you have two item types: "Country" and "State." Each country can have one or more states, creating a one-to-many relationship.

Practical Use Case: Suppose you have a Country as USA and you want to associate multiple states (e.g., Florida, California, Arizona) with this Country. You can use this one-to-many relationship to link the "Country" item to all the relevant State items.

Many-to-Many Relationship: A many-to-many relationship between item types defines a relationship where multiple instances of one item type can be associated with multiple instances of another item type. This is known as a "N-to-N" relationship.

In this case, you have two item types: "Product" and "Category." Each product can belong to multiple categories, and each category can contain multiple products, creating a many-to-many relationship.

Practical Use Case: Suppose you have a "Clothing" category that contains multiple products, and you also have a "Sale" category that contains some of the same products. You can use this many-to-many relationship to associate these categories with the relevant products.

In Hybris, defining data types is a key task when designing the data model. This is typically done within the items.xml file, where different item types and their attributes are defined. Each data type, whether simple, collection, or relation, can be configured with various attributes that determine its behavior and structure.

For example:

String,

Integer, etc.

By defining these data types properly in the items.xml file, Hybris users can ensure that their system is capable of handling complex data models and relationships, enabling the platform to function smoothly and efficiently.

Here is the revised article without code, maintaining the original explanations and source definitions, but removing the code snippets as requested:

An "item type" is a fundamental concept used to define the structure and characteristics of different data entities or objects within the Hybris. Item types serve as the blueprint for creating, managing, and querying data in a Hybris system.

In an e-commerce system, common item types might include "Product," "Category," "Customer," "Order," and "Payment."

For content management, item types like "CMSComponent" and "CMSPages" can be used to model web page components and pages.

Custom item types can also be created to represent domain-specific data within the Hybris system.

There are mainly 4 ways of defining an item type in item.xml:

New Item Type Definition Without Extending Existing Item Type:

In this case, we are

creating an item type from scratch without extending any existing item type. This approach is used when a new

table needs to be created to store specific data.

Practical Use Case: For example, defining a table to store customer ID, feedback text, and the ratings given by a customer. This requires defining attributes for the customer feedback details, where the table is created and its persistence configuration is specified.

Attribute Data Types:

Attribute Modifiers:

New Item Type Definition by Extending Existing Item Type:

This approach involves creating

a new item type by extending an existing one. This allows new functionality to be added to an existing item

type.

Practical Use Case: For instance, adding a new attribute for a product to specify the list of country codes where the product is available. This defines a relationship between the product and the countries where it is visible.

Attribute Definition: A list of country codes is added to the new item type, specifying which countries each product is available in.

Define the New Attribute in the Existing Item Type:

In some cases, new attributes are

added to existing item types to introduce new functionality, such as adding a configurable flag to products.

Practical Use Case: A flag to indicate whether a product is configurable, allowing the business logic to filter configurable and non-configurable products accordingly.

Attribute Modifiers:

Boolean.FALSE for the configurable

flag.

Redeclaring the Existing Attribute in the Child Item:

In some scenarios, an existing

attribute needs to be redeclared in the child item type, usually to modify its properties like making it

read-only or changing its data type.

Practical Use Case: For example, redefining the "isocode" attribute in the Country

item type, which was originally defined in the parent C2LItem item type. Here, the attribute's

uniqueness constraint might be modified in the child item.

Attribute Modifiers:

concept called variable hiding which means variable with the same name is defined in both parent and child classes.

variable from Parent will be inherited but it will be hidden in the Child class as the Child class also has the

same

variable.

we can also change the variable data type in Child class keeping the same variable name.

To create a new extension in , follow these steps:

/bin/platform directory.ant extgen command: ant extgen -Dinput.template=yempty -Dinput.name=occ

-Dinput.package=com.epam.trainingyempty for an empty extension)./custom directory and is ready to be developed.This file contains the list of extensions that you are using for your commerce application. This file would be

responsible

to build all the extension for your application.

It enables developers to customize the platform’s functionality by selectively enabling or disabling

extensions,

controlling their loading order, and managing dependencies, all of which ensure the flexibility and

scalability

of the system.

The Model Life Cycle in SAP Hybris Commerce describes how a Model, which represents a database record (entity), transitions through various phases. These phases control how models are created, modified, saved, and deleted, while also providing hooks for custom logic via interceptors. The lifecycle ensures that models are managed efficiently, reflecting changes in the database only when explicitly saved, rather than automatically syncing data.

Instantiating the Model:

new keyword to

create a new instance.

ModelService: This provides a more

flexible way to create models, especially dynamically.

Example of creating an instance:

ProductModel product = new ProductModel();

or through ModelService:

ProductModel product = modelService.create(ProductModel.class);

Loading an Existing Model:

Models can be loaded from the database using different

mechanisms:

ModelService.get() method fetches a model

by its primary key.

ProductModel product = modelService.get(pk);

Modifying Model Values:

Once a model is instantiated, its properties can be modified

using standard getter/setter methods.

product.setCatalogVersion(catalogVersion);

product.setCode(code);

Saving Model Values:

After making modifications, models must be explicitly saved to

persist changes to the database. The ModelService.save() method is used for this.

modelService.save(product);

modelService.saveAll();

When saving, any referenced models (models referenced by other models) are also saved automatically if they are new or modified.

Removing the Model:

To delete a model from the database, use the remove()

method of ModelService.

modelService.remove(product);

Refreshing the Model:

To refresh a model's state from the database, the refresh()

method is used, which will discard unsaved changes.

modelService.refresh(product);

Lazy loading ensures that model properties are not loaded from the database until they are explicitly accessed. The model is instantiated with empty or default values, and the actual data is fetched from the database only when required. This reduces the initial overhead of loading unnecessary data.

The loading behavior can be controlled via the servicelayer.prefetch property:

literal: Pre-fetch only atomic attributes, not reference attributes.all: Pre-fetch all attributes, including references.CategoryModel

references a ProductModel, the product will only be saved if it is new or modified when saving the

category.

Hybris uses two different layers of data representation:

You can convert between these layers:

Product productItem = modelService.getSource(productModel);

CartModel cartModel = modelService.get(cartItem);

ModelService provides methods to create models dynamically,

attach them to the context, and apply default values.

This lifecycle ensures that the models are efficiently managed, changes are tracked, and database persistence is optimized in the Hybris Commerce platform.

Impex files are used for importing and exporting data to and from the system. The Impex header defines the structure and metadata for the import data, specifying how the data should be handled, which attributes are involved, and additional settings for the import process.

default keyword is used to specify default values for attributes that are not provided during

an import. This keyword can be used for attributes that should have a fixed value if not otherwise specified in

the import data.

INSERT_UPDATE Product; code[unique=true]; name[lang=en]; price[default=0.0]

In this example, if the price attribute is not provided in the import data, the system will assign a default value of

0.0.

unique keyword indicates that the attribute must have a unique value. This ensures that no

duplicate values are allowed for the specified attribute. Typically, this is used for identifiers like product

codes, category codes, etc.

INSERT_UPDATE Product; code[unique=true]; name[lang=en]

Here, the code attribute must have a unique value for each product. The unique=true setting

enforces uniqueness on the code field.

mode keyword controls how the Impex data is processed. It determines whether the Impex file

will insert, update, or both (insert or update) data in the Hybris system. The typical modes are:

INSERT_UPDATE Product; code[unique=true]; name[lang=en]; price

In this case, the mode INSERT_UPDATE means that if a product with the specified code

exists, it will be updated; otherwise, a new product will be inserted.

INSERT_UPDATE Product; code[unique=true]; name[lang=en]; description[lang=en]

Here, the name and description attributes are specific to the English language (lang=en).

collection keyword is used to define a list or collection of items. It is often used for

attributes that refer to related items, such as products in a category.

INSERT_UPDATE Category; code[unique=true]; products(code)[collection=true]

Here, products(code) indicates that the products attribute is a collection, and multiple

product codes can be provided.

optional keyword specifies that a given attribute is optional, meaning that the import will

proceed even if the attribute is not provided in the Impex data.

INSERT_UPDATE Product; code[unique=true]; name[lang=en]; description[optional=true]

In this case, the description attribute is optional, so it is not required to be included in the import

data.

| Keyword | Description |

|---|---|

| default | Defines default values for attributes that are not provided in the import. |

| unique | Enforces uniqueness on the attribute value. |

| mode | Defines the operation mode: insert, update, insert_update, or delete.

|

| lang | Specifies language-specific values for localized attributes. |

| collection | Indicates a collection or list of related items. |

| optional | Marks an attribute as optional, meaning it can be left out. |

These keywords help define the structure, behavior, and rules for importing and managing data within Hybris.

Business processes are workflows that define the operations and transactions that occur within an e-commerce environment. These processes are essential for automating tasks, streamlining operations, and ensuring efficient execution of business logic. The processes run asynchronously, meaning actions are executed independently, enabling a smooth flow of operations without blocking other tasks. Business processes are defined through XML files, where each process consists of nodes (actions, decisions, events, etc.) that guide the workflow from one step to another.

This structure ensures that the business process executes the defined workflow reliably and asynchronously, with each step being handled appropriately based on the system state and external interactions.

Business Process Engine:

The Business Process Engine allows for the

asynchronous execution of business processes, which are defined in XML. The engine ensures that each action in

the process is completed before transitioning to the next. A transition is the link between two actions, guiding

the workflow based on the outcome of a previous action. The process engine persists the state of the process,

meaning that even if a system crash occurs, the process will resume from its last known state after recovery.

Process Definition:

A process definition specifies the sequence of steps

(nodes) that make up the workflow. Each node represents a step in the process, and actions within those nodes

determine the next step. The nodes include action nodes, wait nodes, notify nodes, and end nodes. Transitions

between nodes dictate how the process progresses based on the outcomes (e.g., success or failure).

Action Nodes:

Action nodes are critical as they execute the business logic. Each action

is linked to a bean that performs a specific function, and the outcome of this action determines the next step

in the process. If the action succeeds, the process moves to one node, and if it fails, it moves to another.

Wait Nodes:

Wait nodes are used when the process needs to wait for external events or

systems to complete a task before continuing. These events might be tied to external systems or processes that

trigger the next step in the workflow.

Notify Nodes:

Notify nodes are used to send notifications to user groups or specific

users at certain points in the process, updating them on the status or actions required.

End Nodes:

End nodes mark the completion of a business process. These nodes store the

final state of the process (e.g., success, error) and typically define messages like "Process completed

successfully" or "Process failed."

Consider a simple process where, after an order is placed, email notifications are sent to the customer and the business partners. This example could involve the following sequence of steps:

Asynchronous Execution: By default, Hybris executes business process actions asynchronously, allowing each action to run independently without blocking others. This ensures a responsive system where processes can operate concurrently.

Synchronous Execution: In some cases, actions must be run synchronously, i.e., one after

another within the same task. This can be explicitly configured by modifying properties in the local.properties

file to ensure actions in a business process do not proceed until the current action completes.

placeOrderNotificationProcess.xml, which specifies the sequence of actions, transitions, and end

states.

spring.xml),

linking the defined XML process to the system.

BusinessProcessService

to create and start the process instance when an order is placed.

Business processes are a series of steps or activities that repeat over time. Business process management in SAP Commerce helps identify, define, document, control, and optimize these processes, integrating both automated and human-driven activities.

Caching is essential for enhancing the performance and scalability of a system by storing frequently accessed data and minimizing the need to repeatedly fetch it from databases or other external sources. In Hybris, caching is integrated within the persistence layer and is responsible for storing search results, item attributes, and item instances. When a query is made, the cache checks if the data is available; if not, the data is retrieved from the database and written to the cache for future use.

When the cache reaches its maximum capacity and can no longer accommodate new entries, a displacement strategy is employed to remove older data. Additionally, when the data in the cache becomes outdated and no longer matches the database, an invalidation strategy is used to invalidate and refresh the cache.

Item Cache:

The item cache stores model objects or items retrieved from the database. It

reduces the load on the database by caching items that are accessed frequently. The configuration for item

caching is generally specified in the project.properties or local.properties file.

Attribute Cache:

This cache stores frequently accessed attributes of items, eliminating

the need to reload the entire item from the database. It can be configured at the attribute level, allowing

specific attributes to be cached individually.

Region Cache:

The region cache divides the cache into multiple segments, referred to as

cache regions. Each region can hold specific types of data, offering the flexibility to cache certain data for

longer durations while expiring other data more quickly. This segmentation allows for better management of cache

resources, optimizing performance.

CMS Site Cache:

This cache stores site-specific data that improves the performance of

content management functionality in Hybris.

The region cache provides flexibility by partitioning the cache into multiple regions, each dedicated to specific data types. This configuration ensures that some objects remain in the cache longer, while others may be evicted sooner due to limited cache space.

Regions can be configured separately to manage different types of data and control cache sizes and eviction strategies.

Least Recently Used (LRU):

LRU evicts the least recently used entries first when the

cache needs to make space for new data. This is a common and straightforward eviction policy.

Least Frequently Used (LFU):

LFU evicts the least frequently accessed entries first. It

prioritizes retaining the entries that are accessed most often.

First-In-First-Out (FIFO):

FIFO evicts the oldest entries first based on the order in

which they were added to the cache.

You can modify the region cache settings by updating values in the local.properties file, which allows

you to adjust preconfigured values and override defaults.

For example, you can configure the size of the entity region or specify the eviction policy to be used, such as LRU or FIFO.

Hybris also allows you to implement custom cache regions using different caching implementations, such as Ehcache. You can define a new region cache with specific settings, including maximum entries, eviction policies, and the types of data to be cached.

A custom cache region can be configured using the Spring configuration framework, specifying parameters like the region name, maximum entries, eviction policy, and the data types handled by the region.

A catalog serves as a structured repository for product and content data, enabling the organization and presentation of e-commerce items and associated content in a cohesive manner. Catalogs are vital for managing product listings, pricing, promotions, and other related data, enhancing the overall user experience on the platform. They help businesses organize products into categories and offer content that can be managed to create targeted marketing and customer engagement strategies. Effective catalog management withensures that the content is up-to-date and that product data is consistent across different channels.

Hybris supports two primary types of catalogs:

Content Catalog: A content catalog helps businesses manage and organize content such as images, videos, banners, and other media for the e-commerce platform. It allows businesses to create rich, dynamic content experiences for customers, facilitating engagement and driving conversions.

Product Catalog: The product catalog is used for managing the data related to the products sold on the e-commerce site. It includes information like product attributes (name, description, price, images) and categorizes products into predefined groups, making it easier to structure and find products. A single Hybris installation can support multiple catalogs and catalog versions.

Catalog versions enable the management of changes to products and content data over time. They allow businesses to make adjustments in a controlled manner and ensure the integrity of product listings. Two main types of catalog versions are typically used:

Staged Catalog Version: The "Staged" catalog version is used for making changes and testing modifications in a sandbox environment. Changes here are not immediately visible to customers. It acts as a preview or validation space where product data, pricing, and content updates can be thoroughly tested and reviewed before going live.

Online Catalog Version: The "Online" catalog version is the live version that customers interact with. It reflects the current state of the product catalog and is directly visible on the e-commerce site. Any updates made in the online version are immediately accessible to customers, ensuring they see the most current product data, availability, and pricing.

Catalog synchronization is the process of transferring updates made in the "Staged" catalog version to the "Online" catalog version. This ensures that the live store reflects the latest validated product and content data. Synchronization is crucial for maintaining the accuracy and consistency of product listings in real-time.

Before synchronization, it’s essential that the staged catalog has undergone thorough testing and review to ensure no issues arise when the changes are applied to the online store.

Delta synchronization refers to synchronizing only the items that have been changed (added, updated, or deleted) in the source catalog version since the last synchronization. This approach improves performance by avoiding the synchronization of unchanged items.

Delta synchronization is a powerful optimization technique that focuses on syncing only the changed (delta) items based on timestamps. This mechanism is essential for efficient catalog management, especially in environments with large and frequently updated catalogs. By leveraging timestamps and sync jobs, delta synchronization ensures performance and data consistency between staged and online catalog versions.

Change Tracking Mechanism

modifiedTimestamp field that

indicates when it was last changed.

syncTimestamp)

for each synchronized item in the source and target catalog versions.

modifiedTimestamp of an item in the staged version is newer than its syncTimestamp

in the online version, the item is flagged for synchronization.

Delta Computation During Sync

modifiedTimestamp of each item in the source catalog.syncTimestamp of the corresponding item in the target catalog.Database Query Optimization

modifiedTimestamp > syncTimestamp are included in the

synchronization queue.

Partial Synchronization

modifiedTimestamp <= syncTimestamp) are skipped.Delta synchronization can be triggered under the following conditions:

Synchronization in catalog versions is needed to keep the staged and online catalog versions consistent, ensuring that changes made in the staged catalog are properly reflected in the online catalog. Hybris determines the need for synchronization based on certain criteria, configurations, and states of the catalog items. Here's how Hybris identifies when synchronization is required:

ItemSyncTimestamp).

sync attribute.Hybris determines the need for synchronization based on:

Properly configured synchronization jobs and workflows ensure consistency between catalog versions while minimizing unnecessary sync operations.

Impex is a powerful import/export tool, designed primarily for inserting, updating, or

deleting data in

the database from flat files (such as CSV). It allows batch operations for managing data within

the Hybris system. It

can be used for importing product data, updating catalog information, or managing complex

configurations like user

groups and permissions.

PageTemplateModel:

INSERT_UPDATE PageTemplate; code[unique = true]; name[lang = en]; catalogVersion(catalog(id), version)[unique = true]

; myPageTemplate; "My Page Template" ; {catalogVersion}

items.xml).catalogVersion).

FlexibleSearch is a query language used to retrieve data from the database in a more

dynamic way. It is an

abstraction layer over SQL, making it easier to write database queries while remaining

database-agnostic. FlexibleSearch

automatically adapts to the underlying database system (e.g., MySQL, SAP HANA)

and allows you to execute queries using

the Hybris FlexibleSearch API.

SELECT * FROM {Order}

date is not null:

SELECT * FROM {Order} WHERE {date} IS NOT NULL

| Feature | Impex | FlexibleSearch |

|---|---|---|

| Purpose | Data import/export, batch data manipulation. | Data retrieval from the database. |

| Used for importing, inserting, and updating data in the database. | Used for querying and retrieving data from the database. | |

| Use Case | Batch operations for managing data (e.g., product data, user groups). | Querying specific data from Hybris items. |

| Used for inserting, updating, and deleting large sets of data (products, categories, etc.). | Used for querying data from the database for reports, filtering, and searching. | |

| Data Handling | Works primarily with CSV-like data in flat files. | Works with Hybris database and model objects. |

| Query Type | No querying; it’s based on predefined actions like INSERT, UPDATE,

REMOVE.

|

Supports complex queries with filters, joins, and sorting. |

| Read/Write | Both read and write (can insert, update, or delete data). | Read-only (used for data retrieval). |

| Performance | Optimized for batch processing and large imports/exports. | Optimized for querying and retrieving data in real-time. |

| Context Support | Supports dynamic data population using context-based values. | Allows querying of data objects and relations. |

| Flexibility | Limited flexibility, as it is mainly for predefined operations on datasets. | Highly flexible in terms of querying and data retrieval. |

| Language | Flat-file format with specific syntax. | SQL-like query language with object-oriented syntax. |

| Syntax | Declarative syntax with INSERT_UPDATE. |

SQL-like syntax with a focus on object-oriented queries. |

| Flexibility | Primarily used for data imports and updates; not used for dynamic querying. | More flexible in querying with dynamic parameters and complex conditions. |

| Modification | Used to insert or update records in the database. | Does not modify data, only fetches it. |

| Database Support | Database-independent, but based on predefined item types. | Database-agnostic, adapts to various underlying databases. |

| Output | Affects the database by inserting or updating records. | Returns results in a SearchResult object (list of models). |

| Example Use | Importing new products, updating user group configurations. | Fetching orders based on their status or products based on a category. |

FlexibleSearch supports parameters, making it more dynamic and adaptable for real-time queries.

Example:

private static final String GET_ORDERS = "SELECT {PK} FROM {Order} WHERE {status}=?status";

FlexibleSearchQuery query = new FlexibleSearchQuery(GET_ORDERS);

query.

addQueryParameter("status",OrderStatus.COMPLETED);

SearchResult<OrderModel> result = flexibleSearchService.search(query);

List<OrderModel> orders = result.getResult();

This example demonstrates how a parameterized query is used to dynamically fetch orders that are completed.

Impex should be used when:

FlexibleSearch should be used when:

Hybris supports importing data via CSV (Comma-Separated Values) format, but with certain specific rules and

configurations.

Here's how to use the ImpEx CSV format.

code[unique=true];name[lang=en];catalogVersion(catalog(id),version);price

product001;Product 1;{electronicsCatalog:online};100.00

product002;Product 2;{electronicsCatalog:online};200.00

Here, each column in the CSV represents an attribute of the Product item type:

# This Impex script imports or updates products.

INSERT_UPDATE Product; code[unique = true]; name[lang = en] ; catalogVersion(catalog(id), version); price

product001 ; Product 1 ; {electronicsCatalog:online} ; 100.00

product002 ; Product 2 ; {electronicsCatalog:online} ; 200.00

INSERT_UPDATE: Ensures that if the product exists, it will be updated; if not, it will be inserted.

catalogVersion(catalog(id), version): References the catalog and version.You can access Groovy scripting in ImpEx to add logic or retrieve external data. The

Groovy code can be used to

enhance or manipulate the import data dynamically.

# Define variables for dynamic logic

$catalogVersion = {electronicsCatalog:online}

$price = 150.00

# Groovy Script to create product and set attributes

"#% import de.hybris.platform.servicelayer.search.FlexibleSearchQuery;"

"#% def catalogVersion = catalogVersionService.getCatalogVersion('electronicsCatalog', 'online');"

"#% def price = 150.00;"

# Use the Groovy variable in Impex

INSERT_UPDATE Product; code[unique = true]; name[lang = en] ; catalogVersion(catalog(id), version); price

product003 ; Product 3 ; {$catalogVersion} ; {$price}

Groovy logic runs before the Impex lines are executed. It dynamically determines catalog

versions or computesYou can include conditional logic and variables in your Impex scripts to make them more flexible.

You can conditionally insert or update data based on the value of a variable:

$var = "true"

#% if: "$var.equals('true')"

INSERT_UPDATE Product; code[unique = true]; name[lang = en] ; catalogVersion(catalog(id), version); price

product004 ; Product 4 ; {electronicsCatalog:online} ; 250.00

#% endif;

$var equals "true".Macros can be created to define reusable data or behavior.

$item = "Product"

INSERT_UPDATE $item; code[unique = true]; name[lang = en] ; catalogVersion(catalog(id), version); price

product005 ; Product 5 ; {electronicsCatalog:online} ; 300.00

$item is a macro, and its value can be reused across the script.You can import data from external files or SQL databases directly into Hybris via ImpEx.

# Importing external data from a file

#% impex.includeExternalData("external_data.csv", "UTF-8", 0);

INSERT_UPDATE Product; code[unique = true]; name[lang = en]; price

#% impex.includeExternalData("external_data.csv", "UTF-8", 0);

impex.includeExternalData allows importing data from an external CSV into your system.You can also import data directly from an SQL database using impex.includeSQLData.

#% impex.initDatabase("jdbc:mysql://localhost/testdb?user=testuser&password=testpass", "com.mysql.jdbc.Driver");

impex.includeSQLData("SELECT code, name FROM Products");

To remove data from a specific item type (e.g., Product), use the

REMOVE operation.

$item = Product

REMOVE $item[batchmode = true]; code[unique = true];

$product

Hybris supports maps and collections within ImpEx. For example, for a product with multiple categories:

INSERT_UPDATE Product; code[unique = true]; categories(code)

product007 ; Electronics|HomeAppliances

Here, categories(code) is a map relationship where multiple values can be inserted in one go.

You can set validation modes to relaxed or strict depending on how

the imports should handle missing or

inconsistent data.

#% impex.setValidationMode("import_relaxed");

INSERT_UPDATE Product; code[unique = true]; name[lang = en] ; catalogVersion(catalog(id), version); price

product008 ; Product 8 ; {electronicsCatalog:online} ; 150.00

import_relaxed allows ignoring mandatory field validation errors, while import_strict

will enforce them.

Creating item types is a flexible process that allows for customization and extension of the platform's

data

models. You can create new item types from scratch, extend existing ones, or modify them with new

attributes, depending

on the requirements of your project. Each method ensures that the corresponding database

structure and Java classes are

correctly generated and managed by the system.

Creating new item types is a critical part of managing data models for business applications. Item types

are

akin to database tables and their attributes represent the columns of those tables. Item types can be defined in

three

primary ways: as new standalone types, by extending existing types, or by modifying existing types with new

attributes.

Each method comes with specific configurations, such as generating Java classes, creating database tables,

and

managing attribute persistence.

To create a new Item Type, you must define the item type in the items.xml

file within your extension.

There are three main approaches:

In this method, you define a completely new item type without inheriting from any existing ones, such as GenericItem.

Example:

<itemtype code="DeliveryArea"

autocreate="true"

generate="true"

jaloclass="com.custom.core.jalo.DeliveryArea">

<description>The delivery area for an order</description>

<deployment table="deliveryArea" typecode="10502"/>

<attributes>

<attribute qualifier="code" type="java.lang.String">

<description>Area code</description>

<modifiers optional="false" unique="true" initial="true"/>

<persistence type="property"/>

</attribute>

<attribute qualifier="name" type="localized:java.lang.String">

<description>Area name</description>

<modifiers optional="false"/>

<persistence type="property"/>

</attribute>

</attributes>

</itemtype>

You can extend an existing item type (e.g., Product), inheriting its attributes and functionality, and

then add custom

attributes or logic.

Example:

<itemtype generate="true"

code="MyProduct"

jaloclass="com.hybris.backoffice.jalo.MyProduct"

extends="Product"

autocreate="true">

<attributes>

<attribute qualifier="myExampleField" type="java.lang.String">

<description>My Example Initial String Value</description>

<modifiers/>

<persistence type="property"/>

</attribute>

</attributes>

</itemtype>

MyProduct is an extension of the

existing Product type, inheriting itsInstead of defining an entirely new type, you can add new attributes to an existing item type, without modifying

its

structure. This is particularly useful when extending existing functionalities.

Example:

<itemtype code="Cart" generate="false" autocreate="false">

<attributes>

<attribute qualifier="subscription" type="Subscription">

<persistence type="property"/>

<modifiers/>

</attribute>

</attributes>

</itemtype>

unique, optional, etc.

persistence

type="property") orConfiguring a CronJob involves creating the CronJob model, implementing the job logic in a

JobPerformable

class, and linking everything through Spring beans and Cron expressions. The CronJob system is useful for running

periodic tasks in the background, such as synchronization, cleaning, and data processing. Once set up, you can

schedule the execution of the job and manage its lifecycle through the HMC or Backoffice.

a CronJob is used to automate and schedule background tasks, such as catalog synchronization, data indexing, and cart cleaning. It consists of three main components: CronJob, Job, and Trigger. The Job defines the logic, the CronJob holds configurations like inputs for the job, and the Trigger schedules the job execution based on Cron expressions. This guide covers how to configure a new CronJob by defining the necessary components and setting up a job for scheduled execution.

creating a new CronJob involves defining and configuring several components: CronJob model, Job (performable), and Trigger. Here’s a step-by-step guide to configure a new CronJob:

The CronJob holds the configurations for the job, such as inputs, and represents a single run of the

job. You can create a new CronJob model by extending the CronJob item type.

Example:

<itemtype code="HelloWorldCronJob" extends="CronJob" jaloclass="com.stackextend.training.core.jalo.HelloWorldCronJob">

<attributes>

<attribute qualifier="firstName" type="java.lang.String">

<modifiers/>

<persistence type="property"/>

</attribute>

</attributes>

</itemtype>

The Job contains the business logic to be executed., you generally create a

JobPerformable

class to implement the business logic. The class should extend AbstractJobPerformable and implement the

perform method.

Example:

public class HelloWorldJob extends AbstractJobPerformable<HelloWorldCronJobModel> {

@Override

public PerformResult perform(HelloWorldCronJobModel cronJobModel) {

try {

// Retrieve firstName from the cronJob model

String firstName = cronJobModel.getFirstName();

// Display the greeting

System.out.println("Hello " + firstName);

// Return success status

return new PerformResult(CronJobResult.SUCCESS, CronJobStatus.FINISHED);

} catch (Exception e) {

// Return error status if an exception occurs

return new PerformResult(CronJobResult.ERROR, CronJobStatus.ABORTED);

}

}

}

Once the JobPerformable is implemented, register the class as a Spring bean.

Spring Bean Definition Example:

<bean id="helloWorldJob" class="com.stackextend.training.core.job.HelloWorldJob" parent="abstractJobPerformable">

<!-- Other bean configurations if needed -->

</bean>

helloWorldJob) will be used in the next step.Create an instance of the ServicelayerJob and associate it with the Spring bean defined in the previous

step.

Example:

INSERT_UPDATE ServicelayerJob; code[unique=true]; springId

; helloWorldJob ; helloWorldJob

ServicelayerJob with the helloWorldJob bean in the Spring

context.

Once the CronJob model and job are set up, create an instance of the CronJob, linking it to the job and providing any necessary input parameters.

Example:

INSERT_UPDATE HelloWorldCronJob; code[unique=true]; job(code); firstName; sessionLanguage(isocode); sessionCurrency(isocode)

; helloWorldCronJob; helloWorldJob; Mouad; en; EUR

The Trigger defines when the CronJob should be executed, using a Cron expression. You can define a trigger to schedule the CronJob at a specific time or interval.

Example:

INSERT_UPDATE Trigger; cronjob(code)[unique=true]; cronExpression

; helloWorldCronJob ; 0 0 12 ? * SUN *

After configuring the CronJob, you can run it manually through the HMC or Backoffice, or it will execute automatically based on the Trigger configuration.

Internationalization is essential for delivering localized content to users across different languages, currencies, and countries. Through the use of localized attributes, types, and content management interfaces in Backoffice and Storefront, Hybris supports a seamless multilingual experience. Additionally, support for multi-currency, multi-country configurations, and a fallback mechanism ensures the platform can scale to meet diverse regional requirements. Properly configuring these components guarantees an optimal user experience for global audiences.

Internationalization (i18n) enables applications to support multiple languages, currencies, and regions, ensuring that content is presented according to a user's locale. Hybris offers built-in support for managing translations of various elements like product descriptions, categories, and static content. To achieve this, it provides mechanisms such as localized attributes, localized types, and multi-language support through configuration. This guide outlines how to configure and implement internationalization in a Hybris-based system.

Hybris provides a comprehensive internationalization (i18n) framework that allows you to manage multiple languages, currencies, and country-specific content. Here's how you can handle i18n effectively within a Hybris system.

Hybris allows you to configure multiple languages for your storefront and backoffice. These languages are tied to the system's locale.

Add Languages: In the hybris/config folder, add languages in the locales.properties

file.

Example:

supported.languages=en, de, fr, es

This configuration will allow the system to support English, German, French, and Spanish.

Language Management: You can manage languages via the Hybris Administration Console

(HAC) by navigating to the Internationalization section where you can add and configure

new languages.

Localized attributes allow you to store different values of an attribute for each language. For example, a product's description might be different in English and French.

Localized Attribute Configuration: In the item type definition, you use the

localized keyword to define which attributes should support multiple languages.

Example:

<attribute qualifier="description" type="localized:java.lang.String">

<modifiers optional="false"/>

<persistence type="property"/>

</attribute>

localized prefix indicates that the attribute will have different values for each

supported language.

Accessing Localized Data: Hybris provides mechanisms to retrieve localized content through

the use of LocalizedValue objects. You can access localized values programmatically using the

getDescription() method, which returns the description in the current language.

Hybris supports Localized Types that are extended from the LocalizedType class. This is

useful when you need to define multiple variants of an item based on the language.

Example: If you have a product with localized attributes, the product type should extend

LocalizedType.

Example:

<itemtype code="Product" extends="Product">

<attributes>

<attribute qualifier="localizedDescription" type="localized:java.lang.String">

<modifiers optional="false"/>

<persistence type="property"/>

</attribute>

</attributes>

</itemtype>

When a user accesses the product, the appropriate localized description will be returned based on their preferred language.

To manage localized content effectively, Hybris provides user interfaces in the Backoffice and HMC that allow content managers to provide different translations for content such as product descriptions, category names, and banners.

Backoffice: Users can select the language for each field in the product, category, or content page. For example, the "Description" field will allow entries for each supported language.

Storefront: The storefront will automatically display content in the language associated with the user’s locale. The content management system handles the different translations.

Hybris provides multi-currency and multi-country configurations to manage country-specific content such as pricing, shipping methods, and promotions.

Currency Management: You can configure different currencies in the system through HAC or Backoffice. This includes defining exchange rates, supported currencies, and mapping them to specific locales.

Country-Specific Content: In the HAC or Backoffice, you can configure country-specific content, including product catalogs and promotions, to ensure they meet the specific requirements of each country.

When a translation for a given language is unavailable, Hybris uses a fallback mechanism. By default, if the content in the requested language is missing, the system will display the content in the fallback language (usually English). You can configure fallback languages to ensure a seamless user experience even when content is not available in the preferred language.

LocalizedValue Class for Programmatic Accessthe LocalizedValue class is used to store localized values for attributes. This allows

content to be managed in multiple languages.

public String getLocalizedDescription(Product product, Language language) {

LocalizedValue localizedValue = product.getDescription();

return localizedValue.get(language.getIsocode());

}

For translating content, the FlexibleSearch queries can be used to export content for translation purposes. Additionally, Hybris offers SAP Translation Hub integration, which allows easier management and automated translation of content across various languages.

For dynamic content (such as user-generated content), Hybris provides ways to handle multi-language support programmatically by customizing controllers and views to ensure content is presented in the user's preferred language.

Hybris also provides functionality to support SEO and URL localization, so URLs can be adapted based on language and

country. For example, the product detail page might be accessible via /en/product/123 for English users

and /de/produkt/123 for German users.

promotions are powerful but require custom implementation or strategic configuration to handle advanced use cases effectively. Understanding OOTB limitations and proactively addressing them with tailored logic ensures seamless promotion functionality while enhancing the customer experience.

robust support for promotions, but certain mechanics, such as "Buy One Get One Free" (BOGOF), multi-category promotions, and customer-specific discounts, reveal limitations in the Out-of-the-Box (OOTB) implementation. While basic configurations are possible, edge cases and more advanced requirements, like group-specific pricing or flexible free item handling, often require custom logic. Additionally, nuances such as order-of-operations issues in delivery discounts and complications with grouping strategies can impact expected functionality. This detailed overview identifies these gaps and offers insights into best practices for implementation.

Two Implementation Options:

Custom Workarounds:

Custom Actions and Conditions:

Optimize Configuration for Prioritization:

Leverage External Systems:

Analyze Business Needs:

's Promotion Engine, coupled with its modular design, delivers a powerful framework for creating tailored, impactful promotions. Its support for customizations ensures it can meet both standard and complex promotional needs, enabling businesses to enhance customer satisfaction and drive sales effectively.

Promotions leverage a robust Promotion Engine to implement various marketing strategies, from basic discounts to complex custom rules. The Promotion Engine includes essential modules like the Rule Engine, Coupon Module, and Timed Access Promotion Engine Module. Its implementation sequence integrates seamlessly with cart updates, recalculations, and rule evaluations while allowing advanced customization through custom conditions, actions, and facts. Custom promotions like rewarding reviews with group-based discounts showcase the engine's adaptability for unique business requirements.

Sequence of Operations:

Cart Update Trigger: Promotions are reevaluated whenever the cart changes (e.g., adding/removing products).

Promotion Evaluation: The system:

Rule Execution: The Drools Rule Engine evaluates rules and applies actions, such as:

Action Persistence: RAOs representing actions (discounts, messages, etc.) are processed using strategies:

Final Recalculation: Cart recalculations incorporate promotion results into the final order.

Custom promotions extend the flexibility of the Promotion Engine for scenarios not covered out of the box.

Extension Creation:

custompromotionengine extension for custom rules.Define New RAOs:

Custom Conditions and Actions:

Strategy Implementation:

Testing and Deployment:

Scenario: Customers writing product reviews gain access to a unique promotion.

Custom Promotion:

reviewedProductsCustomerGroup).Out-of-the-Box Promotion:

reviewedProductsCustomerGroup.This highlights Hybris's ability to blend standard and custom rules for tailored customer incentives.

Purpose it separating business logic from persistence logic, ensuring that the core functionalities of the platform are modular, extensible, and maintainable. It adheres to service-oriented architecture principles and provides a clean framework for developing and extending services. By focusing on business logic, the ServiceLayer allows developers to interact with the platform's models and manage system events efficiently. It offers hooks into lifecycle events, enabling custom business rules and event handling.

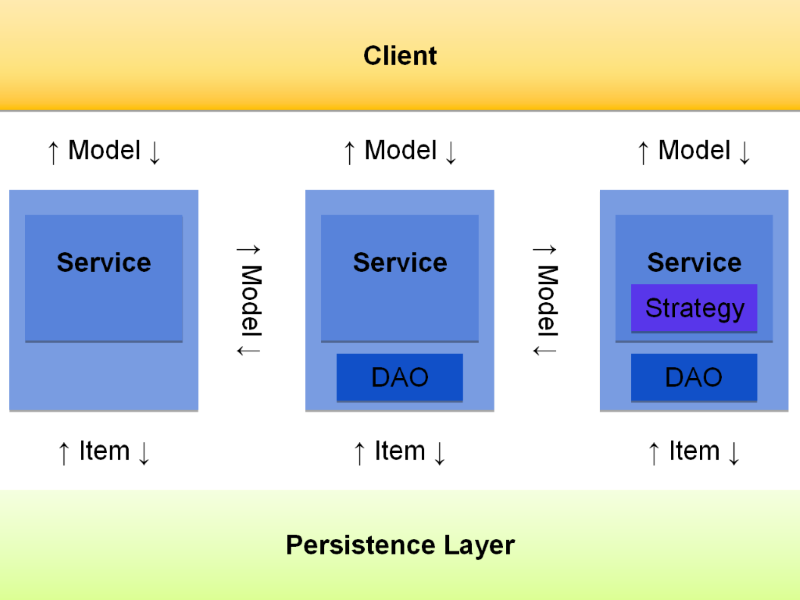

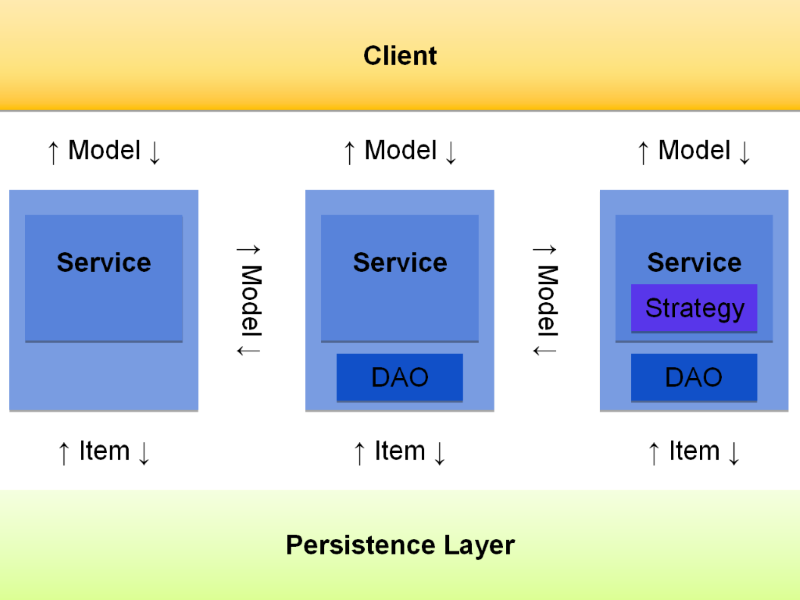

The ServiceLayer is an integral part of the architecture, sitting on top of the persistence layer and responsible for encapsulating business logic. It serves as the bridge between the persistence layer (which interacts with the database) and the client components (such as controllers, scripts, and services). The primary function of the ServiceLayer is to provide services that execute business rules while maintaining separation from the data access and storage logic.

Service-Oriented Architecture (SOA):

The ServiceLayer is based on service-oriented

architecture, which promotes loose coupling, modularity, and flexibility. This architecture ensures

that business logic can be encapsulated within independent services that can be easily extended or replaced

without affecting the core system.

Separation of Business and Persistence Logic:

One of the key principles of the

ServiceLayer is the clear separation between business logic and persistence logic. The services

handle only the functional aspects of the application, while the data access logic is handled separately by

repositories or DAOs (Data Access Objects). This ensures that the system is maintainable, testable, and easier

to extend.

Well-Defined Responsibilities:

Each service in the ServiceLayer is responsible for a

specific piece of functionality, whether it’s handling user management, order processing, or product catalog

management. This clear responsibility division makes it easier for developers to extend and

maintain the codebase.

Extensibility:

The ServiceLayer provides an architecture that supports both custom

service development and the extension of existing services. Custom services can be developed by

following the same patterns as existing services, ensuring consistency across the platform.

Based on the Spring Framework:

The ServiceLayer is built on the Spring

Framework, utilizing Spring’s features like dependency injection and transaction

management. Spring’s powerful dependency injection ensures that services are loosely coupled and that

dependencies can be injected into the services dynamically.

Common Design Patterns:

The ServiceLayer leverages design patterns like interface-oriented

design and dependency injection to ensure scalability and modularity. Services are

typically implemented through interfaces, which decouple the service’s interface from its implementation,

facilitating better maintainability and testability.

Lifecycle Hooks:

The ServiceLayer provides hooks into model lifecycle

events and system lifecycle events. For instance, it allows for custom logic to be

executed when a model is created, updated, or deleted, as well as during the initialization of the system or

when specific updates occur. This ensures that business logic can be executed automatically in response to

changes in the system.

Event Publishing and Subscription:

The ServiceLayer provides a framework for publishing

and receiving events. Events can be published when specific actions or changes occur in the system, such as when

an order is placed, a product is updated, or a promotion is applied. These events can trigger external services

or processes, such as notifications or further business logic.

The ServiceLayer is built using a combination of different architectural concepts. Some of these concepts are optional, while others are mandatory.

The ServiceLayer can be seen as a layer of services built on top of the persistence layer. These services are further divided into subcomponents.

In this context, a client refers to any software component that interacts with the ServiceLayer, such as:

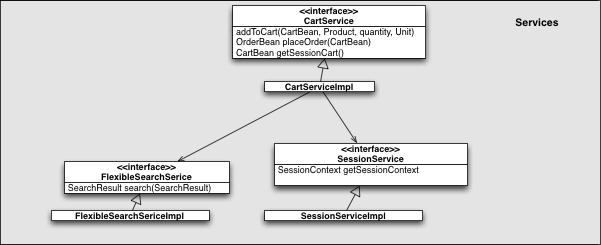

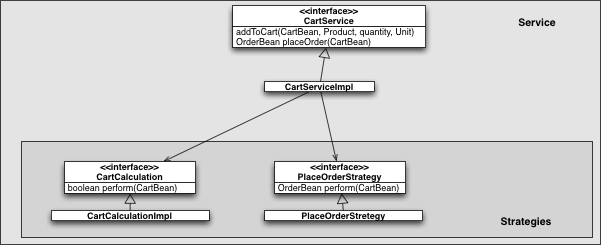

A service encapsulates the logic for performing specific business processes and provides this logic through public methods, typically defined in a Java interface. These methods generally operate on model objects, like products or orders.

Services are designed to abstract away the persistence layer, meaning they focus purely on business logic without handling database operations directly. The goal is to minimize the coupling between services and the underlying persistence layer.

SAP Commerce exposes its full functionality through services, which can be categorized into three types:

Service methods should be designed to be as fine-grained as possible to promote reusability.

Extensions must expose their functionality as services. Each extension can provide multiple services, depending on the needs of the business logic.

While services can interact with other services, it’s recommended to keep these interactions to a minimum to avoid tightly coupled components.

A service can delegate tasks to smaller components called strategies. These strategies are more focused and specialized, making them easier to adapt or replace without affecting the rest of the system. From the client's perspective, the service still offers a stable API, while internally, the functionality is divided into smaller, more manageable parts.

A DAO is an interface used for interacting with the storage backend (e.g., database). It handles tasks like saving, removing, and retrieving models. DAOs encapsulate SQL or FlexibleSearch queries, ensuring that the persistence logic remains separate from the rest of the application.